A Practical Guide for Designing, Developing, and Deploying Production-Grade Agentic AI Workflows

Authors: Eranga Bandara, Ross Gore, Peter Foytik, Sachin Shetty, Ravi Mukkamala, Abdul Rahman, Xueping Liang, Safdar H. Bouka, Amin Hass, Sachini Rajapakse, Ng Wee Keong, Kasun De Zoysae, Aruna Withanage, Nilaan Loganathan

Paper: https://arxiv.org/abs/2512.08769

Code: https://gitlab.com/rahasak-labs/podcast-workflow

TL;DR

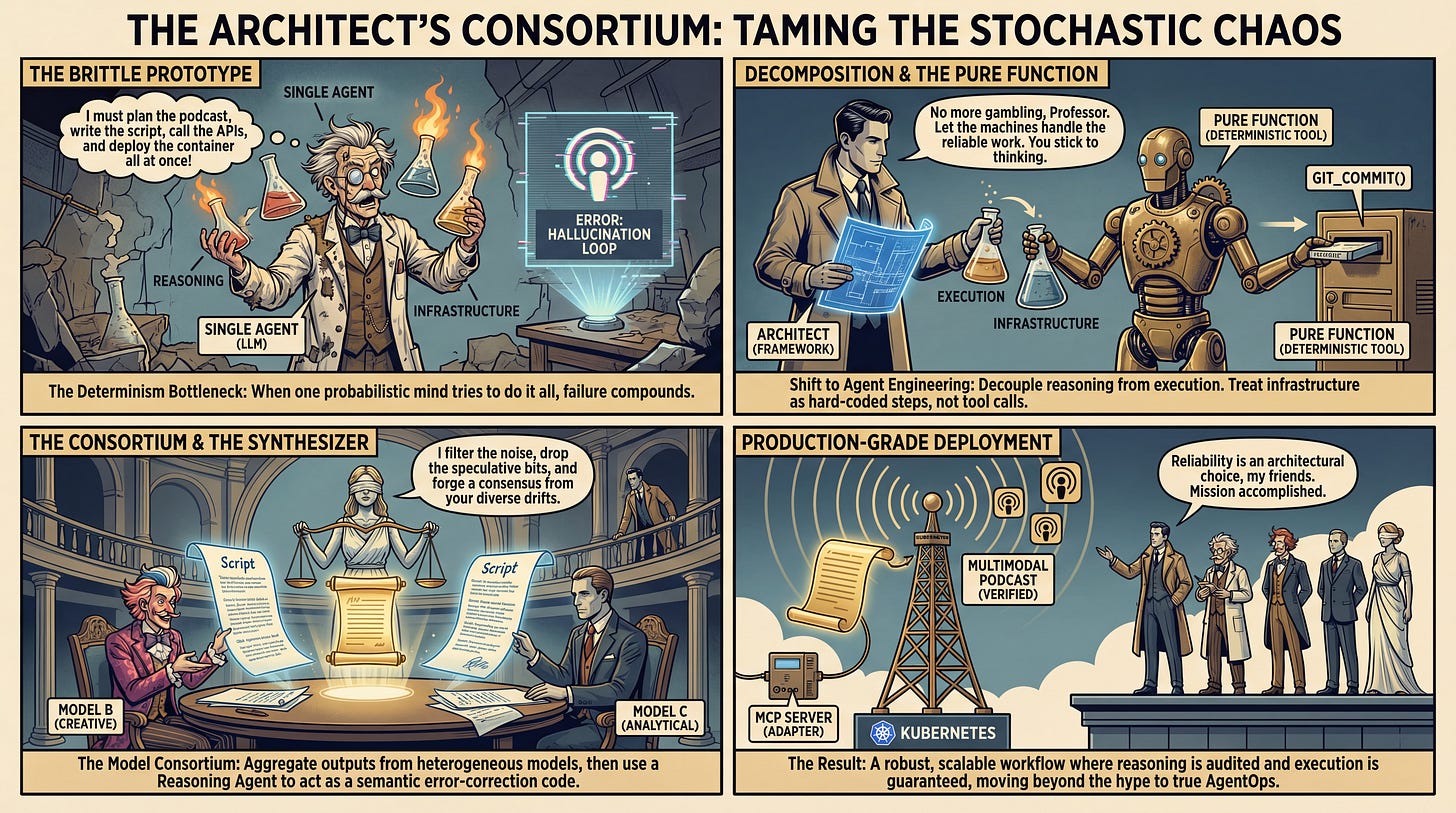

WHAT was done? The authors present a comprehensive engineering framework for transitioning agentic AI from experimental notebooks to production-grade Kubernetes environments. Using a “News-to-Podcast” generation workflow as a case study, they propose nine specific design patterns—such as “Pure Functions over Tool Calls” and “Consortium-based Reasoning”—to mitigate the non-determinism inherent in Large Language Models (LLMs).

WHY it matters? As organizations move beyond single-prompt completion to multi-step agentic workflows, reliability becomes the primary bottleneck. This paper provides a necessary blueprint for AgentOps, demonstrating how to decouple reasoning from execution and establishing that strict software engineering principles (like the Single Responsibility Principle) are even more critical when the compute engine is probabilistic.

Details

The Determinism Bottleneck

The current state of agentic AI is characterized by a stark dichotomy: prototypes are trivial to build, but reliable systems are excruciatingly difficult to scale. A simple script using LangChain or the OpenAI SDK often fails when subjected to real-world variability—agents get stuck in loops, hallucinate tool parameters, or fail to adhere to output schemas. The core conflict lies in the stochastic nature of the LLM; when an agent is responsible for both deciding what to do and executing the infrastructure code, the probability of failure compounds with every step in the chain.

The authors of this paper address this “brittleness” by formalizing a shift from “Prompt Engineering” to “Agent Engineering.” They argue that the solution to LLM drift is not just better prompting, but a rigorous architectural decomposition that treats the LLM as a reasoning engine rather than a master controller of infrastructure.