A Survey of Context Engineering for Large Language Models

Authors: Lingrui Mei, Jiayu Yao, Yuyao Ge, Yiwei Wang, Baolong Bi, Yujun Cai, Jiazhi Liu, Mingyu Li, Zhong-Zhi Li, Duzhen Zhang, Chenlin Zhou, Jiayi Mao, Tianze Xia, Jiafeng Guo, Shenghua Liu

Paper: https://arxiv.org/abs/2507.13334

Code: https://github.com/Meirtz/Awesome-Context-Engineering

TL;DR

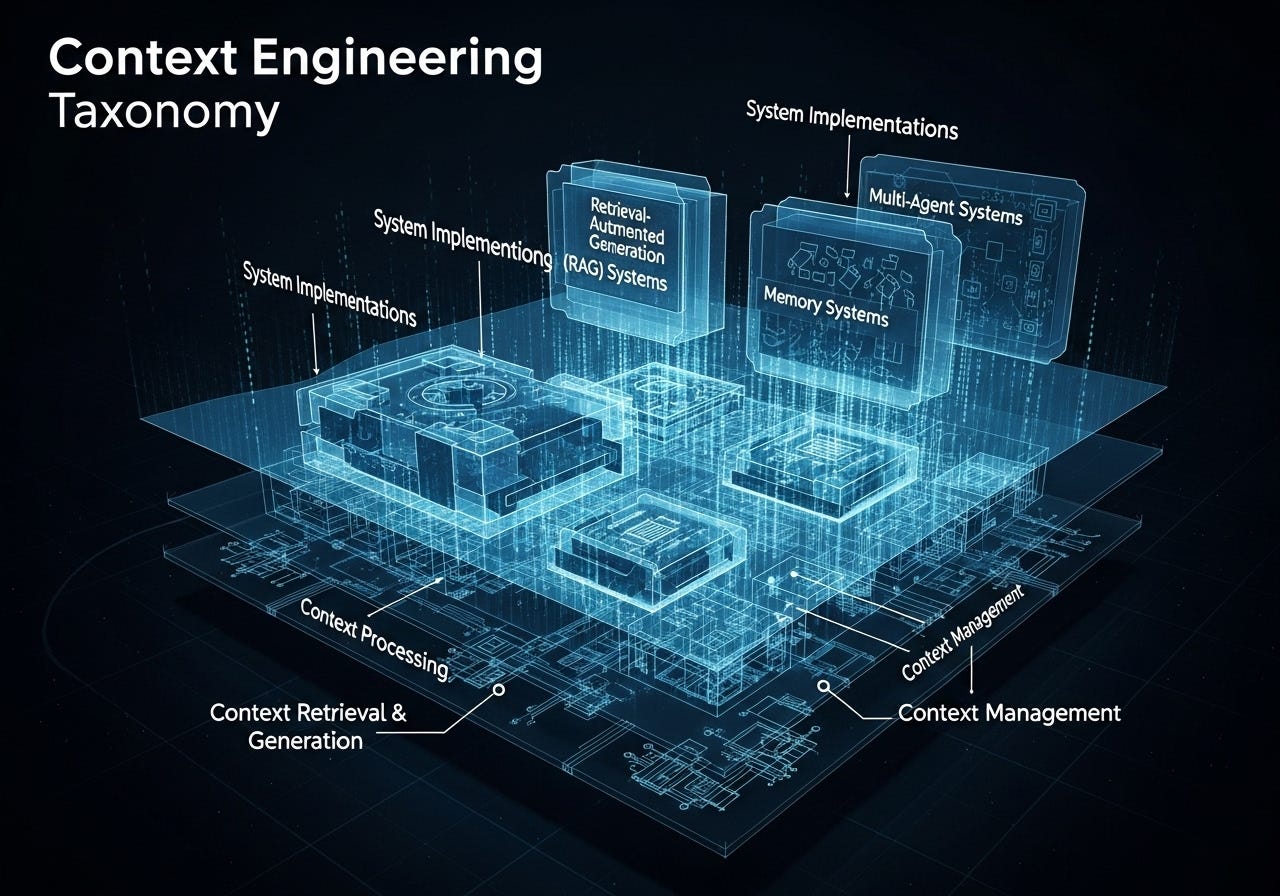

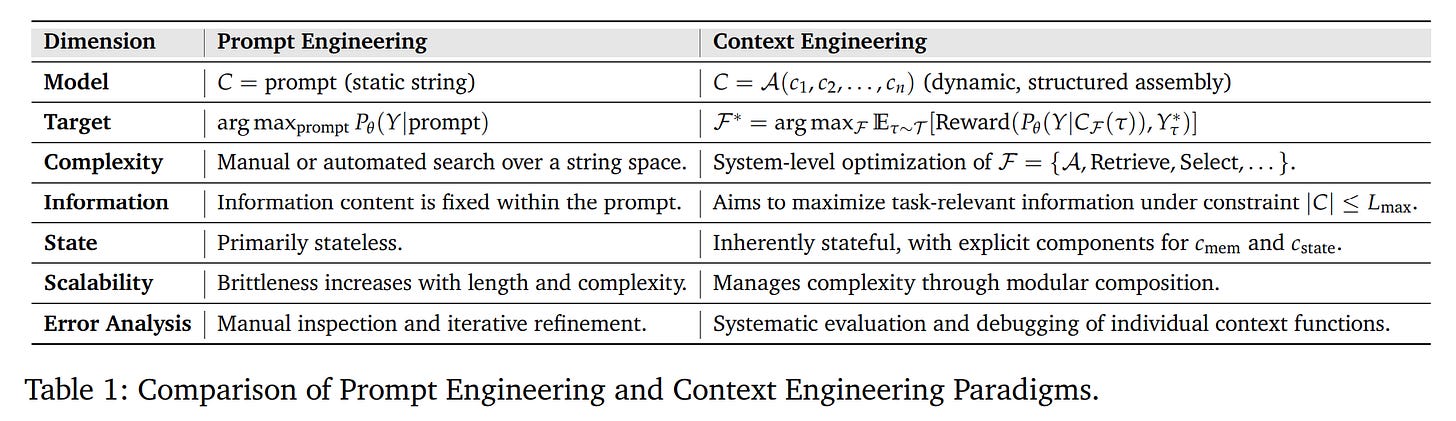

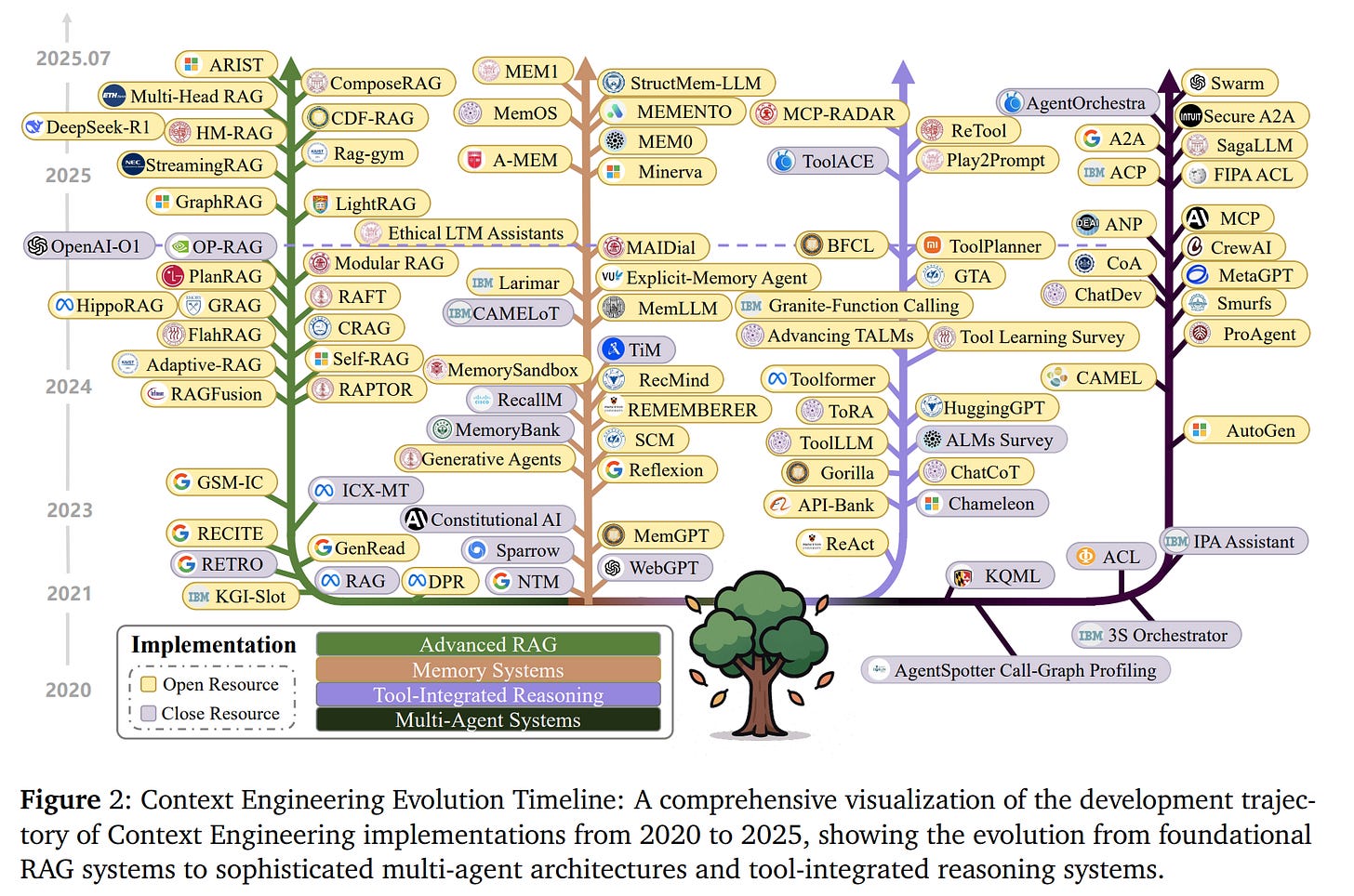

WHAT was done? This survey introduces and formalizes "Context Engineering," a discipline for the systematic optimization of information payloads for Large Language Models (LLMs). The authors present a novel, comprehensive taxonomy that deconstructs this field into two primary layers: foundational Components (Context Retrieval and Generation, Context Processing, Context Management) and sophisticated System Implementations (Retrieval-Augmented Generation, Memory Systems, Tool-Integrated Reasoning, Multi-Agent Systems). By analyzing over 1400 papers, the work formalizes context not as a static string but as a dynamic set of components, framing its assembly as an optimization problem grounded in information theory and Bayesian inference.

WHY it matters? This work provides a critical, unifying framework for a field that has been developing in fragmented silos, shifting the paradigm from the "art" of prompt design to the "science" of information logistics. It offers a clear roadmap for building more capable and reliable AI systems. Most significantly, the survey identifies a fundamental asymmetry in current models: despite remarkable proficiency in understanding complex contexts, LLMs show pronounced limitations in generating equally sophisticated, long-form outputs. This "comprehension-generation gap" pinpoints a major bottleneck in AI development and sets a defining priority for future research.

Details

From Prompt Design to a Formal Discipline

The rapid evolution of Large Language Models (LLMs) from simple instruction-followers to the reasoning engines of complex applications has necessitated a parallel evolution in how we interact with them. The initial "art" of prompt engineering, while foundational, is no longer sufficient to capture the full scope of designing, managing, and optimizing the information streams required by modern AI.

The survey formalizes the next step in this evolution: Context Engineering, a discipline for the systematic optimization of these information payloads.
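To make the idea of context as "a dynamic set of components" assembled under an optimization objective more concrete, here is a minimal sketch. It is not the survey's formalism or code: the `Component` class, the `priority` field, the whitespace-based `count_tokens`, and the greedy selection rule are all illustrative assumptions standing in for whatever scoring and tokenization a real system would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One piece of context (hypothetical structure, for illustration only)."""
    kind: str      # e.g. "instructions", "knowledge", "memory", "query"
    text: str
    priority: int  # higher means more important; an assumed scoring scheme


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def assemble_context(components: list[Component], max_tokens: int) -> str:
    """Greedily keep the highest-priority components that fit the budget,
    then emit the kept components in their original order."""
    chosen: set[int] = set()
    used = 0
    # Stable sort: ties in priority keep their original relative order.
    ranked = sorted(range(len(components)), key=lambda i: -components[i].priority)
    for i in ranked:
        cost = count_tokens(components[i].text)
        if used + cost <= max_tokens:
            chosen.add(i)
            used += cost
    return "\n\n".join(
        components[i].text for i in range(len(components)) if i in chosen
    )


if __name__ == "__main__":
    parts = [
        Component("instructions", "You are a helpful assistant.", 3),
        Component("knowledge", "Paris is the capital of France.", 1),
        Component("memory", "User prefers short answers.", 2),
        Component("query", "What is the capital of France?", 3),
    ]
    print(assemble_context(parts, max_tokens=15))
```

The design choice worth noting is that the context is built from typed parts under a length constraint rather than written as one fixed string. Greedy selection by priority is only one simple heuristic; a real system could replace it with a learned or retrieval-based scoring function.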
