AlphaEvolve

An Evolutionary Leap in Automated Scientific and Algorithmic Discovery

AlphaEvolve: A coding agent for scientific and algorithmic discovery

Authors: Alexander Novikov, Ngân Vũ, Marvin Eisenberger, Emilien Dupont, Po-Sen Huang, Adam Zsolt Wagner, Sergey Shirobokov, Borislav Kozlovskii, Francisco J. R. Ruiz, Abbas Mehrabian, M. Pawan Kumar, Abigail See, Swarat Chaudhuri, George Holland, Alex Davies, Sebastian Nowozin, Pushmeet Kohli and Matej Balog

Paper: link

Code: Discovered algorithms and mathematical results in a Colab notebook

TL;DR

WHAT was done? The researchers developed AlphaEvolve, an evolutionary coding agent that combines state-of-the-art large language models (LLMs) such as Gemini with an evolutionary computation framework. AlphaEvolve autonomously generates and iteratively refines complex algorithms by directly editing their code, up to entire files in a codebase, guided by automated evaluation functions.

WHY it matters? AlphaEvolve represents a significant advance in AI's capacity for autonomous discovery. Its results include the first improvement in 56 years over Strassen's algorithm for multiplying 4×4 complex-valued matrices. It has also delivered tangible, deployed optimizations for critical large-scale computing infrastructure at Google, including data center scheduling and the efficiency of LLM training kernels. This work marks a shift from LLMs as coding assistants to AI agents that can independently drive scientific progress and solve complex engineering problems, in effect learning how to discover better solutions.

AlphaEvolve: An Evolutionary Coding Agent for Scientific and Algorithmic Discovery

The quest to build AI systems that can make novel scientific discoveries or devise superior algorithms for complex problems has long been a central ambition in artificial intelligence. A recent paper introduces AlphaEvolve, a system that marks a substantial step forward in this pursuit. The work tackles the challenge of moving LLMs beyond generating plausible code toward producing genuinely new scientific or practical results and optimizing critical computational infrastructure.

AlphaEvolve's core innovation is an autonomous evolutionary coding agent that orchestrates LLMs to iteratively improve algorithms by making direct code changes. This approach has led to new state-of-the-art results in mathematics and computer science, and notable efficiency gains in real-world, large-scale systems.

Context and Differentiation: Beyond Existing Methods

AlphaEvolve builds upon prior work like FunSearch, which also used LLMs for discovery. However, AlphaEvolve significantly expands on these foundations.

While FunSearch typically evolved single Python functions, AlphaEvolve can evolve entire code files (potentially hundreds of lines) in any programming language. This allows it to tackle more complex, multi-component algorithms and to integrate with large existing codebases. AlphaEvolve also supports multi-objective optimization, uses more powerful LLMs (an ensemble of Gemini 2.0 Flash and Gemini 2.0 Pro) with richer contextual information in its prompts, and employs a scalable evaluation pipeline.

A key methodological innovation is its ability to evolve heuristic search algorithms themselves, not just direct solutions. Directly generating a complex mathematical construction is often an extremely sparse search problem, because good constructions are rare among all candidates. Instead, AlphaEvolve can learn how to search for such a construction: by evolving the search heuristic itself, it can discover adaptive, multi-stage strategies that navigate vast problem landscapes more effectively, leading to breakthroughs where direct generation might falter. The paper (page 12) notes that this automated discovery of adaptive search strategies is challenging to replicate manually.
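To make the difference between evolving a solution and evolving a search heuristic concrete, here is a minimal Python sketch on a toy packing problem: place n points in the unit square so that the minimum pairwise distance is as large as possible. The evolvable part is assumed to be the `improve` function, which receives the starting construction and an iteration budget; the fixed harness `run_search` scores whatever it returns. The names, the budget, and the hill-climbing strategy are illustrative assumptions, not AlphaEvolve's code.

```python
import itertools
import math
import random

Point = tuple[float, float]


def min_distance(points: list[Point]) -> float:
    """Score of a construction: the smallest pairwise distance (higher is better)."""
    return min(math.dist(p, q) for p, q in itertools.combinations(points, 2))


# --- evolvable part (hypothetical): a heuristic that searches for better constructions ---
def improve(points: list[Point], budget: int, rng: random.Random) -> list[Point]:
    """Simple hill climbing: jitter one point, keep the move if the score does not drop."""
    best = list(points)
    best_score = min_distance(best)
    step = 0.1
    for _ in range(budget):
        i = rng.randrange(len(best))
        x, y = best[i]
        candidate = list(best)
        candidate[i] = (
            min(1.0, max(0.0, x + rng.uniform(-step, step))),  # stay inside the square
            min(1.0, max(0.0, y + rng.uniform(-step, step))),
        )
        score = min_distance(candidate)
        if score >= best_score:
            best, best_score = candidate, score
        else:
            step = max(1e-3, step * 0.999)  # narrow the search radius after a rejected move
    return best


# --- fixed harness: what an evaluator might run around the evolved heuristic ---
def run_search(n: int, budget: int, seed: int = 0) -> tuple[list[Point], float]:
    rng = random.Random(seed)
    start = [(rng.random(), rng.random()) for _ in range(n)]
    result = improve(start, budget, rng)
    return result, min_distance(result)
```

In this framing, an LLM would rewrite the body of `improve` (for example, adding restarts or a multi-stage schedule), and the evaluator would keep whichever version yields the best final score under the same budget.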

How AlphaEvolve Works: The Methodology

AlphaEvolve employs an evolutionary loop, depicted at a high level in Figure 1 and detailed further in Figure 2 of the paper.

The process begins with a user defining a task by providing an initial program (with specific code blocks marked for evolution) and an evaluation function h. This function automatically assesses generated solutions and returns scalar metrics that AlphaEvolve aims to maximize.
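A minimal sketch of what such a task specification might look like. We assume comment markers of the form `# EVOLVE-BLOCK-START` / `# EVOLVE-BLOCK-END` to delimit the evolvable region (treat the exact spelling as an assumption); the toy task (online bin packing), the instances, and the names `choose_bin`, `pack`, and `evaluate` are all hypothetical. The evaluation function h runs the program and returns a dictionary of scalar metrics to maximize.

```python
# Hypothetical initial program supplied by the user. Only the code between the
# markers would be rewritten by the evolutionary loop; the rest stays fixed.
INITIAL_PROGRAM = '''
# EVOLVE-BLOCK-START
def choose_bin(item, free_space):
    """Return the index of the bin to put `item` in, or None to open a new bin."""
    for i, space in enumerate(free_space):
        if item <= space:
            return i  # first fit
    return None
# EVOLVE-BLOCK-END


def pack(items, capacity):
    """Fixed harness: place items one by one and return the number of bins used."""
    free_space = []
    for item in items:
        i = choose_bin(item, free_space)
        if i is None or item > free_space[i]:  # invalid choices open a new bin
            free_space.append(capacity - item)
        else:
            free_space[i] -= item
    return len(free_space)
'''


def evaluate(program_source: str) -> dict[str, float]:
    """Evaluation function h: run a candidate program, return scalar metrics to maximize."""
    namespace: dict = {}
    exec(program_source, namespace)  # a real evaluator would sandbox untrusted code
    instances = [  # hypothetical benchmark: (item sizes, bin capacity)
        ([4, 8, 1, 4, 2, 1], 10),
        ([5, 5, 5, 5, 3, 3, 3, 3, 2, 2], 10),
    ]
    bins_used = [namespace["pack"](items, cap) for items, cap in instances]
    # Fewer bins is better, so report the negated mean to get a quantity to maximize.
    return {"neg_mean_bins": -sum(bins_used) / len(bins_used)}
```

Keeping `pack` outside the marked block means every evolved variant is scored by the same harness, so scores stay comparable across generations.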

The core loop proceeds as follows:

Prompt Sampling: AlphaEvolve draws from a Program database containing previously discovered solutions and their scores. These, along with system instructions and contextual information, form a rich prompt.

Creative Generation: An ensemble of LLMs processes these prompts to generate code modifications, often as "diffs" (similar to changes tracked in version control systems) specifying targeted modifications to parent programs. This format allows precise, incremental improvements to existing, potentially large codebases rather than requiring complete rewrites, facilitating integration and focused evolution.

Program Creation & Evaluation: These diffs are applied to create new child programs, which are then scored by Evaluators using the user-provided h. This step can use an "evaluation cascade", in which candidates are first tested on smaller or easier cases and only promising ones advance to more expensive stages, so weak programs are pruned early. It can also incorporate LLM-generated feedback on qualitative aspects.

Database Update: Promising new solutions are registered back into the Program database, which is inspired by algorithms such as MAP-Elites and balances exploration (keeping a diverse population) against exploitation (building on the best programs found so far).
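The diff-based Creative Generation step can be illustrated with a small helper that applies edits written as SEARCH/REPLACE blocks. We assume a block format with a `<<<<<<< SEARCH` section, a `=======` separator, and a `>>>>>>> REPLACE` terminator; the regex, the error handling, and the name `apply_diff` are a hypothetical sketch, not AlphaEvolve's implementation.

```python
import re

# One edit: the exact text to find, then the text that replaces it.
DIFF_BLOCK = re.compile(
    r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE",
    re.DOTALL,
)


def apply_diff(parent: str, diff: str) -> str:
    """Apply every SEARCH/REPLACE block in `diff` to `parent`, in order.

    Raises ValueError if there are no blocks or a SEARCH section does not occur
    verbatim in the program, so malformed LLM output is rejected before evaluation.
    """
    blocks = DIFF_BLOCK.findall(diff)
    if not blocks:
        raise ValueError("no SEARCH/REPLACE blocks found")
    child = parent
    for search, replace in blocks:
        if search not in child:
            raise ValueError(f"search text not found: {search!r}")
        child = child.replace(search, replace, 1)  # replace only the first occurrence
    return child
```

For example, applying a block whose SEARCH section is `    return x * 2` and whose REPLACE section is `    return x * 3 + 1` to a parent `def score(x):` function yields a child that differs only in that line, which is what makes large programs cheap to edit incrementally.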

This entire pipeline is an asynchronous, distributed system optimized for throughput, so that many ideas can be generated and tested in parallel. The rationale behind the evolutionary approach is to enable persistent improvement beyond what single-shot LLM generation can achieve: every candidate is grounded in measured performance, which filters out hallucinated or incorrect code, and sustained exploration of the solution space helps the search escape local optima.
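The whole loop can be sketched end to end under heavy simplifying assumptions. To stay self-contained and runnable, the "program" is a tuple of coefficients, the LLM is replaced by a random `mutate` function that mixes in an inspiration program and perturbs one coefficient, the evaluation is a two-stage cascade (`cheap_check`, then `full_score`), and the program database is a simplified MAP-Elites-style grid that keeps the best program per behavior cell. All of these names, the target, and the descriptor are hypothetical stand-ins, and the sketch is synchronous, whereas the real pipeline distributes evaluations asynchronously.

```python
import random

Program = tuple[float, ...]
TARGET = (3.0, -1.0, 2.0, 0.5)  # hypothetical optimum for the toy objective


def cheap_check(program: Program) -> bool:
    """Cascade stage 1: a fast filter that prunes clearly invalid candidates."""
    return all(abs(c) <= 10.0 for c in program)


def full_score(program: Program) -> float:
    """Cascade stage 2: the 'expensive' evaluation h (higher is better)."""
    return -sum((c - t) ** 2 for c, t in zip(program, TARGET))


def descriptor(program: Program) -> tuple[int, int]:
    """Behavior cell for the MAP-Elites-style grid (a coarse sign pattern here)."""
    return (int(program[0] > 0), int(program[1] > 0))


def mutate(parent: Program, inspiration: Program, rng: random.Random) -> Program:
    """Stand-in for the LLM: borrow some coefficients, then perturb one of them."""
    child = [p if rng.random() < 0.8 else q for p, q in zip(parent, inspiration)]
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 1.0)
    return tuple(child)


def evolve(steps: int, seed: int = 0) -> tuple[Program, float]:
    rng = random.Random(seed)
    initial: Program = (0.0, 0.0, 0.0, 0.0)
    # Program database: behavior cell -> (best score, program) found in that cell.
    database = {descriptor(initial): (full_score(initial), initial)}
    for _ in range(steps):
        elites = list(database.values())
        _, parent = rng.choice(elites)  # sampling across cells keeps diversity
        _, inspiration = max(elites)  # the global best is reused as context
        child = mutate(parent, inspiration, rng)
        if not cheap_check(child):
            continue  # pruned early: the expensive stage never runs
        score = full_score(child)
        cell = descriptor(child)
        if cell not in database or score > database[cell][0]:
            database[cell] = (score, child)
    best_score, best_program = max(database.values())
    return best_program, best_score
```

Choosing the parent uniformly across cells (exploration) while always showing the global best as context (exploitation) is one simple way to realize the balance the Database Update step describes.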

Crucially, AlphaEvolve uses LLMs not as simple random mutators, but as intelligent operators that leverage their vast world knowledge and reasoning capabilities to propose contextually relevant and potentially transformative code modifications, acting as sophisticated, learned "mutation and crossover" mechanisms. Using LLMs to propose code changes offers flexibility over predefined mutation operators, while rich contextual prompts and an ensemble of LLMs enhance the quality and diversity of suggestions.
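A hedged sketch of how a rich prompt and a two-model ensemble might be wired together. As we understand the paper, the faster model (Gemini 2.0 Flash) supplies most candidates for throughput while the stronger one (Gemini 2.0 Pro) contributes occasional higher-quality suggestions; the 80/20 split, the model labels, the `Candidate` type, and the prompt layout below are our own assumptions for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    code: str
    scores: dict[str, float]


def build_prompt(instructions: str, parent: Candidate, inspirations: list[Candidate]) -> str:
    """Assemble context: task instructions, prior programs with their scores, then the parent."""
    parts = [instructions, "", "Previously evaluated programs:"]
    for k, prog in enumerate(inspirations, start=1):
        metrics = ", ".join(f"{name}={value:.3f}" for name, value in sorted(prog.scores.items()))
        parts += [f"# Program {k} ({metrics})", prog.code]
    parts += [
        "",
        "Current program to improve:",
        parent.code,
        "Propose changes as SEARCH/REPLACE blocks.",  # assumed diff format
    ]
    return "\n".join(parts)


def pick_model(rng: random.Random, p_strong: float = 0.2) -> str:
    """Ensemble routing: mostly the fast model, occasionally the stronger one (hypothetical labels)."""
    return "strong-model" if rng.random() < p_strong else "fast-model"
```

Showing scored prior programs is what lets the model act like a learned crossover operator: it can recombine ideas from several ancestors rather than perturbing one program blindly.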

Experimental Validation: Groundbreaking Results Across Domains

AlphaEvolve's capabilities were tested across three challenging domains: discovering faster matrix multiplication algorithms, tackling open mathematical problems, and optimizing components of Google's computing ecosystem.

1. Faster Matrix Multiplication: AlphaEvolve was tasked with finding low-rank decompositions of the 3D tensors that represent matrix products; the rank of such a decomposition equals the number of scalar multiplications the resulting algorithm needs. Most strikingly, it discovered a search algorithm that found a procedure to multiply two 4×4 complex-valued matrices using 48 scalar multiplications. This marks the first improvement in an astonishing 56 years over Strassen's renowned algorithm (which, applied recursively, uses 7 × 7 = 49 multiplications) in this complex-valued setting, a testament to AlphaEvolve's discovery power.

Editor comment: AlphaTensor had already reached 47 multiplications for 4×4 matrices, but only in arithmetic modulo 2; the AlphaEvolve result holds for complex-valued matrices.

The evolved algorithm involved novel modifications to the loss function and optimizer, as illustrated in Figure 4 of the paper (too large to reproduce here). Across 54 matrix multiplication targets, AlphaEvolve matched or surpassed the best-known ranks in all but two cases.
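To make "low-rank decomposition" concrete: a rank-R decomposition is a list of R factor triples (u, v, w) whose summed outer products reproduce the matrix multiplication tensor, and each triple corresponds to one scalar multiplication. The sketch below checks Strassen's classic rank-7 decomposition of 2×2 multiplication (applied recursively to 4×4 matrices, it gives the 49 multiplications mentioned above) and adds a loss of the kind a gradient-based search might minimize. The `loss` function, its integer-attracting penalty, and the weight `lam` are hypothetical choices of ours, not the evolved loss from the paper; the optimizer is omitted, and the 48-multiplication 4×4 decomposition itself (available in the authors' Colab) is not reproduced here.

```python
import itertools
import math

# A factor triple (u, v, w): u weights entries of A, v weights entries of B,
# w says which entries of C receive the product. Entries are ordered 11, 12, 21, 22.
Triple = tuple[tuple[float, ...], tuple[float, ...], tuple[float, ...]]


def matmul_tensor(n: int) -> dict[tuple[int, int, int], int]:
    """Nonzero entries of the n x n matrix multiplication tensor (row-major indices).

    T[a, b, c] = 1 exactly when A[p, q] * B[q, s] contributes to C[p, s].
    """
    return {
        (p * n + q, q * n + s, p * n + s): 1
        for p, q, s in itertools.product(range(n), repeat=3)
    }


def reconstruction_error(factors: list[Triple], n: int) -> float:
    """Squared error between sum_r u_r (x) v_r (x) w_r and the target tensor.

    Zero error means an exact algorithm using len(factors) scalar multiplications.
    """
    target = matmul_tensor(n)
    error = 0.0
    for a, b, c in itertools.product(range(n * n), repeat=3):
        approx = sum(u[a] * v[b] * w[c] for u, v, w in factors)
        error += (approx - target.get((a, b, c), 0)) ** 2
    return error


def loss(factors: list[Triple], n: int, lam: float = 0.1) -> float:
    """Hypothetical training objective: reconstruction error plus a penalty that
    vanishes at integer entries, nudging a gradient search toward exact solutions."""
    penalty = sum(
        math.sin(math.pi * x) ** 2 for triple in factors for vec in triple for x in vec
    )
    return reconstruction_error(factors, n) + lam * penalty


# Strassen's rank-7 decomposition of 2 x 2 matrix multiplication.
STRASSEN: list[Triple] = [
    ((1, 0, 0, 1), (1, 0, 0, 1), (1, 0, 0, 1)),    # M1 = (A11 + A22)(B11 + B22)
    ((0, 0, 1, 1), (1, 0, 0, 0), (0, 0, 1, -1)),   # M2 = (A21 + A22) B11
    ((1, 0, 0, 0), (0, 1, 0, -1), (0, 1, 0, 1)),   # M3 = A11 (B12 - B22)
    ((0, 0, 0, 1), (-1, 0, 1, 0), (1, 0, 1, 0)),   # M4 = A22 (B21 - B11)
    ((1, 1, 0, 0), (0, 0, 0, 1), (-1, 1, 0, 0)),   # M5 = (A11 + A12) B22
    ((-1, 0, 1, 0), (1, 1, 0, 0), (0, 0, 0, 1)),   # M6 = (A21 - A11)(B11 + B12)
    ((0, 1, 0, -1), (0, 0, 1, 1), (1, 0, 0, 0)),   # M7 = (A12 - A22)(B21 + B22)
]
```

The schoolbook algorithm corresponds to the rank-8 decomposition with one unit-vector triple per product A[p, q] * B[q, s]; Strassen's triples trade that eighth multiplication for extra additions.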

2. Open Mathematical Problems: When applied to over 50 open mathematical problems, AlphaEvolve matched the best-known constructions in approximately 75% of cases and surpassed the state-of-the-art in about 20%.

Notable improvements include:

A new upper bound for Erdős's Minimum Overlap Problem.

An improved lower bound for the Kissing Number in 11 dimensions (from 592 to 593).

Improved bounds for autocorrelation inequalities and various packing problems.

These results were often achieved by evolving heuristic search algorithms that then found the improved constructions.
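Results like the kissing-number bound are attractive targets precisely because they can be checked mechanically by a machine-gradeable h. A minimal sketch using one standard sufficient condition: if a finite set C of nonzero points satisfies min ‖x − y‖ ≥ max ‖x‖ over distinct x, y in C, then every pair subtends an angle of at least 60° at the origin, so unit spheres centred at 2x/‖x‖ all touch a central unit sphere without overlapping. The example uses the 12 points of the cuboctahedron in 3D (the kissing number in 3D is 12); the function names are our own, and the 593-point configuration in 11 dimensions is not reproduced here.

```python
import itertools
import math

Vector = tuple[float, ...]


def is_kissing_configuration(points: list[Vector], tol: float = 1e-9) -> bool:
    """Sufficient condition: 0 is not in C and min pairwise distance >= max norm.

    Then every pair x, y makes an angle >= 60 degrees at the origin, so unit spheres
    centred at 2 * x / |x| touch the central unit sphere without overlapping.
    """
    norms = [math.hypot(*p) for p in points]
    if min(norms) <= tol:
        return False
    min_dist = min(math.dist(p, q) for p, q in itertools.combinations(points, 2))
    return min_dist >= max(norms) - tol


def cuboctahedron() -> list[Vector]:
    """The 12 points with two coordinates equal to +-1 and one coordinate equal to 0."""
    points = []
    for zero in range(3):
        for s1, s2 in itertools.product((1.0, -1.0), repeat=2):
            p = [s1, s2]
            p.insert(zero, 0.0)
            points.append(tuple(p))
    return points
```

The check is cheap and exact up to the tolerance, which is why an evolved search program can propose candidate configurations freely while the evaluator only has to certify the final answer.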

3. Optimizing Google's Computing Ecosystem: AlphaEvolve demonstrated significant real-world impact:

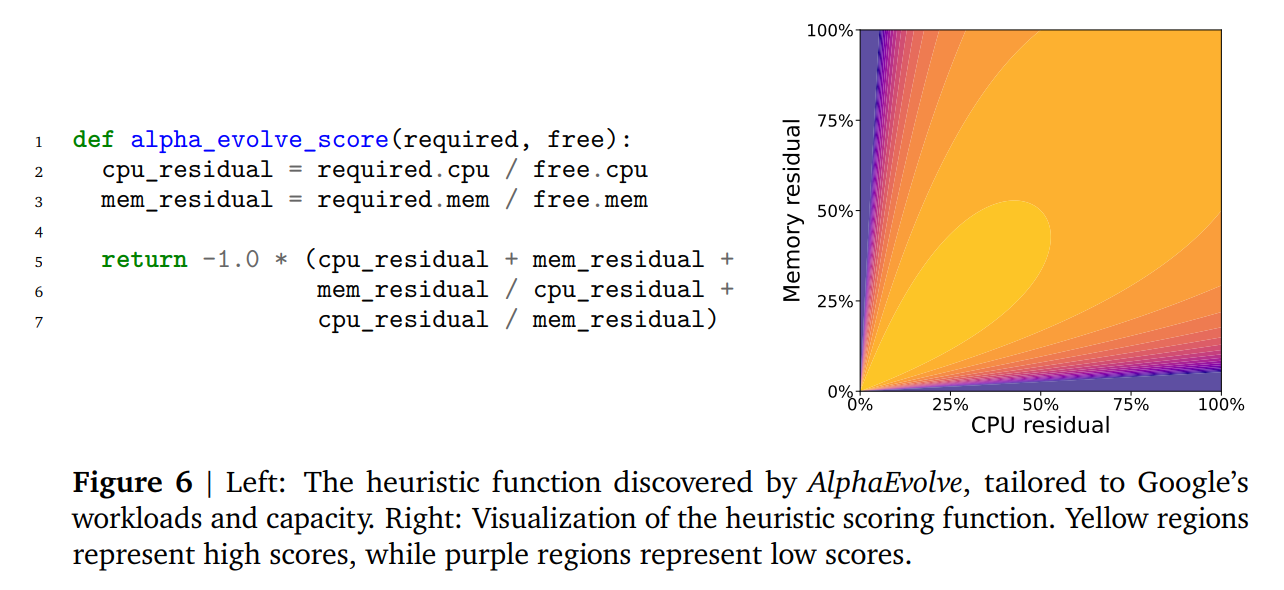

Data Center Scheduling: It developed a more efficient scheduling heuristic (Figure 6) for Google's Borg system, recovering on average 0.7% of fleet-wide compute resources. This solution was deployed due to its performance and advantages in interpretability and debuggability.
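A hedged sketch of where such a heuristic plugs into a scheduler: the scheduler filters machines that can fit a job and places it on the highest-scoring one, and the evolved artifact is the scoring function. The `score` rule below (penalize lopsided leftover CPU and memory, which would otherwise be stranded, then prefer tighter fits) is a hypothetical placeholder, not the deployed Borg heuristic shown in the paper's Figure 6; resources are assumed to be in normalized units.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Machine:
    name: str
    free_cpu: float  # normalized units (hypothetical)
    free_mem: float


# Evolvable part (hypothetical): higher score = better placement.
def score(job_cpu: float, job_mem: float, m: Machine) -> float:
    cpu_left = m.free_cpu - job_cpu
    mem_left = m.free_mem - job_mem
    # Penalize unbalanced leftovers (stranded resources), then prefer tighter fits.
    return -abs(cpu_left - mem_left) - 0.1 * (cpu_left + mem_left)


def place(job_cpu: float, job_mem: float, machines: list[Machine]) -> Optional[Machine]:
    """Fixed scheduler logic: filter feasible machines, pick the best-scoring one."""
    feasible = [m for m in machines if m.free_cpu >= job_cpu and m.free_mem >= job_mem]
    if not feasible:
        return None
    best = max(feasible, key=lambda m: score(job_cpu, job_mem, m))
    best.free_cpu -= job_cpu  # reserve the resources on the chosen machine
    best.free_mem -= job_mem
    return best
```

Because the evolved artifact is a short, readable function rather than an opaque model, engineers can inspect and debug it directly, which matches the interpretability argument made for the deployed solution.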

Gemini Kernel Engineering: It optimized tiling heuristics for matrix multiplication kernels used in training Gemini models, leading to an average 23% kernel speedup and a 1% reduction in Gemini's overall training time. These optimizations, which drastically reduced human effort from months to days, have also been deployed.
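Similarly, a tiling heuristic can be viewed as a function from a matrix multiplication shape (m, n, k) to a tile configuration, which an evaluator then benchmarks. In the sketch below, the candidate tile sizes, the on-chip memory budget, and the cost model (padded work, with ties broken toward larger tiles) are hypothetical stand-ins; in AlphaEvolve's setting the score would come from measured kernel runtimes, not from a formula.

```python
import itertools
import math

TILE_CHOICES = (64, 128, 256, 512)  # hypothetical candidate tile edge lengths


def padded_work(m: int, n: int, k: int, tm: int, tn: int, tk: int) -> int:
    """Multiply-adds performed when each dimension is rounded up to a multiple of its tile."""
    return (math.ceil(m / tm) * tm) * (math.ceil(n / tn) * tn) * (math.ceil(k / tk) * tk)


def choose_tiles(m: int, n: int, k: int, budget_elems: int = 2**17) -> tuple[int, int, int]:
    """Pick (tm, tn, tk) that fits the memory budget and minimizes padded work,
    breaking ties toward larger tiles (fewer grid steps)."""
    best = None
    for tm, tn, tk in itertools.product(TILE_CHOICES, repeat=3):
        # Operand tiles A[tm, tk] and B[tk, tn] plus the accumulator C[tm, tn] must fit.
        footprint = tm * tk + tk * tn + tm * tn
        if footprint > budget_elems:
            continue
        key = (padded_work(m, n, k, tm, tn, tk), -(tm * tn * tk))
        if best is None or key < best[0]:
            best = (key, (tm, tn, tk))
    if best is None:
        raise ValueError("no tile configuration fits the memory budget")
    return best[1]
```

A hand-written rule like this tends to be tuned for a few common shapes; evolving it against benchmarks over many real shapes is what lets the search find rules that generalize.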

Hardware Circuit Design: AlphaEvolve found a functionally equivalent simplification in a TPU arithmetic circuit's Verilog code, removing unnecessary bits.

Compiler-Generated Code: It directly optimized XLA-generated intermediate representations for the FlashAttention kernel, speeding it up by 32%.

Ablation studies (Figure 8) confirmed that each component of AlphaEvolve—the evolutionary approach, rich context in prompts, meta-prompts, full-file evolution, and powerful LLMs—contributes significantly to its performance.

Although the reported results are generally point estimates, these ablations give some insight into run-to-run variability.

Broader Implications and Future Trajectories

The findings from AlphaEvolve have profound implications. This work elevates AI's role from a mere assistant to an active participant, and even a leader, in fundamental scientific discovery and complex engineering optimization. The ability of an AI agent to autonomously improve upon human-designed algorithms that have stood for decades, or to optimize highly complex, real-world systems, signifies a new era. The success in optimizing its own underlying LLM training kernels points towards a fascinating positive feedback loop, where AI can accelerate its own development.

The authors suggest future work focused on distilling AlphaEvolve's augmented performance back into base LLMs, expanding its application by creating more robust evaluation functions for diverse problems, and integrating LLM-provided qualitative evaluation more deeply, especially for domains where programmatic evaluation is challenging.

Limitations and Considerations

The primary limitation acknowledged by the authors is AlphaEvolve's reliance on problems where solutions can be automatically and objectively evaluated via a machine-gradeable function h. This currently excludes tasks requiring extensive manual experimentation or subjective human judgment. While individual evaluations can be parallelized, their wall-clock duration can still be a bottleneck if not sufficiently distributed. Scalability to extremely large problem sizes also presented some memory challenges (e.g., for matrix multiplication beyond (5,5,5)).

Beyond these, the reliance on powerful, proprietary LLMs and significant computational resources (e.g., evaluations taking ~100 compute-hours) means that reproducing or extending such research is currently challenging for those outside large, well-resourced labs. As AI-generated algorithms become increasingly complex, ensuring their verifiability, trustworthiness, and interpretability will remain a critical ongoing challenge, although the paper does highlight efforts in this direction, such as choosing interpretable solutions for data center scheduling and using robust verification for hardware design.

Conclusion: A Significant Leap Forward

AlphaEvolve is a landmark paper that convincingly demonstrates a new level of capability in AI-driven discovery and optimization. By effectively combining the pattern recognition and code generation strengths of LLMs with the iterative refinement power of evolutionary algorithms and the grounding of automated evaluation, the authors have created a system that not only solves hard problems but also uncovers genuinely novel solutions. The breakthroughs in fundamental mathematics and the tangible impact on large-scale industrial systems are particularly compelling.

This research offers a significant and valuable contribution to the field, pushing the frontiers of what AI can achieve in scientific and algorithmic endeavors. It provides a strong foundation and a clear call for further exploration into evolutionary AI agents as powerful collaborators in human discovery and innovation.