An Information Theoretic Perspective on Agentic System Design

Authors: Shizhe He, Avanika Narayan, Ishan S. Khare, Scott W. Linderman, Christopher Ré, Dan Biderman

Paper: https://arxiv.org/abs/2512.21720

TL;DR

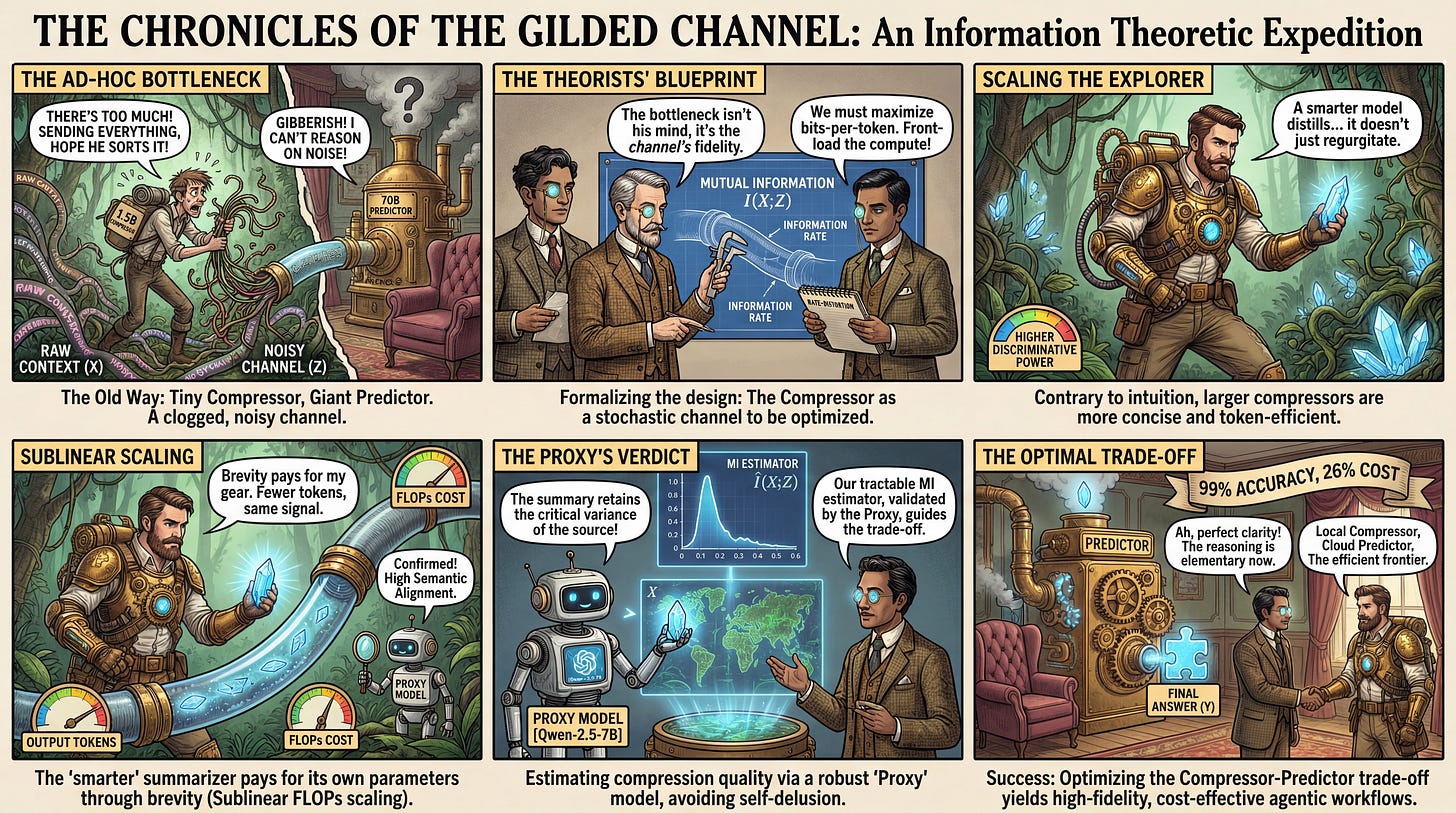

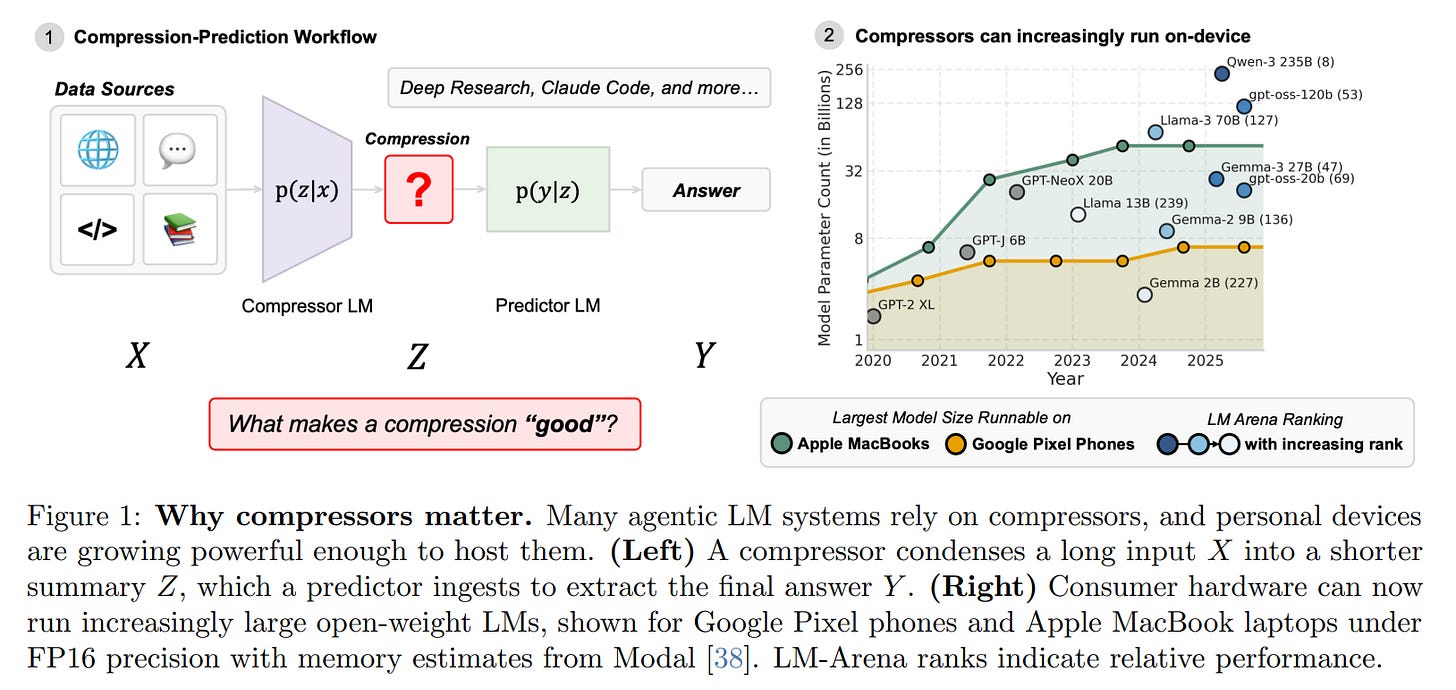

WHAT was done? The authors formalize the design of multi-step agentic systems (e.g., “Deep Research”) as an information-theoretic problem, modeling the summarization step as a noisy channel. They introduce a tractable estimator for Mutual Information (MI) to measure how well a “compressor” model retains context for a “predictor” model.

WHY it matters? This framework challenges the common heuristic of using the largest possible model for the final reasoning step. The study reveals that “front-loading” compute into the compressor is far more efficient; a 7B parameter compressor paired with a smaller predictor often outperforms massive end-to-end models. Practically, this allows local, edge-device models (3B) to act as compressors, recovering 99% of frontier-model accuracy at 26% of the API cost.

Details

The Compressor-Predictor Architecture Gap

Modern agentic workflows, such as OpenAI’s “Deep Research” or Anthropic’s “Claude Code,” rely on a bifurcated architecture to handle context limits and cost. These systems typically employ a Compressor-Predictor pattern: a “compressor” model (often running in parallel) ingests raw data (search results, logs) and distills it into a summary Z, which is then consumed by a “predictor” model to generate the final answer Y. Historically, the selection of these models has been ad-hoc, relying on expensive pairwise parameter sweeps or intuition.

The core bottleneck in this design is the “attribution problem.” When an agent fails, is it because the compressor dropped critical information (noisy channel), or because the predictor failed to reason over it (processing error)? Without a task-agnostic metric for compression quality, engineers are forced to treat the pipeline as a black box. This paper moves beyond empirical trial-and-error by applying Information Bottleneck principles, providing a theoretical substrate to optimize the trade-off between token efficiency (rate) and downstream accuracy (distortion).