Authors: Sandeep S. Cranganore, Andrei Bodnar, Arturs Berzins, Johannes Brandstetter

Paper: https://arxiv.org/abs/2507.11589

Code: https://github.com/AndreiB137/EinFields

TL;DR

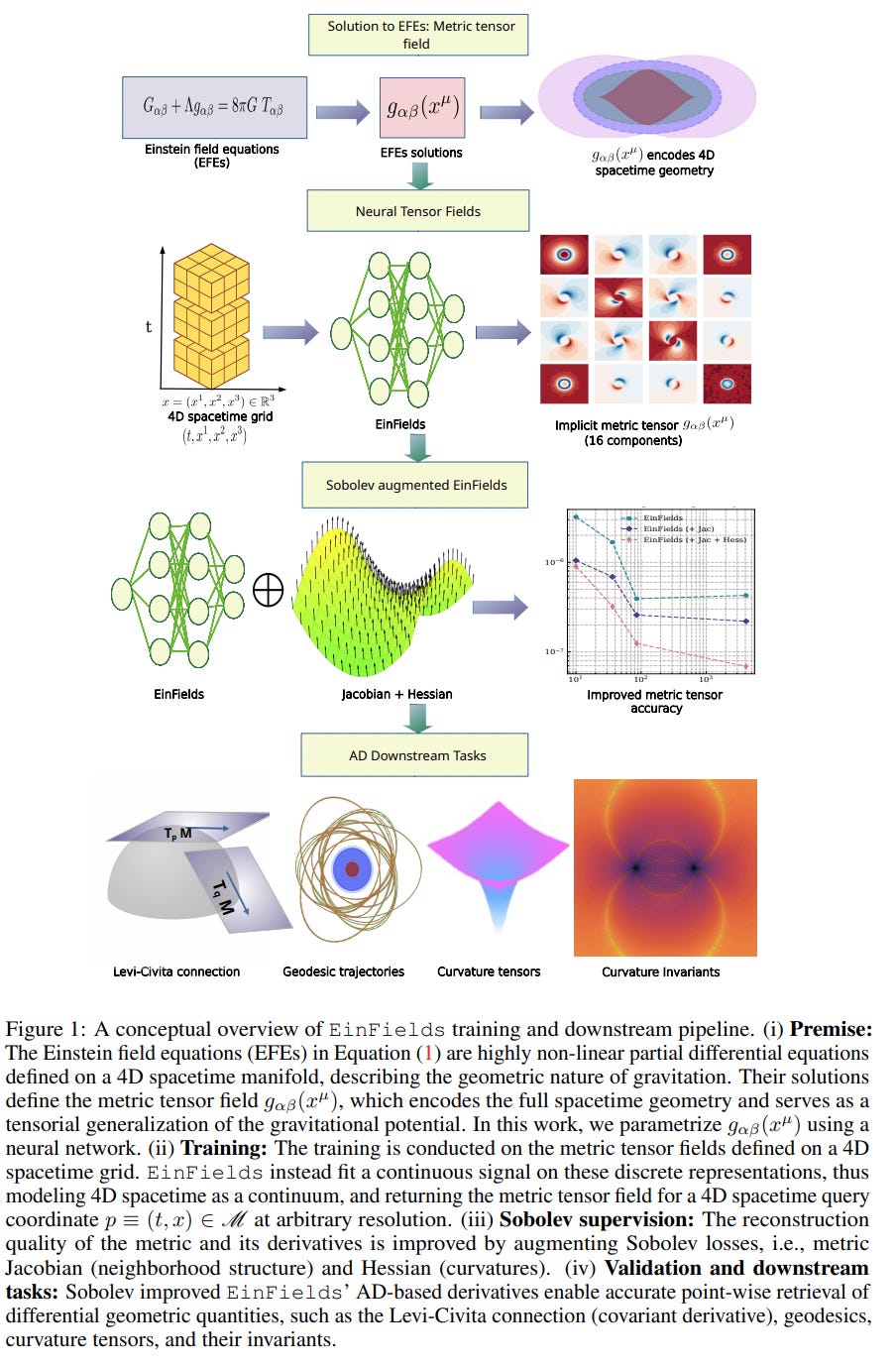

WHAT was done? This paper introduces Einstein Fields (EinFields), a novel framework that uses implicit neural networks to compress computationally intensive 4D numerical relativity simulations into compact neural network weights. Instead of relying on traditional, discrete grid-based methods, EinFields models the metric tensor—the core field of general relativity—as a continuous function of spacetime coordinates. By learning this fundamental geometric representation from analytical or numerical solutions, all other physical quantities, such as curvature tensors and particle trajectories (geodesics), are derived post-hoc via automatic differentiation (AD).

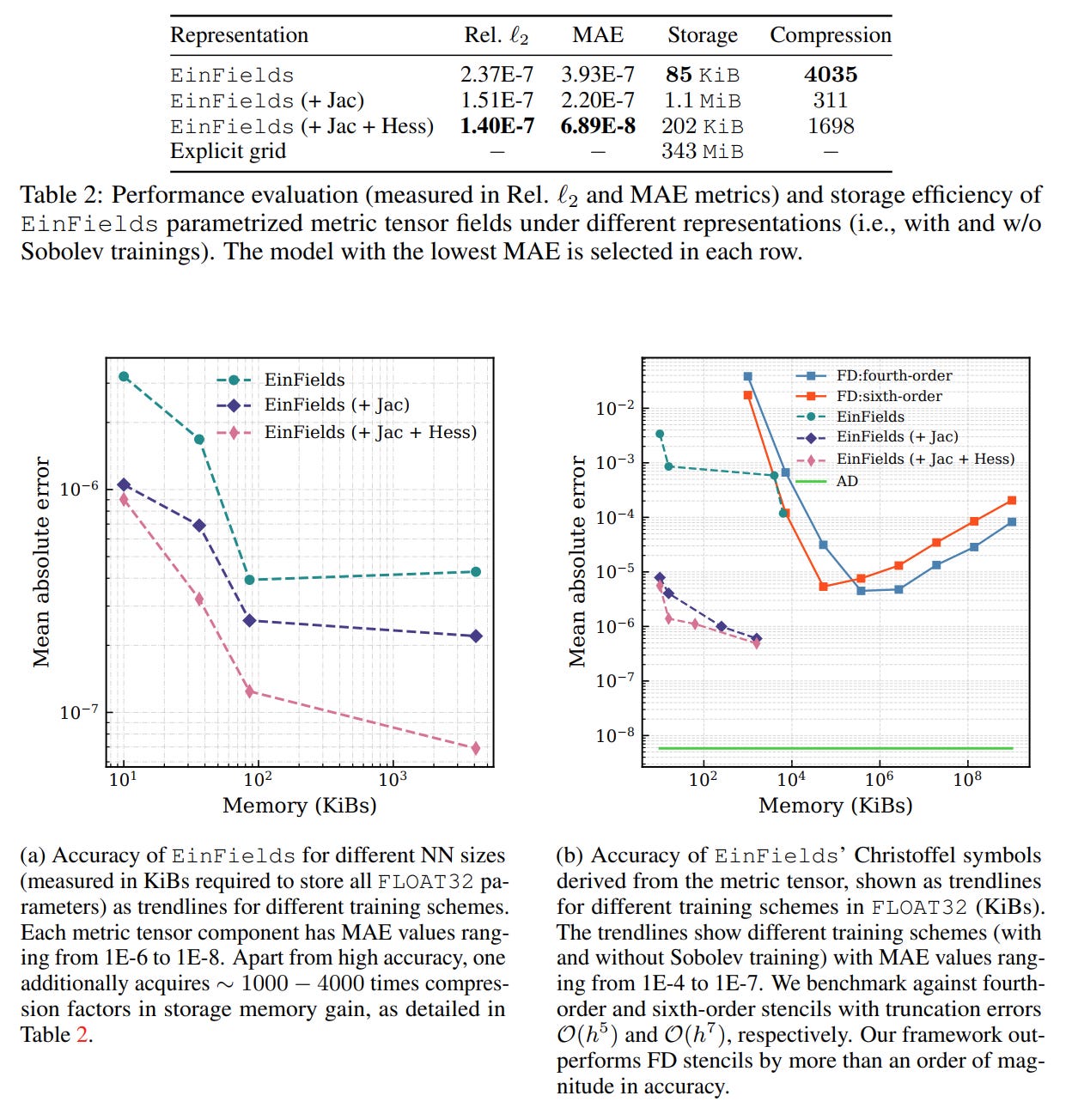

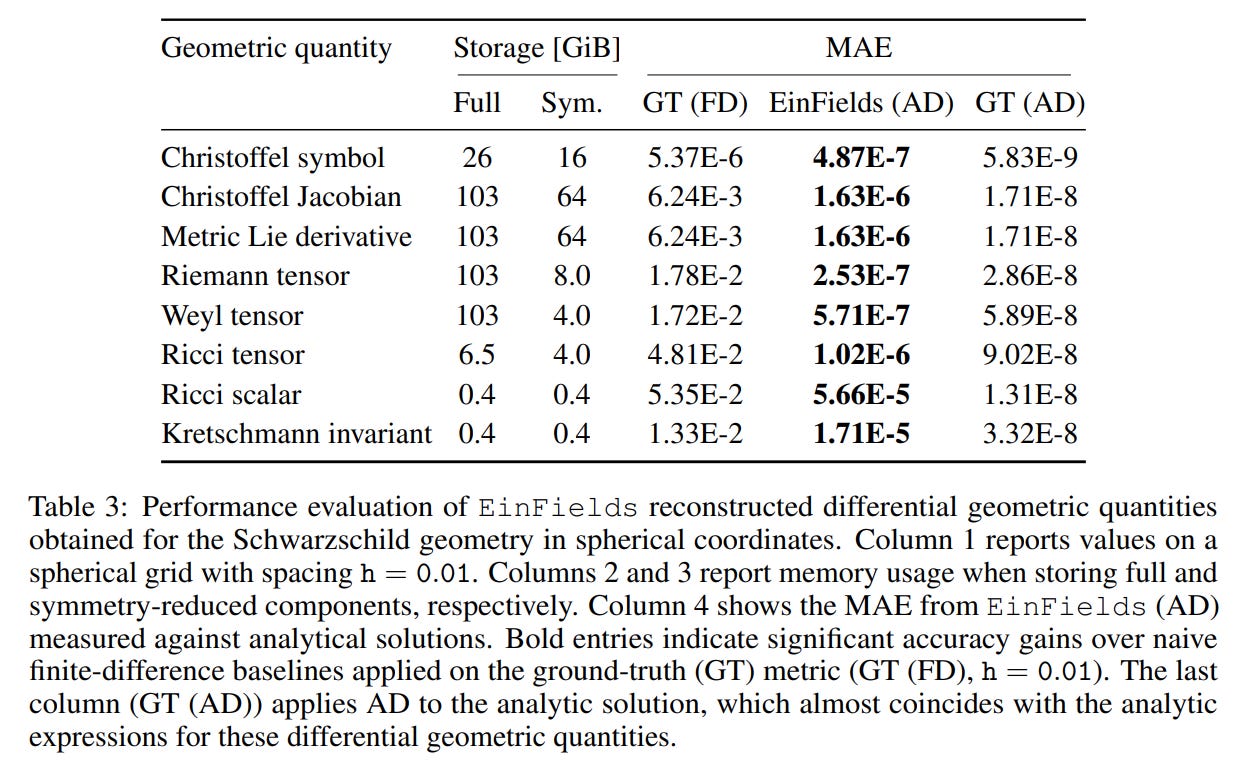

WHY it matters? Numerical relativity carries immense computational and storage costs, and EinFields address both. They achieve compression factors of up to 4000x while maintaining high precision (Table 2), and derivatives obtained through AD are orders of magnitude more accurate than traditional finite-difference methods (Table 3). The result is a more efficient, flexible, and accurate way to store, analyze, and derive physical insights from complex spacetime simulations, potentially paving the way for a new class of hybrid AI and physics models in astrophysics and fundamental science.

Details

From Petascale Grids to Compact Neural Weights

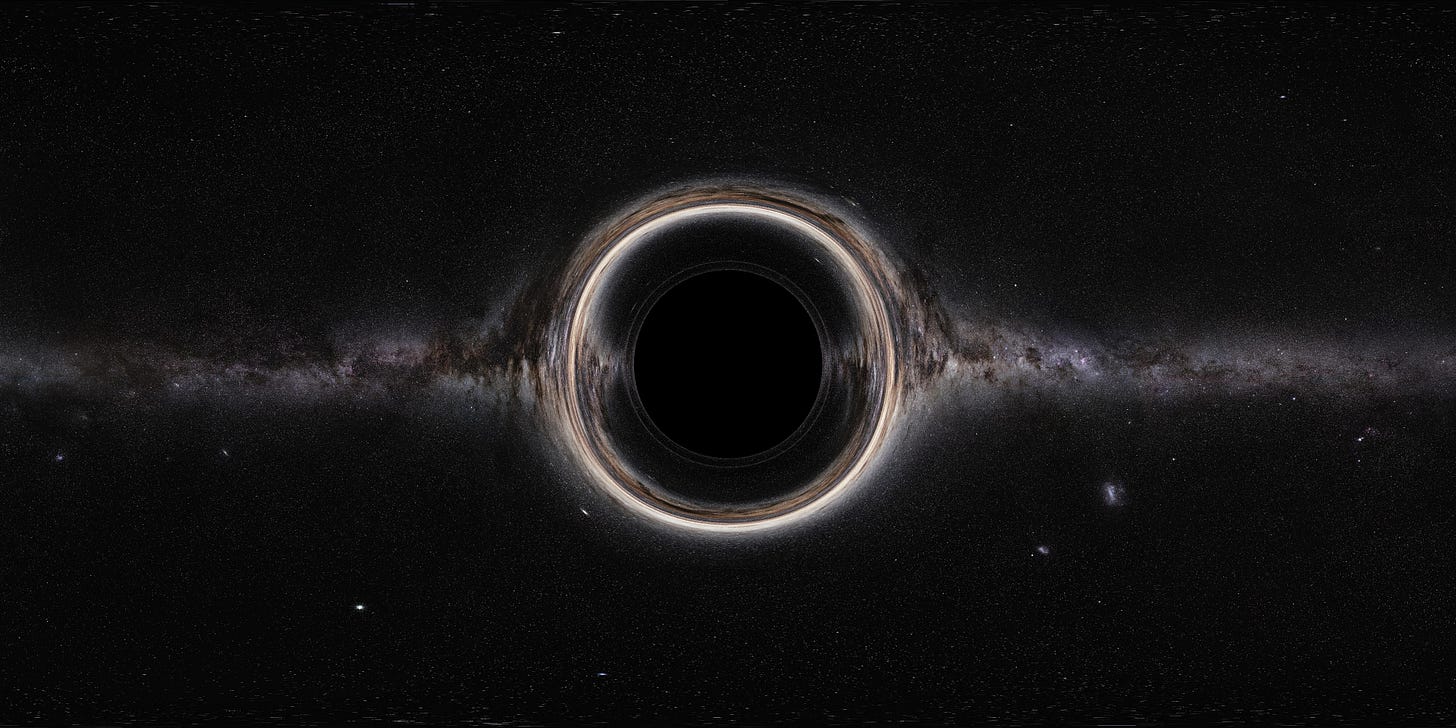

Numerical relativity (NR) is a cornerstone of modern physics, enabling the simulation of extreme astrophysical events like black hole mergers, which were pivotal for the Nobel-winning detection of gravitational waves. However, these simulations are notoriously resource-intensive, often requiring petascale supercomputers to solve the complex, non-linear Einstein Field Equations (EFEs) on discrete spacetime grids. A recent paper introduces Einstein Fields, a novel approach that seeks to reframe this computational challenge by merging the principles of general relativity with the power of neural fields.

The central idea is to shift from explicit, grid-based data storage to a compact, continuous, and differentiable neural representation. Instead of storing terabytes of data points, an entire 4D spacetime simulation is compressed into the weights of an implicit neural network. This work presents a compelling case that this is not merely a data compression technique, but a fundamentally new way to interact with and derive physics from simulated spacetimes.
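To make the compression idea concrete, here is a back-of-the-envelope sketch of how a compression factor can be estimated: the count of numbers stored on a grid divided by the count of network parameters. The layer widths and the 64^4 grid are hypothetical values chosen only for illustration; they are not the configurations reported in the paper, and the helper names are made up for this sketch. It also assumes both representations use the same floating-point width.

```python
def mlp_param_count(sizes):
    """Weights plus biases of a dense MLP with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

def grid_value_count(points_per_axis, n_axes=4, components=10):
    """Numbers stored for a symmetric 4x4 metric (10 independent
    components) sampled on a regular grid over 4D spacetime."""
    return components * points_per_axis ** n_axes

sizes = [4, 256, 256, 256, 10]   # hypothetical architecture, not from the paper
n_params = mlp_param_count(sizes)
n_grid = grid_value_count(64)    # hypothetical 64^4 grid, not from the paper
ratio = n_grid / n_params

print(f"parameters: {n_params}, grid values: {n_grid}, ratio: {ratio:.0f}x")
```

Because the grid count grows with the fourth power of the resolution while the network size stays fixed, the ratio rises quickly as the reference grid is refined.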

Methodology: Learning the Fabric of Spacetime

The core of EinFields is a neural network, typically a Multi-Layer Perceptron (MLP), that learns to represent the metric tensor, g_αβ(x^μ). The metric is the fundamental object in general relativity, encoding the geometry of spacetime and governing everything from distances and angles to the paths of light and matter.

The methodology is built on several key principles:

Distortion Decomposition: To improve learning efficiency, the model is trained not on the full metric but on its "distortion" component, Δ_αβ = g_αβ − η_αβ, where η_αβ is the flat Minkowski background (Equation 7). The network thus learns only the interesting 'wrinkles' and 'curves' in spacetime rather than spending capacity on relearning the unchanging flat background.
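The decomposition above can be illustrated with an analytic metric. The sketch below assumes the Schwarzschild solution written in Cartesian Kerr-Schild coordinates, where the metric is already the flat background plus a correction term; this coordinate choice and the function names are assumptions for illustration and are not taken from the EinFields code.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

# Minkowski metric with signature (-, +, +, +)
ETA = jnp.diag(jnp.array([-1.0, 1.0, 1.0, 1.0]))

def schwarzschild_metric(x, M=1.0):
    """Schwarzschild metric in Cartesian Kerr-Schild coordinates x = (t, x, y, z):
    g = eta + (2M / r) * l (outer) l, with l = (1, x/r, y/r, z/r)."""
    r = jnp.linalg.norm(x[1:])
    l = jnp.concatenate([jnp.ones(1), x[1:] / r])
    return ETA + (2.0 * M / r) * jnp.outer(l, l)

def distortion(x, M=1.0):
    """Training target in the decomposition: Delta = g - eta."""
    return schwarzschild_metric(x, M) - ETA

near = jnp.array([0.0, 3.0, 0.0, 0.0])
far = jnp.array([0.0, 300.0, 0.0, 0.0])
print(jnp.abs(distortion(near)).max())  # largest distortion component close to the hole
print(jnp.abs(distortion(far)).max())   # much smaller far away
```

In these coordinates the distortion decays like 2M/r, so far from the source the target is close to zero while the full metric components stay of order one.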

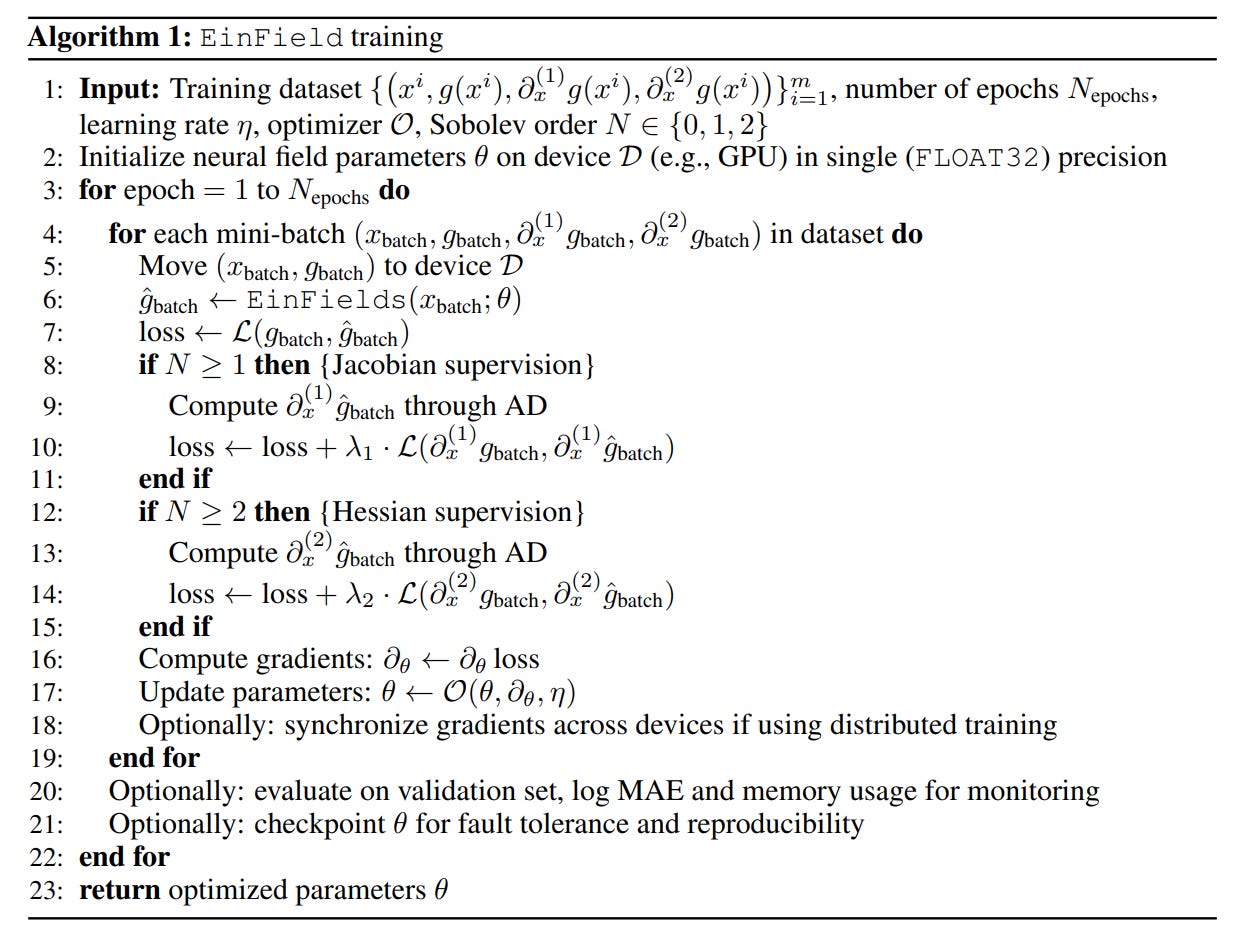

Sobolev Training: A crucial innovation is the use of Sobolev training (Algorithm 1), which extends the supervision beyond just the metric values. The loss function, detailed in the paper's Equations (8) and (9), is augmented to also penalize errors in the metric's first (Jacobian) and second (Hessian) derivatives. In the language of differential geometry, a spacetime is a smooth manifold where derivatives must also be well-behaved. By explicitly training on these derivatives, Sobolev training enforces this geometric self-consistency directly into the neural network's weights, ensuring the learned manifold is not just accurate point-wise, but also structurally sound.
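A minimal sketch of a Sobolev-style loss in JAX follows. It is not the paper's Equations (8) and (9): the fixed weights, the mean-squared-error form, the tiny MLP, and the toy plane-wave target are all assumptions made for illustration (the paper uses GradNorm to balance the terms).

```python
import jax
import jax.numpy as jnp

def init_mlp(key, sizes):
    """Random dense-layer weights; the sizes used below are arbitrary."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        key, sub = jax.random.split(key)
        W = jax.random.normal(sub, (n_in, n_out)) / jnp.sqrt(n_in)
        params.append((W, jnp.zeros(n_out)))
    return params

def model(params, x):
    """Map one spacetime point x (shape (4,)) to a symmetric 4x4 distortion."""
    h = x
    for W, b in params[:-1]:
        h = jax.nn.silu(h @ W + b)
    W, b = params[-1]
    out = (h @ W + b).reshape(4, 4)
    return 0.5 * (out + out.T)

def target(x, amp=1e-2, omega=1.0):
    """Toy target: plus-polarized plane wave along z (stand-in for real data)."""
    h = amp * jnp.cos(omega * (x[0] - x[3]))
    return jnp.zeros((4, 4)).at[1, 1].set(h).at[2, 2].set(-h)

def sobolev_loss(params, x, weights=(1.0, 1.0, 1.0)):
    """Weighted MSE on values, Jacobians and Hessians at a single point."""
    f = lambda p: model(params, p)
    orders = (lambda fn: fn, jax.jacfwd, jax.hessian)
    terms = [jnp.mean((d(f)(x) - d(target)(x)) ** 2) for d in orders]
    return sum(w * t for w, t in zip(weights, terms))

def batch_loss(params, xs):
    """Average the per-point loss over a batch of spacetime points."""
    return jnp.mean(jax.vmap(lambda x: sobolev_loss(params, x))(xs))

params = init_mlp(jax.random.PRNGKey(0), [4, 32, 32, 16])
xs = jax.random.uniform(jax.random.PRNGKey(1), (64, 4))
loss, grads = jax.value_and_grad(batch_loss)(params, xs)
print(loss)
```

Here the derivative targets come from differentiating an analytic function; with numerical data they would have to be supplied alongside the metric values.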

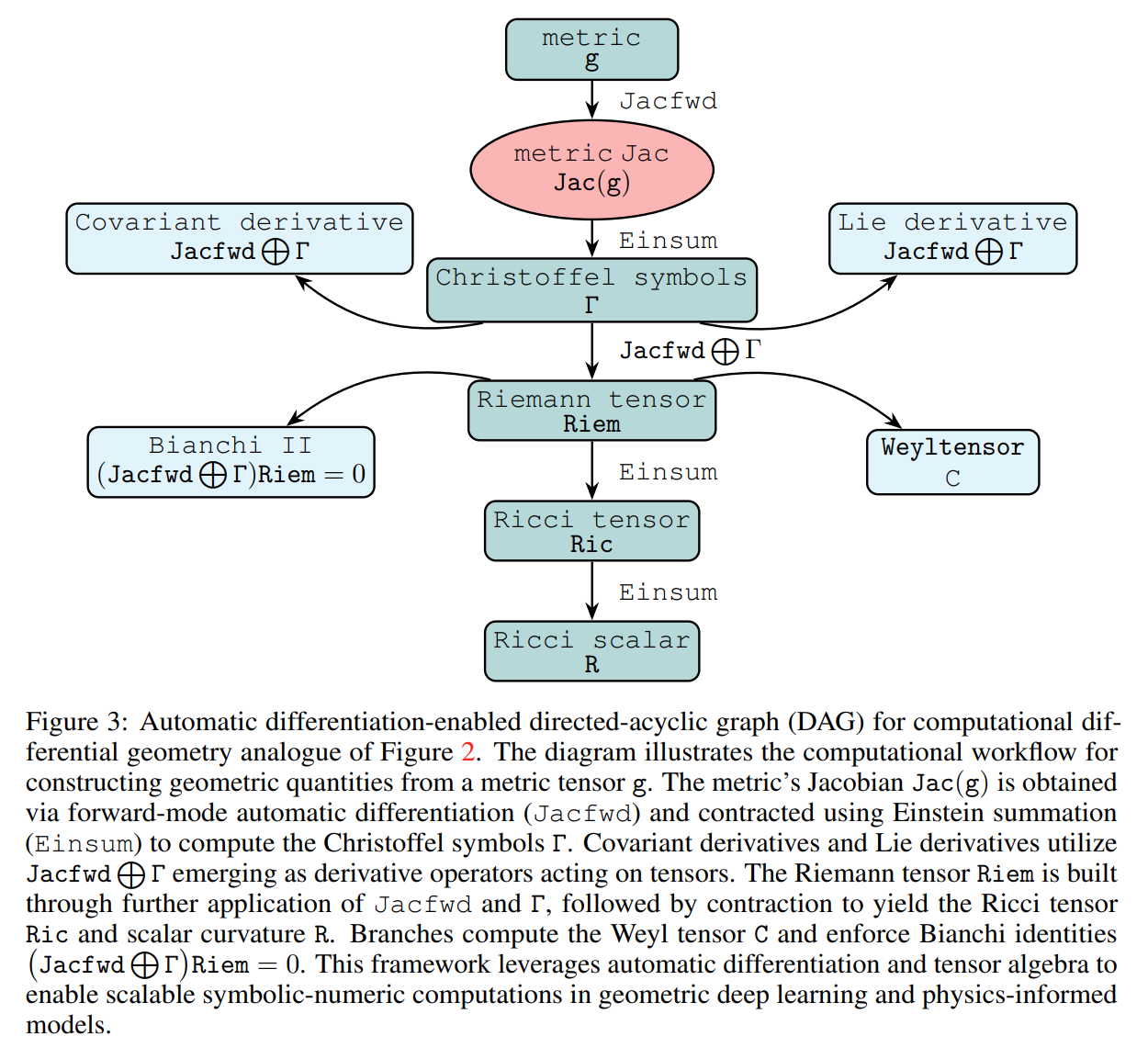

Automatic Differentiation for Physics Derivation: Once the network is trained, all other physical quantities are derived using automatic differentiation (AD) on the learned metric. Instead of painstakingly programming approximations for every physical derivative, EinFields treats the entire edifice of differential geometry, from the metric to Christoffel symbols to the Riemann tensor, as a single, differentiable computational graph (Figure 3). This Directed Acyclic Graph (DAG) is a live, computational model of geometry where physical laws emerge from the chained application of derivatives, all handled by AD.
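The chain from metric to Christoffel symbols to Riemann tensor can be sketched in a few lines of JAX. This is an illustrative sketch, not the API of the authors' library: the function names are made up, and an analytic Schwarzschild metric in (t, r, θ, φ) coordinates stands in for a trained network so the outputs can be checked against textbook values.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

def schwarzschild(x, M=1.0):
    """Schwarzschild metric in (t, r, theta, phi) coordinates."""
    r, th = x[1], x[2]
    f = 1.0 - 2.0 * M / r
    return jnp.diag(jnp.array([-f, 1.0 / f, r**2, (r * jnp.sin(th))**2]))

def christoffel(metric_fn, x):
    """Gamma^l_{mn} = 1/2 g^{ls} (d_m g_{sn} + d_n g_{sm} - d_s g_{mn})."""
    g_inv = jnp.linalg.inv(metric_fn(x))
    dg = jax.jacfwd(metric_fn)(x)              # dg[a, b, c] = d_c g_{ab}
    bracket = (jnp.einsum("snm->smn", dg)      # d_m g_{sn}
               + dg                            # d_n g_{sm}
               - jnp.einsum("mns->smn", dg))   # d_s g_{mn}
    return 0.5 * jnp.einsum("ls,smn->lmn", g_inv, bracket)

def riemann(metric_fn, x):
    """R^r_{smn} = d_m G^r_{ns} - d_n G^r_{ms} + G^r_{ml} G^l_{ns} - G^r_{nl} G^l_{ms}."""
    gamma = christoffel(metric_fn, x)
    # dgamma[r, a, b, c] = d_c Gamma^r_{ab}
    dgamma = jax.jacfwd(lambda p: christoffel(metric_fn, p))(x)
    return (jnp.einsum("rnsm->rsmn", dgamma)
            - jnp.einsum("rmsn->rsmn", dgamma)
            + jnp.einsum("rml,lns->rsmn", gamma, gamma)
            - jnp.einsum("rnl,lms->rsmn", gamma, gamma))

def kretschmann(metric_fn, x):
    """K = R_{abcd} R^{abcd}, a coordinate-invariant curvature scalar."""
    g = metric_fn(x)
    g_inv = jnp.linalg.inv(g)
    R = riemann(metric_fn, x)
    R_low = jnp.einsum("ae,ebcd->abcd", g, R)
    R_up = jnp.einsum("bf,cg,dh,afgh->abcd", g_inv, g_inv, g_inv, R)
    return jnp.einsum("abcd,abcd->", R_low, R_up)

x = jnp.array([0.0, 10.0, 1.0, 0.0])           # r = 10 M, theta = 1 rad
gamma = christoffel(schwarzschild, x)
K = kretschmann(schwarzschild, x)
print(gamma[1, 0, 0], K)                       # compare with M f / r^2 and 48 M^2 / r^6
```

Swapping `schwarzschild` for a function that returns the flat background plus a network's predicted distortion would give the same quantities for a learned metric, with accuracy then limited by the fit rather than by AD.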

The models are primarily MLPs with SiLU activation functions, trained with the SOAP optimizer and a GradNorm weighting scheme to manage gradient imbalances during the multi-objective Sobolev training.

Experimental Validation: Recreating Einstein's Universe

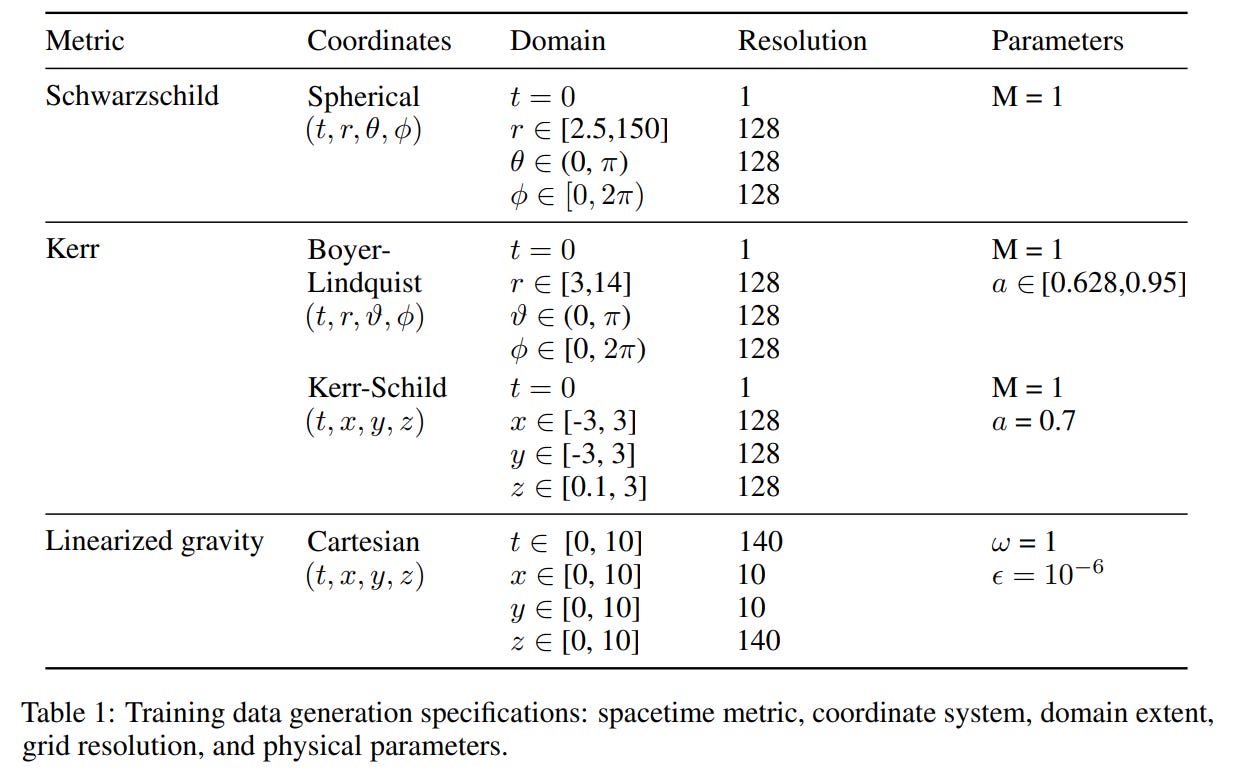

The authors rigorously validate EinFields by training them on synthetic data generated from well-known analytical solutions to the EFEs, including the Schwarzschild (non-rotating black hole), Kerr (rotating black hole), and linearized gravity (gravitational wave) spacetimes (Table 1).

Key Quantitative Results:

Compression and Accuracy: EinFields achieve a best-case Mean Absolute Error (MAE) of approximately 10^-8 for the metric components, while offering compression factors of 311x to 4035x over explicit grid storage (Table 2, Figure 4a).

Derivative Fidelity: The AD-based derivatives are significantly more accurate than baselines. For instance, the reconstructed Christoffel symbols are over an order of magnitude more accurate than 6th-order finite-difference stencils (Figure 4b), and other derived quantities like the Riemann tensor can be up to 10^5 times more accurate (Table 3).
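The difference between AD and stencil derivatives can be seen on a one-dimensional toy problem. The sketch below differentiates the analytic Schwarzschild component g_tt(r) with `jax.grad` and with a standard 6th-order central stencil. It only isolates the truncation error of the stencil on an exact function; it does not reproduce the paper's experiment, where AD acts on a trained network whose fitting error also matters. The radius and step size are arbitrary choices.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

def g_tt(r, M=1.0):
    """Schwarzschild g_tt as a function of the areal radius r."""
    return -(1.0 - 2.0 * M / r)

def fd6(f, r, h):
    """6th-order central finite-difference approximation of f'(r)."""
    coeffs = jnp.array([-1.0, 9.0, -45.0, 0.0, 45.0, -9.0, 1.0]) / 60.0
    offsets = h * jnp.arange(-3, 4)
    return jnp.sum(coeffs * f(r + offsets)) / h

r0, h = 3.0, 0.1               # arbitrary evaluation point and grid spacing
exact = -2.0 / r0**2           # analytic derivative of g_tt for M = 1
ad_err = abs(float(jax.grad(g_tt)(r0)) - exact)
fd_err = abs(float(fd6(g_tt, r0, h)) - exact)
print(f"AD error: {ad_err:.2e}, FD error: {fd_err:.2e}")
```

The stencil error depends on the grid spacing h, whereas the AD result is exact up to floating-point rounding for the function it is given.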

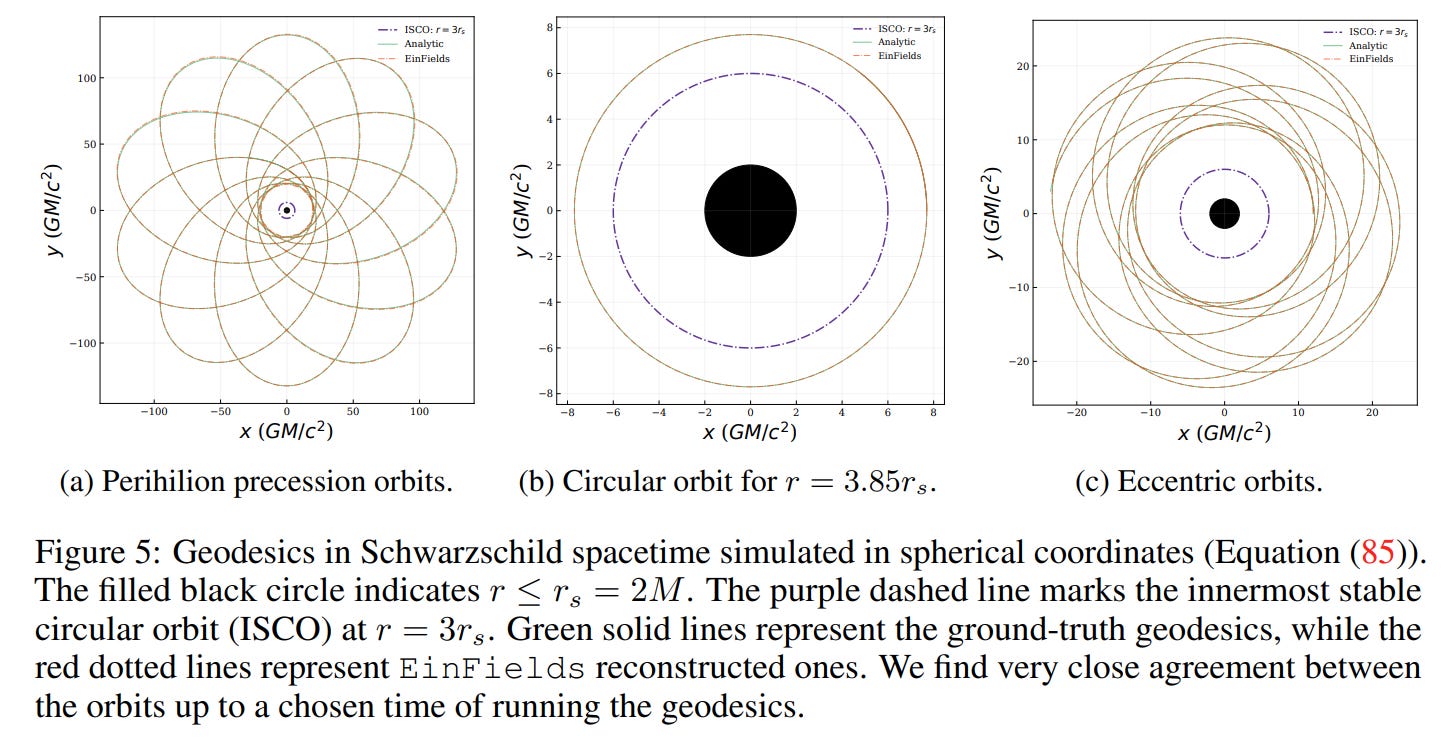

Qualitative Demonstrations: The framework successfully recreates canonical tests of general relativity with high fidelity:

It accurately models the perihelion precession of orbits around a Schwarzschild black hole (Figure 5).

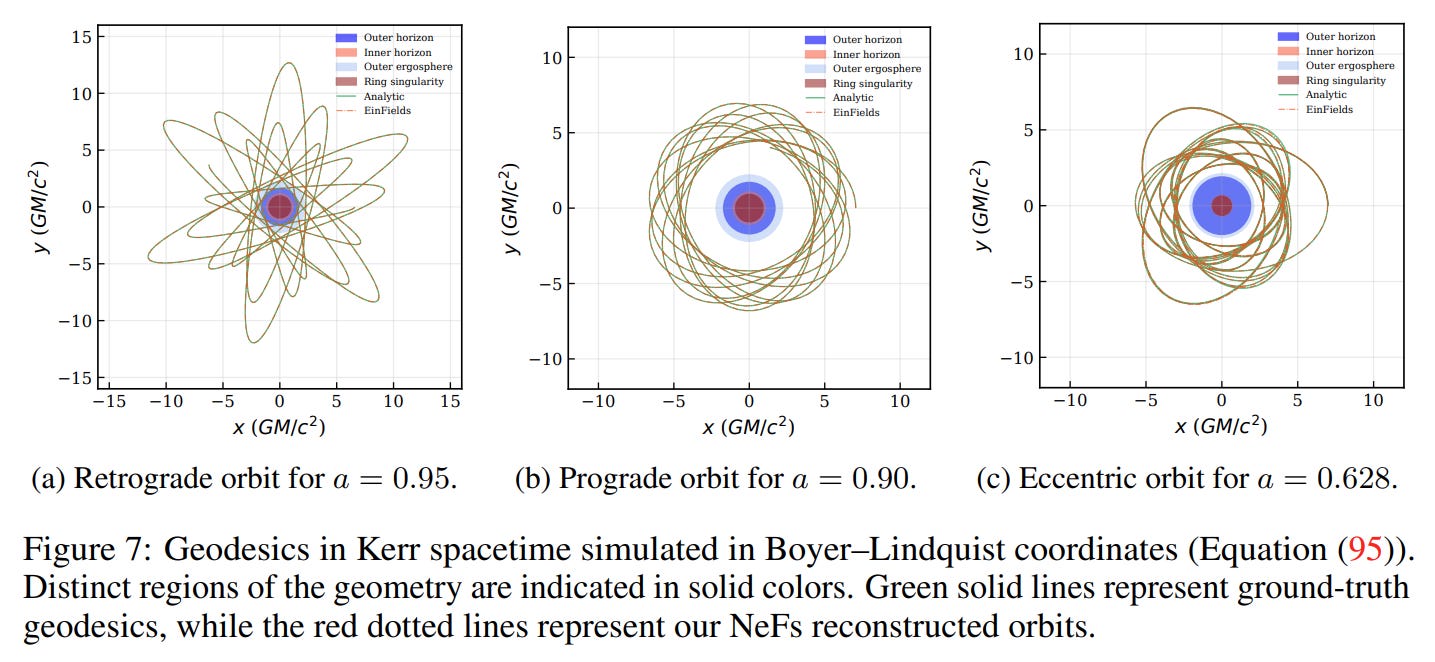

It simulates complex prograde and retrograde geodesics in the spacetime of a rotating Kerr black hole (Figure 7).

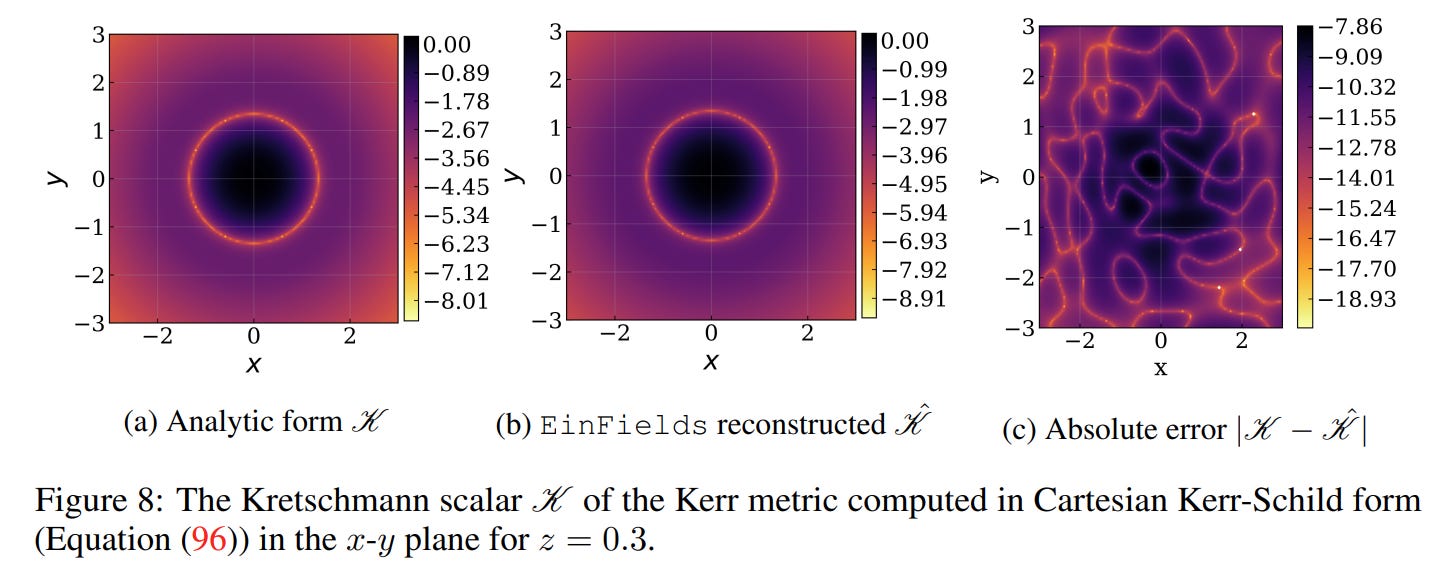

It captures the characteristic "ring singularity" of the Kerr metric through the Kretschmann scalar (Figure 8).

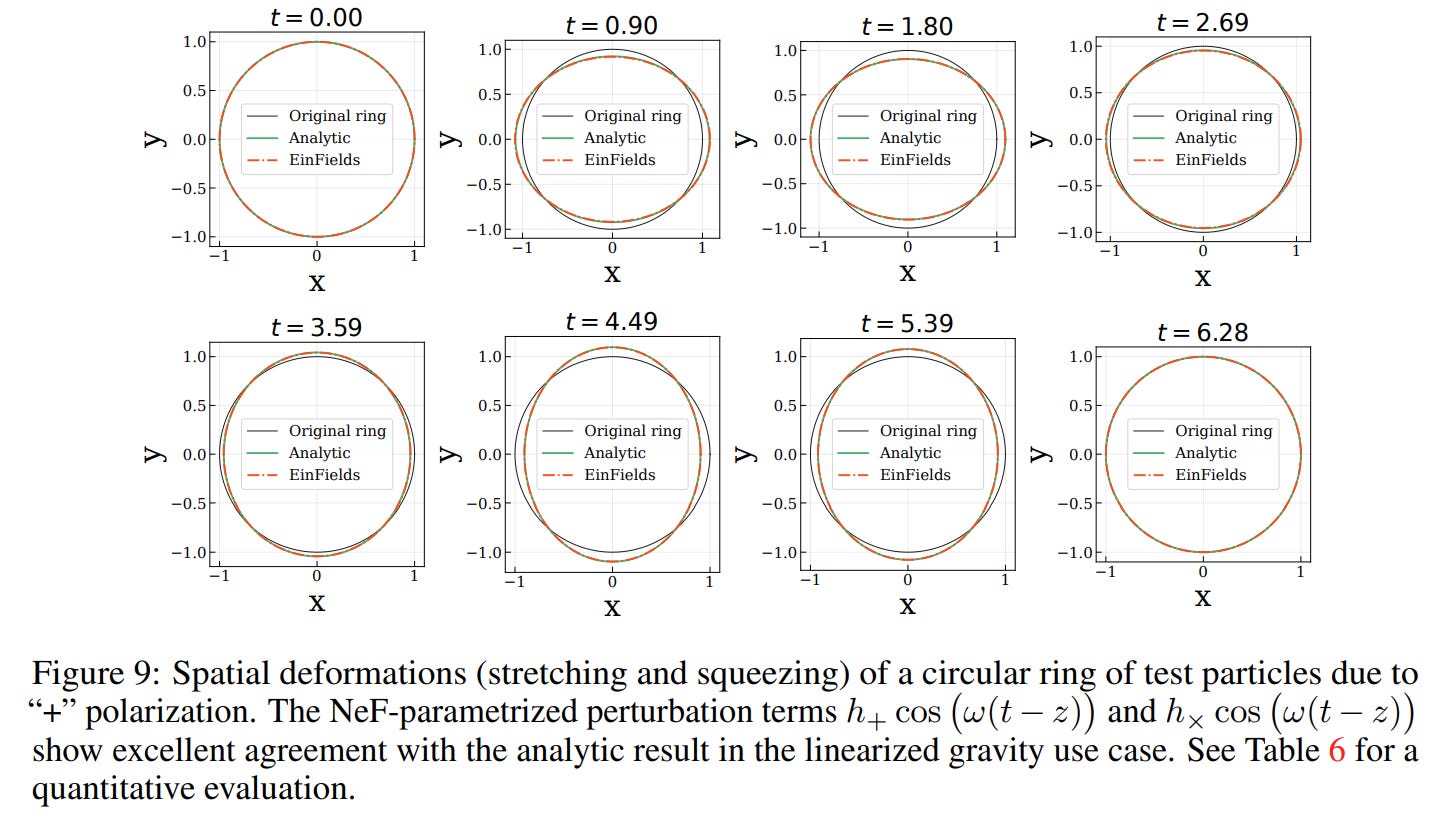

It reproduces the famous "stretching and squeezing" of a ring of test particles by a passing gravitational wave (Figure 9)

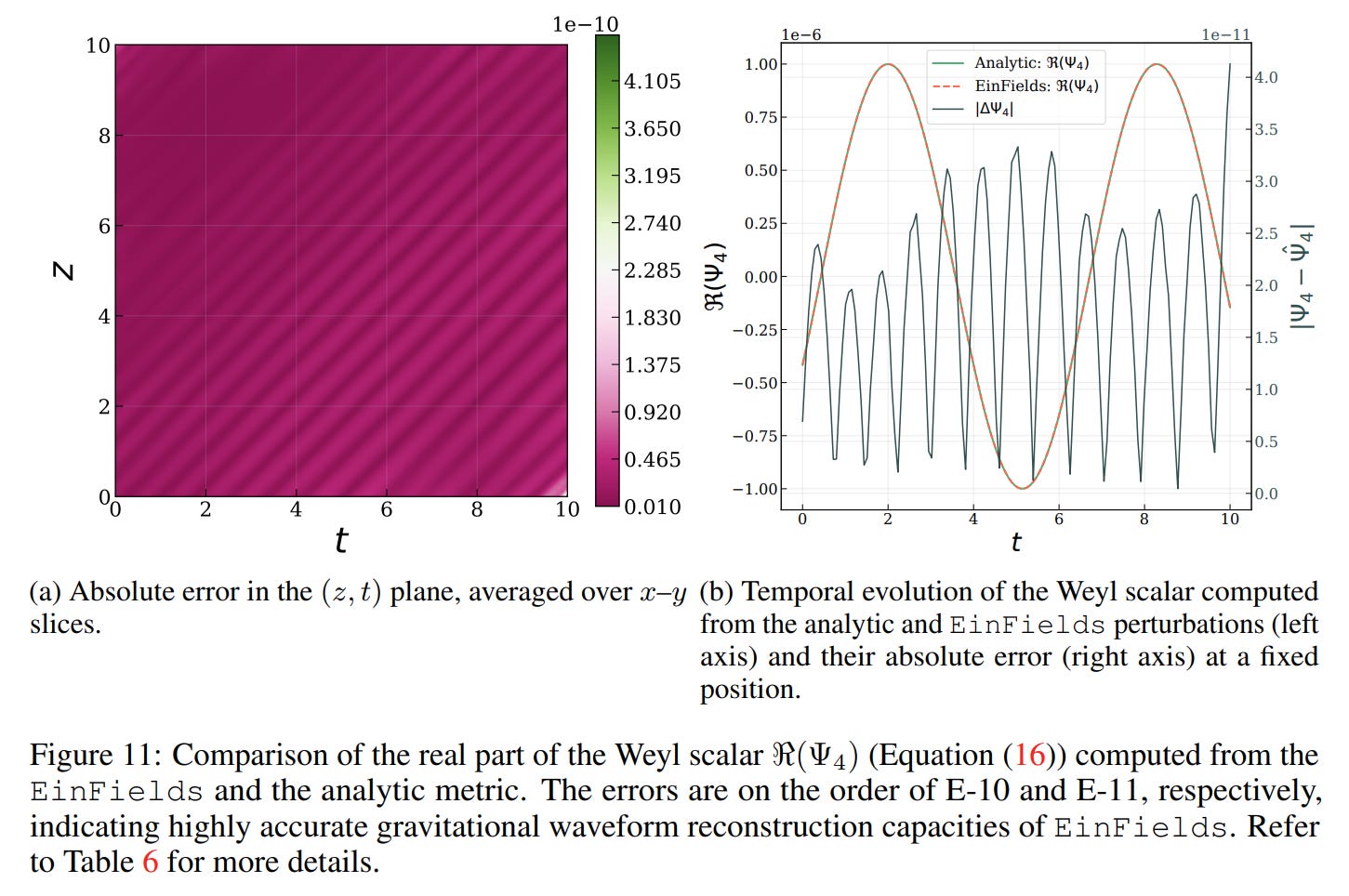

and accurately computes the associated Weyl scalar Ψ_4 (Figure 11), a key quantity in gravitational wave astronomy.

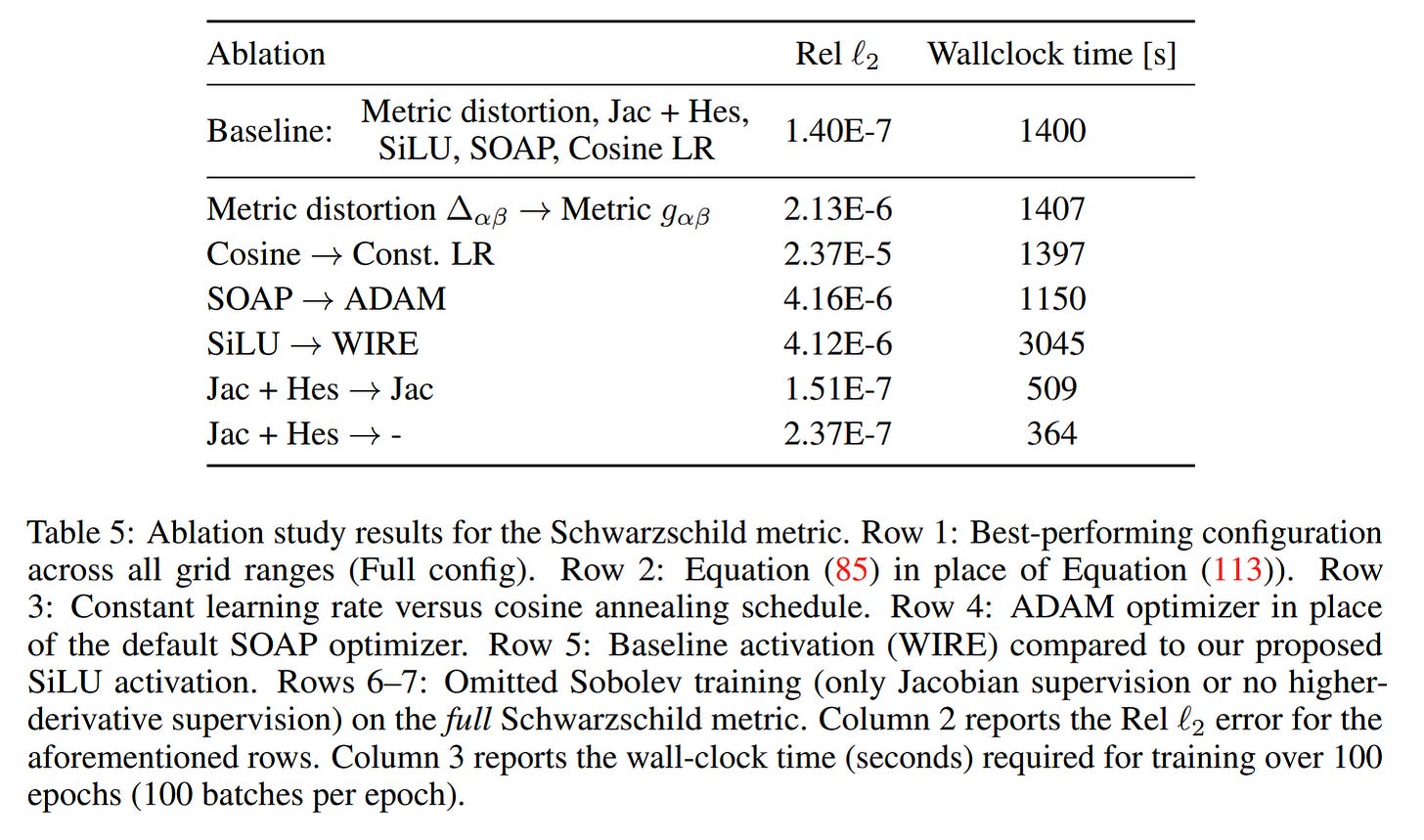

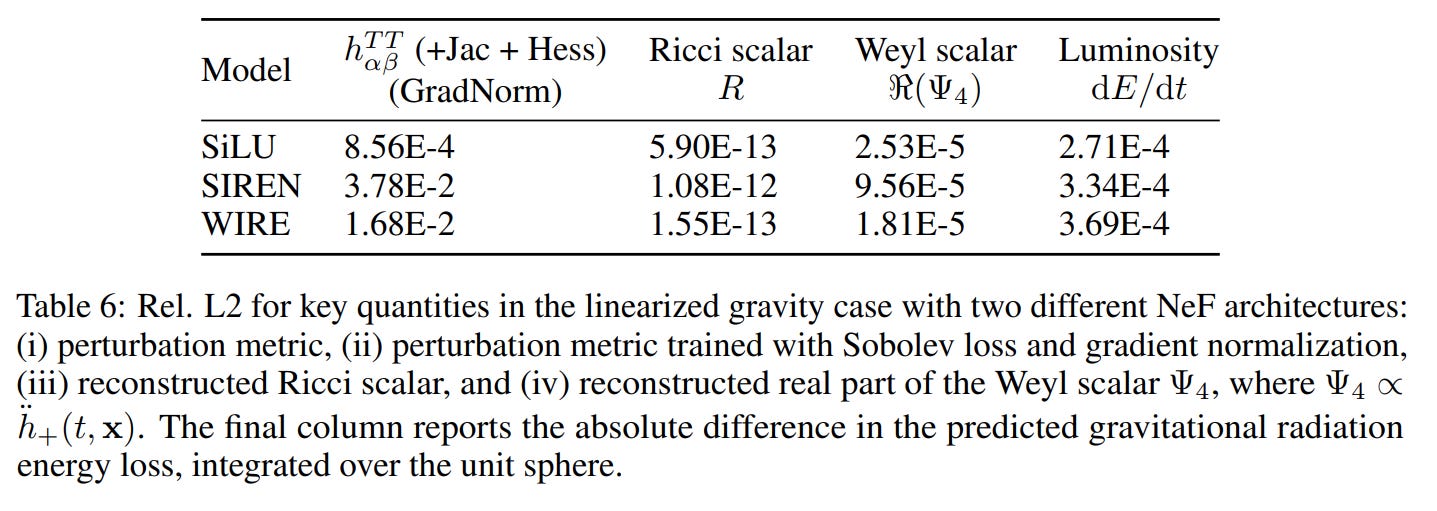
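The geodesic demonstrations above rest on integrating the geodesic equation, d²x^λ/dτ² = −Γ^λ_μν u^μ u^ν, with Christoffel symbols obtained by AD. The sketch below does this for a circular orbit in an analytic Schwarzschild metric using a fixed-step RK4 integrator. The integrator, step size, and initial conditions are assumptions for illustration; the paper's solver settings are not reproduced here.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

def schwarzschild(x, M=1.0):
    """Schwarzschild metric in (t, r, theta, phi) coordinates."""
    r, th = x[1], x[2]
    f = 1.0 - 2.0 * M / r
    return jnp.diag(jnp.array([-f, 1.0 / f, r**2, (r * jnp.sin(th))**2]))

def christoffel(metric_fn, x):
    """Gamma^l_{mn} from the metric via forward-mode AD."""
    g_inv = jnp.linalg.inv(metric_fn(x))
    dg = jax.jacfwd(metric_fn)(x)              # dg[a, b, c] = d_c g_{ab}
    bracket = (jnp.einsum("snm->smn", dg) + dg
               - jnp.einsum("mns->smn", dg))
    return 0.5 * jnp.einsum("ls,smn->lmn", g_inv, bracket)

def geodesic_rhs(state):
    """state = (x, u): dx/dtau = u, du^l/dtau = -Gamma^l_{mn} u^m u^n."""
    x, u = state[:4], state[4:]
    acc = -jnp.einsum("lmn,m,n->l", christoffel(schwarzschild, x), u, u)
    return jnp.concatenate([u, acc])

def rk4_step(state, dtau):
    """One classical fourth-order Runge-Kutta step in proper time."""
    k1 = geodesic_rhs(state)
    k2 = geodesic_rhs(state + 0.5 * dtau * k1)
    k3 = geodesic_rhs(state + 0.5 * dtau * k2)
    k4 = geodesic_rhs(state + dtau * k3)
    return state + dtau / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def integrate(state0, dtau, n_steps):
    """Advance the geodesic n_steps times and return the final state."""
    step = lambda s, _: (rk4_step(s, dtau), None)
    final, _ = jax.lax.scan(step, state0, None, length=n_steps)
    return final

def four_velocity_norm(state):
    """g_{mn} u^m u^n, which stays at -1 along an exact timelike geodesic."""
    x, u = state[:4], state[4:]
    return u @ schwarzschild(x) @ u

# Circular equatorial orbit at r = 10 M (M = 1): Omega^2 = M / r^3
r0 = 10.0
u_t = 1.0 / jnp.sqrt(1.0 - 3.0 / r0)
u_phi = jnp.sqrt(1.0 / r0**3) * u_t
state0 = jnp.array([0.0, r0, jnp.pi / 2, 0.0, u_t, 0.0, 0.0, u_phi])

final = integrate(state0, dtau=0.5, n_steps=2000)
print(final[1], four_velocity_norm(final))     # radius and norm after several orbits
```

Tracking the four-velocity norm is one simple way to monitor how errors build up over a long integration; with a learned metric, any drift would combine integrator error with the error in the network's Christoffel symbols.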

The paper also includes insightful ablation studies, confirming that SiLU activations and the SOAP optimizer are well-suited for this task (Table 5, Table 6),

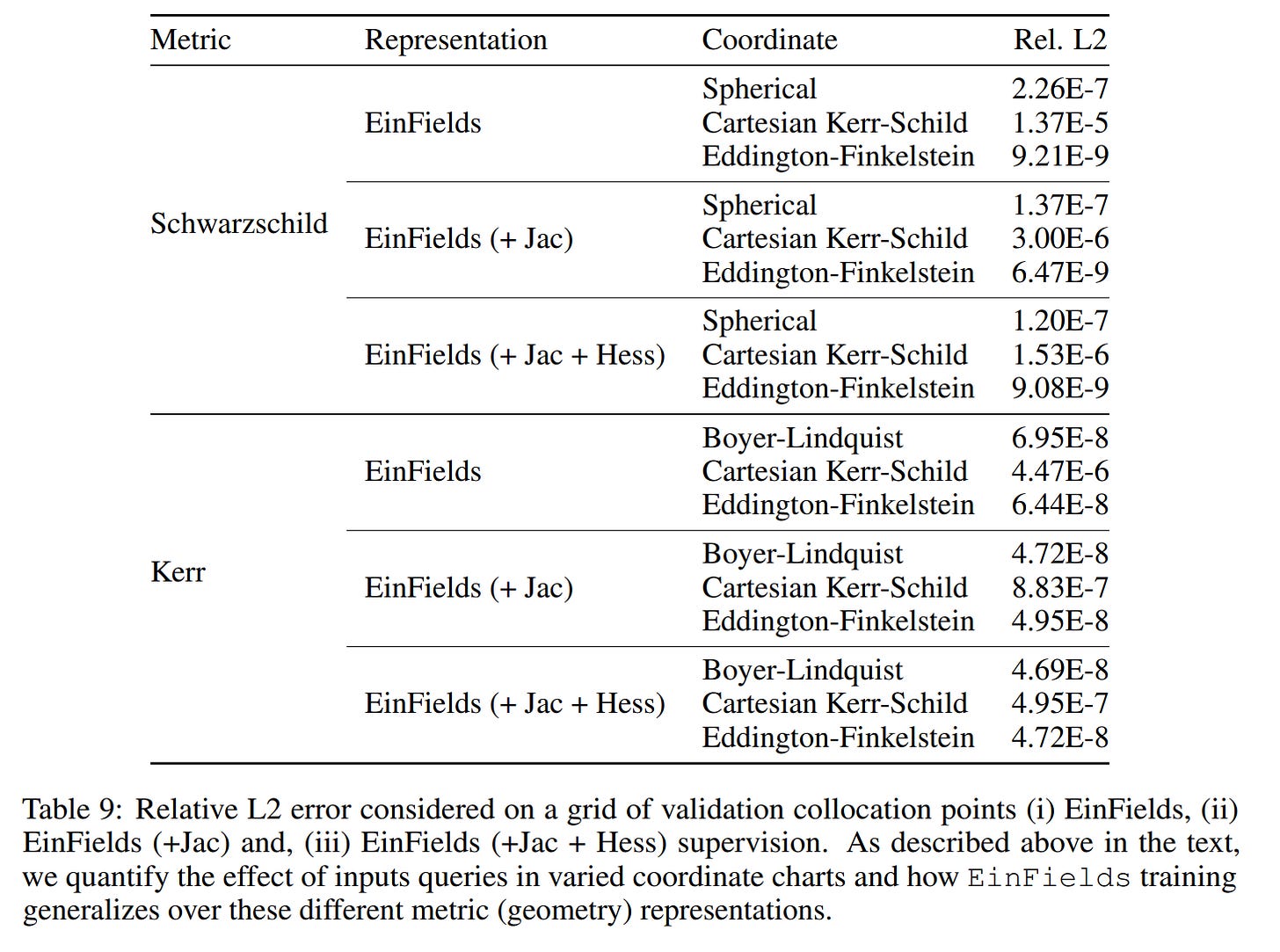

and an analysis on training across different coordinate systems (e.g., spherical, Cartesian) to test the model's robustness (Table 9).

Limitations and Future Directions

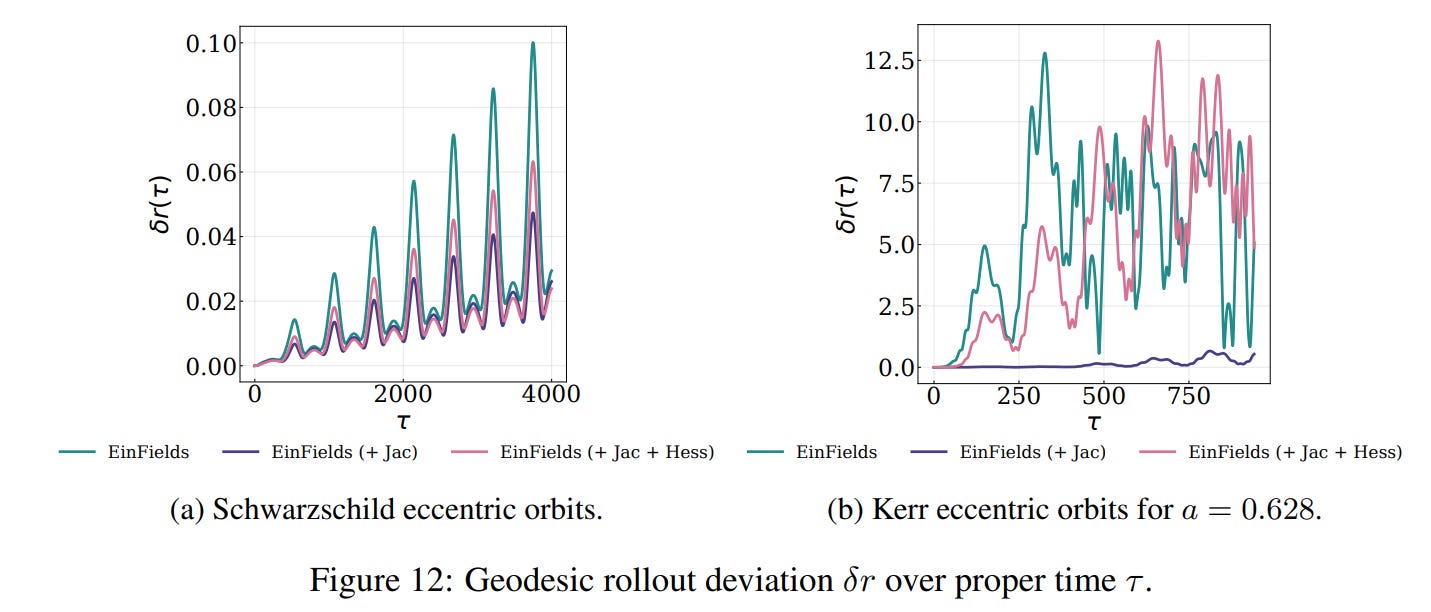

The authors provide a candid discussion of the method's limitations. The lossy nature of neural compression imposes an accuracy ceiling (MAE around 10^-8), which prevents it from outperforming high-order numerical methods in float64 precision. Furthermore, minute errors can accumulate during long-term geodesic simulations, leading to trajectory divergence (Figure 12), and the models struggle near true singularities.

The clear path forward involves testing EinFields on large-scale, dynamic NR simulation data from sources like binary black hole mergers. The authors also propose developing hybrid frameworks that combine the representational power of EinFields with classical numerical solvers (like the BSSN formalism or Generalized Harmonic evolution) to create next-generation simulation tools.

Conclusion and Impact

Einstein Fields represents a significant and thoughtfully executed step towards integrating deep learning into the heart of computational physics. By focusing on representing the fundamental metric tensor, the paper demonstrates a powerful new paradigm where dynamics emerge as a natural byproduct of a learned geometric structure. The impressive compression, derivative accuracy, and faithful reconstruction of complex physics underscore the potential of this approach. The release of an open-source, JAX-based differential geometry library further lowers the barrier to entry for researchers in this burgeoning field. This work not only provides a practical tool for numerical relativity but also serves as a compelling proof-of-concept for how neural fields can be applied to model the fundamental laws of our universe.