Energy-Based Transformers are Scalable Learners and Thinkers

Authors: Alexi Gladstone, Ganesh Nanduru, Md Mofijul Islam, Peixuan Han, Hyeonjeong Ha, Aman Chadha, Yilun Du, Heng Ji, Jundong Li, Tariq Iqbal

Paper: https://arxiv.org/abs/2507.02092

Code: https://github.com/alexiglad/EBT

TL;DR

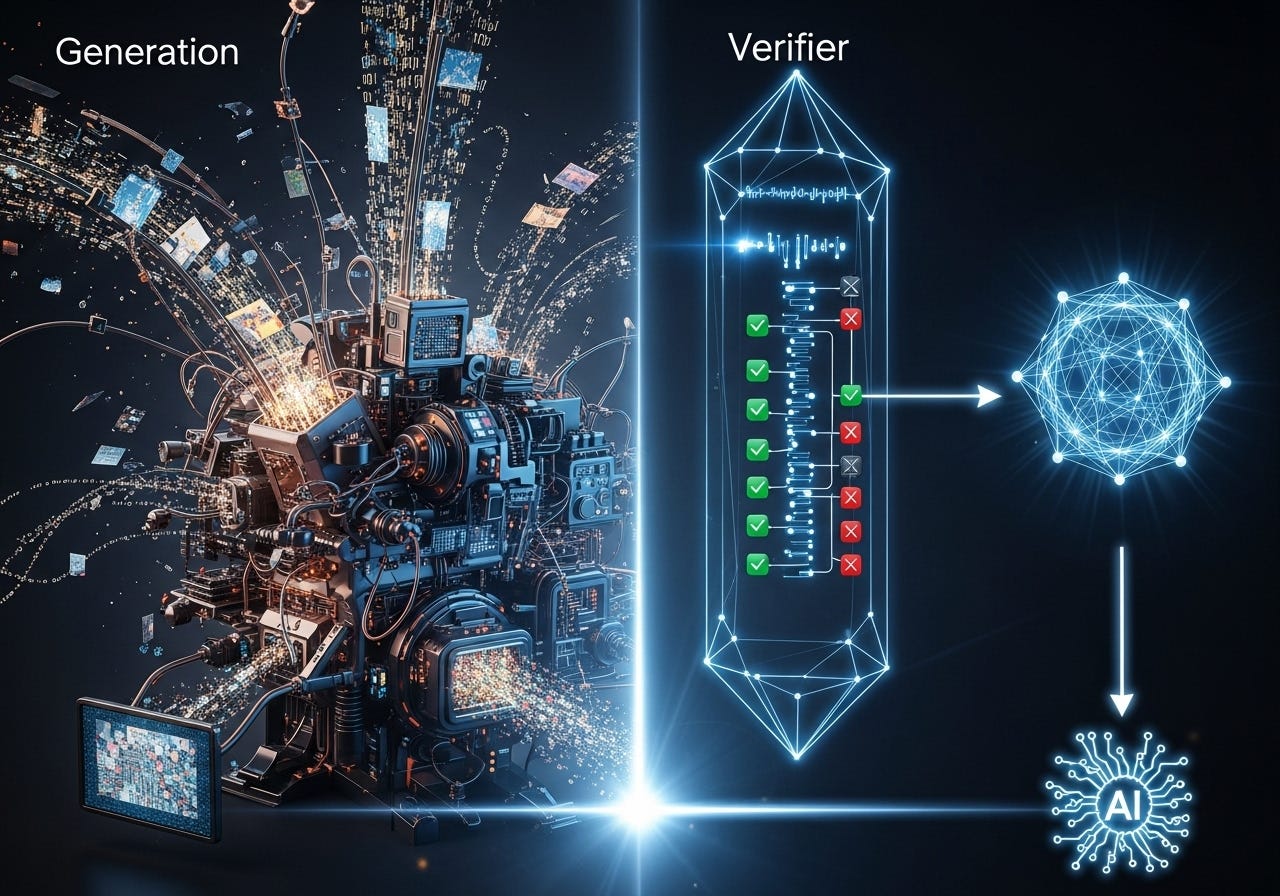

What was done? The paper introduces Energy-Based Transformers (EBTs), a new class of models that frame "thinking" as an optimization procedure. Instead of directly generating predictions, EBTs learn an energy function that acts as a verifier, assigning a scalar energy (an unnormalized probability, where lower energy means higher compatibility) to any input-prediction pair. Predictions are then made by starting with a random candidate and iteratively refining it through gradient descent on that energy until it settles in a low-energy (highly compatible) state. This process allows three key facets of "System 2 thinking" to emerge entirely from unsupervised learning: dynamic computation allocation, inherent uncertainty modeling, and explicit prediction verification.
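To make the refinement loop concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the energy function is a hand-written quadratic standing in for a learned Transformer, its gradient is written out analytically instead of obtained by backpropagation, and the names `energy`, `energy_grad_y`, `think`, `step_size`, `max_steps`, and `tol` are all hypothetical.

```python
import random


def energy(x, y):
    """Toy stand-in for a learned energy: lowest when y equals 2*x + 1."""
    return (y - (2.0 * x + 1.0)) ** 2


def energy_grad_y(x, y):
    """Analytic gradient of the toy energy with respect to the candidate y."""
    return 2.0 * (y - (2.0 * x + 1.0))


def think(x, step_size=0.1, max_steps=100, tol=1e-8, seed=0):
    """Refine a random candidate by gradient descent on the energy.

    Returns (prediction, final_energy, steps_used).
    """
    rng = random.Random(seed)
    y = rng.gauss(0.0, 1.0)  # random initial candidate
    steps_used = 0
    for _ in range(max_steps):
        if energy(x, y) < tol:  # the "verifier" is satisfied, stop early
            break
        y -= step_size * energy_grad_y(x, y)  # move downhill in energy
        steps_used += 1
    return y, energy(x, y), steps_used


if __name__ == "__main__":
    prediction, final_energy, steps = think(3.0)
    print(prediction, final_energy, steps)
```

In this toy setting, the number of steps used depends on how far the initial candidate starts from a low-energy state, which loosely mirrors dynamic computation allocation, and the returned final energy plays the role of an explicit verification score for the prediction.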

Why does it matter? EBTs challenge the dominant paradigms of autoregressive models and Diffusion Transformers (DiTs) by leveraging a more fundamental principle: it is often computationally easier to verify a solution than to generate it. The authors argue that this leads to a more efficient and robust path to scalable AI. The most significant findings are that EBTs achieve a scaling rate up to 35% higher than traditional Transformer++ models during pretraining, outperform DiTs in image denoising with 99% fewer forward passes, and show stronger generalization on out-of-distribution (OOD) data. This suggests that learning to verify is a more scalable and generalizable principle than learning to generate directly, offering a promising new direction for building more capable and reliable foundation models.

Details

A New Paradigm for AI Reasoning

Recent progress in AI has been marked by a push to imbue models with "System 2 thinking": the slow, deliberate, and analytical reasoning characteristic of human cognition. However, most existing approaches are limited: they tend to be specific to one modality (such as text), specific to one kind of problem (such as verifiable domains like math and coding), or dependent on additional supervision on top of unsupervised pretraining. They also often expose "The Generative AI Paradox": the fact that models can generate complex data but fail at the simpler task of assessing the plausibility of their own outputs. This paper asks a foundational question: can we develop models that learn to "think" solely from unsupervised learning, in a way that is generalizable across problems and data types?

The authors' answer is a resounding "yes." Their Energy-Based Transformers (EBTs) move beyond the standard generative playbook and reframe thinking not as a single forward pass, but as an iterative optimization process over a learned energy landscape.
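
In symbols, this optimization view can be sketched as gradient descent on the energy with respect to the prediction. The notation here is assumed for illustration and does not appear in the text above: $x$ is the input (context), $\hat{y}_i$ the candidate prediction after $i$ refinement steps, $E_\theta$ the learned energy function, $\alpha$ a step size, and $N$ the number of steps. The Gaussian initialization is likewise an assumption standing in for "a random candidate".

$$
\hat{y}_0 \sim \mathcal{N}(0, I), \qquad
\hat{y}_{i+1} = \hat{y}_i - \alpha \, \nabla_{\hat{y}} E_\theta(x, \hat{y}_i), \qquad i = 0, \dots, N-1
$$

Under this reading, running more steps (larger $N$) spends more computation on a prediction, and the final energy $E_\theta(x, \hat{y}_N)$ can serve as a score for how well the prediction fits the input.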
