Exponential Dynamic Energy Network for High Capacity Sequence Memory

Authors: Arjun Karuvally, Pichsinee Lertsaroj, Terrence J. Sejnowski, Hava T. Siegelmann

Paper: https://arxiv.org/abs/2510.24965

Code: https://github.com/arjunkaruvally/EDEN_torch

TL;DR

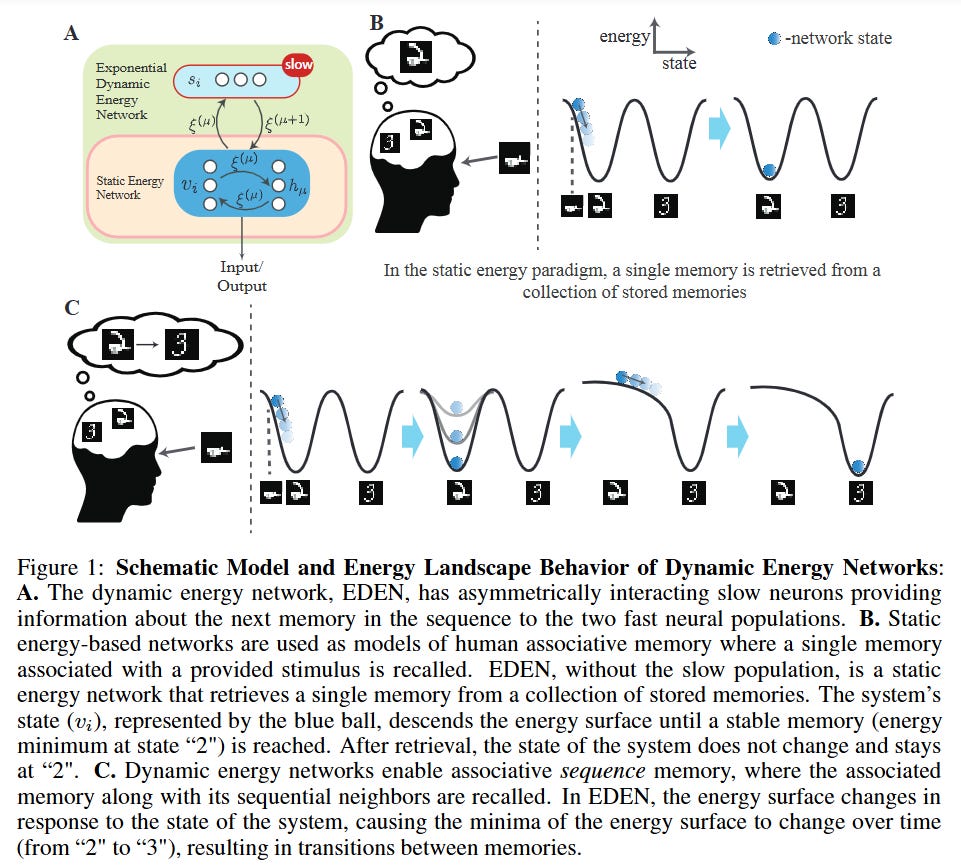

WHAT was done? The paper introduces the Exponential Dynamic Energy Network (EDEN), a novel neural architecture that extends the classical energy paradigm of Hopfield networks to model sequential memory. EDEN achieves this by combining a fast, high-capacity static energy network (responsible for storing individual memories) with a slow, asymmetrically interacting modulatory population. This slow population dynamically evolves the network’s energy function over time, causing the energy minima to shift in a controlled manner, thus enabling robust transitions between sequential memory states.

WHY it matters? This work presents several major breakthroughs. First, it solves a long-standing challenge by demonstrating exponential sequence memory capacity, O(γ^N), a dramatic improvement over the linear capacity, O(N), of conventional sequence models. This makes it a viable blueprint for scalable and reliable external memory in AI systems. Second, EDEN establishes a new Dynamic Energy Paradigm, providing a rigorous and interpretable theoretical framework for temporal memory. Crucially, the paper highlights that the high-capacity memory mechanism used is functionally equivalent to the self-attention mechanism in Transformers, forging an exciting link between classic theories of memory and cutting-edge AI. Finally, the model offers strong biological plausibility, as its fast and slow neural populations exhibit dynamics analogous to the “time cells” and “ramping cells” observed in the human brain during episodic memory tasks.

Details

The Limitation of a Static Worldview

The energy paradigm, introduced by Hopfield and Amari four decades ago, provided a powerful and principled framework for understanding memory in neural networks. The concept was elegant: memory retrieval is a process of descending an energy landscape until the network state settles into a stable minimum, or attractor, which represents a stored memory. While this approach offered theoretical guarantees of stability and noise robustness, its core principle—convergence on a fixed energy surface—made it fundamentally unsuitable for modeling sequential memory, which requires controlled, directional transitions between stable states (Figure 1).
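To make the static picture concrete, here is a minimal sketch of classical Hopfield retrieval as energy descent. It is a textbook Hebbian network, not code from the EDEN repository. The helper names (`hadamard`, `energy`, `retrieve`) and the choice of orthogonal Hadamard rows as stored patterns are assumptions, made only so the toy behaves deterministically.

```python
import numpy as np

def hadamard(k):
    """Sylvester construction: a 2^k x 2^k matrix of +/-1 with orthogonal rows."""
    H = np.array([[1.0]])
    for _ in range(k):
        H = np.kron(H, np.array([[1.0, 1.0], [1.0, -1.0]]))
    return H

def energy(W, s):
    """Classical Hopfield energy E(s) = -1/2 s^T W s."""
    return -0.5 * s @ W @ s

def retrieve(W, s, rng, sweeps=3):
    """Asynchronous sign updates; records the energy after every single update."""
    s = s.copy()
    energies = [energy(W, s)]
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1.0 if W[i] @ s >= 0 else -1.0
            energies.append(energy(W, s))
    return s, energies

rng = np.random.default_rng(0)
patterns = hadamard(6)[1:4]          # three orthogonal 64-dimensional memories (assumed toy data)
N = patterns.shape[1]
W = patterns.T @ patterns / N        # Hebbian, symmetric weights
np.fill_diagonal(W, 0.0)             # no self-connections

probe = patterns[0].copy()
flipped = rng.choice(N, size=6, replace=False)
probe[flipped] *= -1                 # corrupt 6 of the 64 units

recalled, energies = retrieve(W, probe, rng)
print("recovered stored pattern:", np.array_equal(recalled, patterns[0]))
print("energy never increased:",
      all(b <= a + 1e-9 for a, b in zip(energies, energies[1:])))
```

Because the weights are symmetric with a zero diagonal, each asynchronous update can only lower the energy or leave it unchanged. The state therefore slides into the nearest stored minimum and stays there, which is exactly why a fixed landscape cannot, by itself, produce a transition to the next memory in a sequence.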

This paper introduces the Exponential Dynamic Energy Network (EDEN), a novel architecture that revitalizes the energy paradigm by proposing that the energy landscape is not static but dynamic. EDEN reconciles the need for local stability with the necessity of global transitions, providing a unified framework for both static and sequential memory.
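The static, high-capacity half of this picture can be illustrated with the retrieval step commonly used in the dense associative memory (modern Hopfield) literature, which has the same form as softmax attention with the stored memories acting as both keys and values. This summary does not reproduce EDEN's own equations, so the sketch below is a generic illustration under that assumption. The names `dense_retrieve` and `beta` and the Hadamard toy patterns are hypothetical.

```python
import numpy as np

def hadamard(k):
    """Sylvester construction: a 2^k x 2^k matrix of +/-1 with orthogonal rows."""
    H = np.array([[1.0]])
    for _ in range(k):
        H = np.kron(H, np.array([[1.0, 1.0], [1.0, -1.0]]))
    return H

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def dense_retrieve(memories, query, beta=1.0):
    """One retrieval step: softmax(beta * K q) @ V with K = V = memories."""
    weights = softmax(beta * memories @ query)   # similarity of the query to each memory
    return weights @ memories, weights           # weighted readout of the memories

memories = hadamard(6)[1:9]      # eight orthogonal 64-dimensional memories (assumed toy data)
query = memories[2].copy()
query[:6] *= -1                  # corrupt the first 6 entries

out, weights = dense_retrieve(memories, query)
print("most attended memory:", int(np.argmax(weights)))
print("readout matches stored memory:", np.allclose(out, memories[2], atol=1e-6))
```

The exponential inside the softmax is what sharpens the competition between memories: the best-matching pattern receives nearly all of the weight, so a single step cleans up the corrupted query. How EDEN's slow population then reshapes this landscape over time is described in the paper and is not sketched here.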
