Group Representational Position Encoding

Unifying RoPE, ALiBi and FoX.

Authors: Yifan Zhang, Zixiang Chen, Yifeng Liu, Zhen Qin, Huizhuo Yuan, Kangping Xu, Yang Yuan, Quanquan Gu, Andrew Chi-Chih Yao

Paper: https://arxiv.org/abs/2512.07805

Code: https://github.com/model-architectures/GRAPE

TL;DR

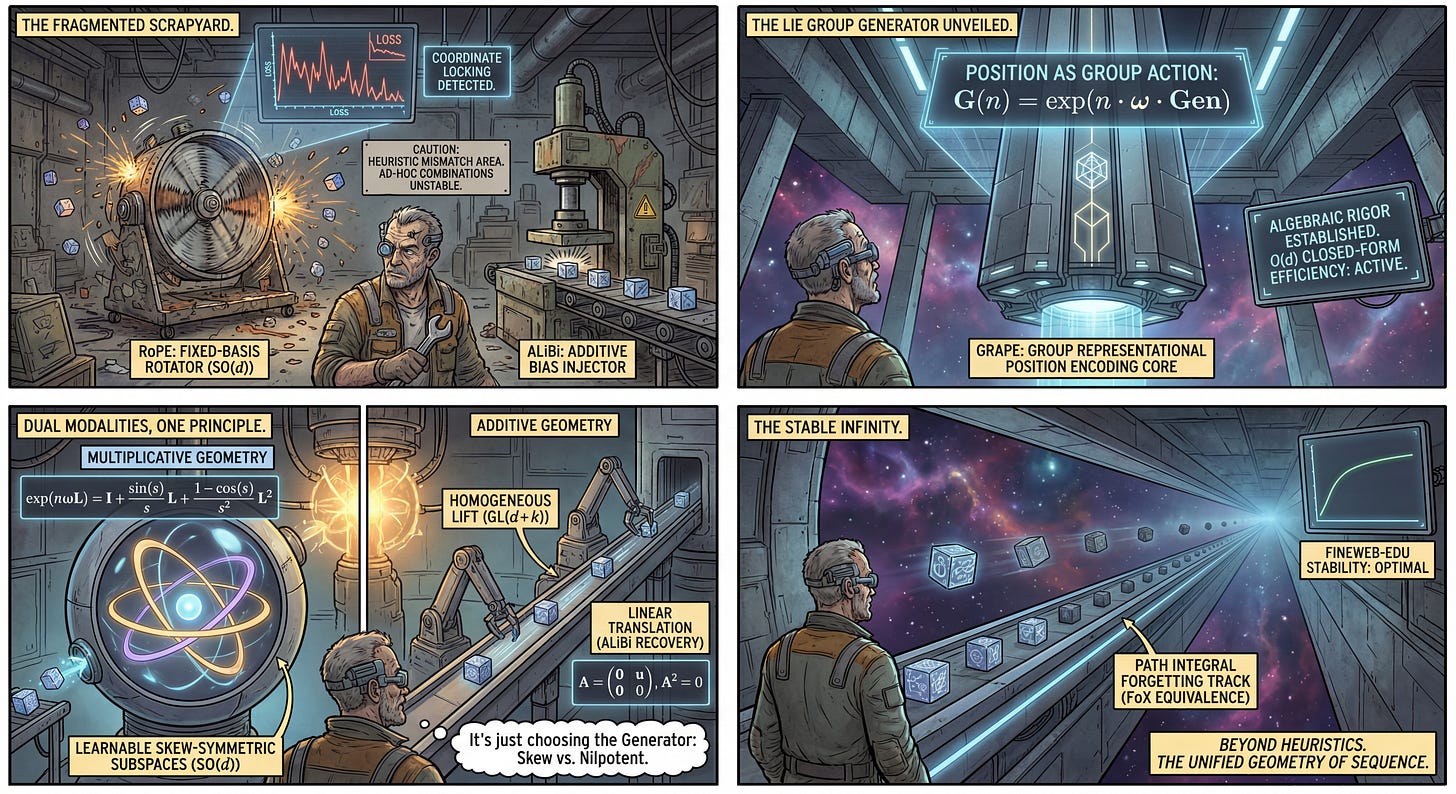

WHAT was done? The authors introduce GRAPE (Group Representational Position Encoding), a unified framework that derives positional encodings from group actions. By formalizing positions as elements of a Lie group acting on the token representation space, GRAPE unifies two distinct families: multiplicative rotations (recovering RoPE via SO(d)) and additive biases (recovering ALiBi and Forgetting Transformer via unipotent actions in GL(d+k)).

WHY it matters? This work moves positional encoding from heuristic design to rigorous algebraic structure. It demonstrates that widely used methods like RoPE and ALiBi are merely special cases of a broader generator formulation. Crucially, it introduces efficient closed-form matrix exponentials for learnable subspaces (allowing for non-commuting rotations) and proves that “forgetting” mechanisms in long-context modeling are mathematically equivalent to additive group actions, offering a principled path for designing next-generation context-aware architectures.

Details

The Geometry of Sequence Modeling

In the current landscape of Large Language Models, positional encoding (PE) suffers from a dichotomy of design. On one side, multiplicative mechanisms like RoPE treat position as a rotation in the complex plane, preserving norm and offering stability. On the other side, additive mechanisms like ALiBi inject linear biases directly into attention logits to improve length extrapolation. Recently, dynamic “forgetting” mechanisms like FoX have emerged to handle infinite contexts. The bottleneck is that these methods are often treated as distinct engineering heuristics rather than derivations of a single underlying principle. This fragmentation makes it difficult to design architectures that possess the benefits of both—specifically, the orthogonality of RoPE and the decay properties of additive biases—without relying on ad-hoc combinations.