Hypernetworks That Evolve Themselves

Authors: Joachim Winther Pedersen, Erwan Plantec, Eleni Nisioti, Marcello Barylli, Milton Montero, Kathrin Korte, Sebastian Risi

Paper: https://arxiv.org/abs/2512.16406

Code: https://github.com/Joachm/self-referential_GHNs (404 for now)

TL;DR

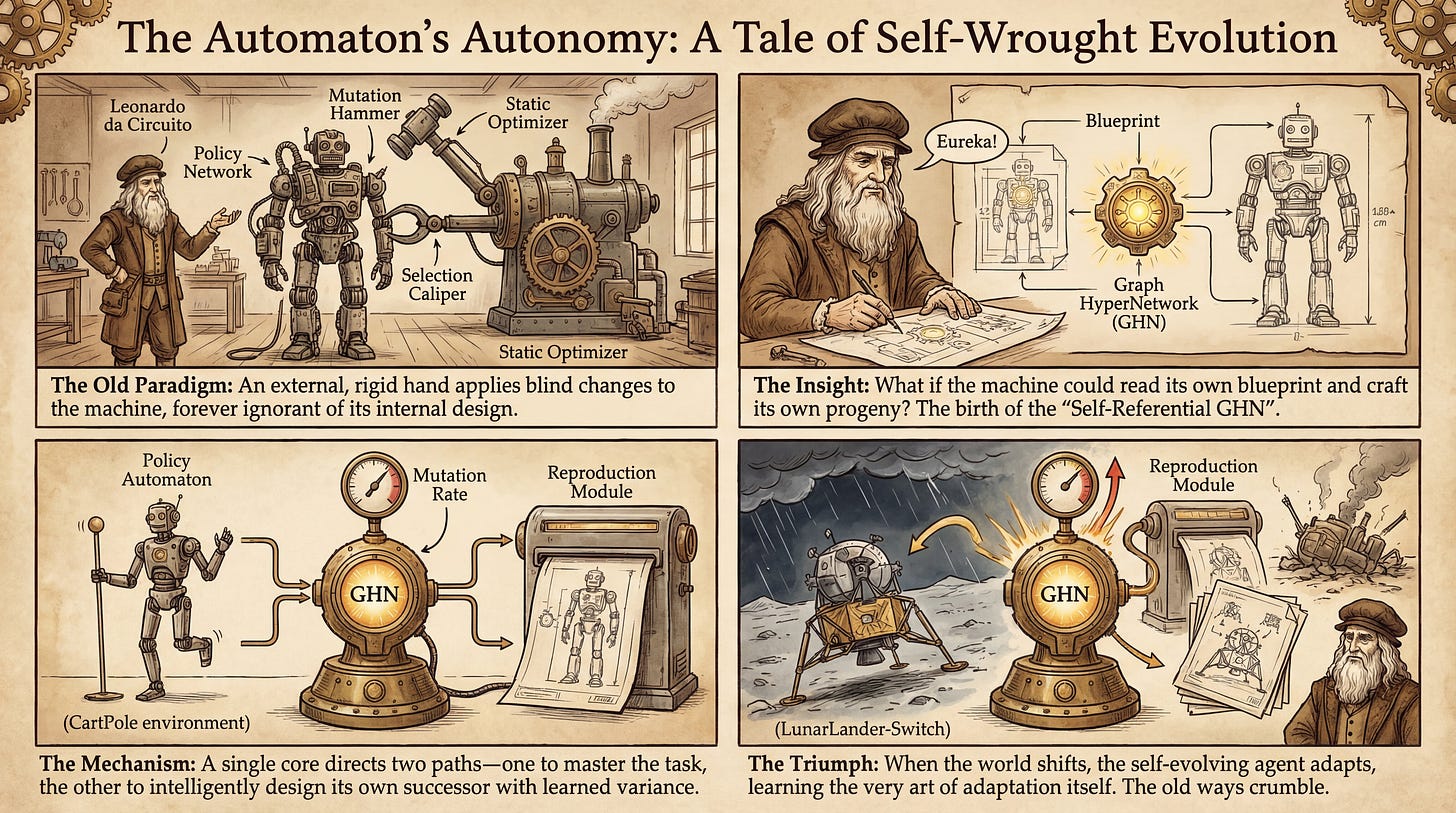

WHAT was done? The authors propose Self-Referential Graph HyperNetworks (GHNs), a class of neural networks capable of generating parameters not only for a policy network but also for their own offspring. By embedding a stochastic variation mechanism within the network architecture itself, the system internalizes the evolutionary operators (mutation and inheritance) that are typically handled by external algorithms.

WHY does it matter? This represents a structural shift from “optimizing a fixed model” to creating “models that optimize themselves.” The approach demonstrates superior adaptation in non-stationary environments (where rules change abruptly) compared to traditional evolution strategies such as CMA-ES or OpenES. It suggests that “evolvability” (the ability to explore the search space effectively) can be learned as a context-dependent trait rather than applied as a fixed heuristic.

Details

The Static Operator Bottleneck

In the standard paradigm of neuroevolution, there is a strict separation between the optimizer (the algorithm, e.g., a Genetic Algorithm or Evolution Strategy) and the optimizee (the neural network). The optimizer is usually a static, hard-coded procedure that applies Gaussian noise blindly to the weights of the optimizee. While effective for static tasks, this external loop lacks context; it does not “know” the structure of the network it is mutating, nor does it inherently understand when to explore aggressively or exploit conservatively. This limitation becomes glaring in open-ended or non-stationary environments where the fitness landscape shifts. When the environment changes, a standard optimizer needs time to re-adapt its covariance matrix or step size, and performance often collapses during that lag.
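To make the separation concrete, here is a minimal sketch of such an external loop, using only the Python standard library. It is an illustration under stated assumptions, not code from the paper: the quadratic `fitness`, the function names `mutate` and `evolve`, the fixed `sigma`, and the two toy targets standing in for an environment change are all hypothetical.

```python
import random

def fitness(weights, target):
    # Toy objective (assumption): negative squared distance to a target vector.
    # Higher is better; 0 is the optimum.
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

def mutate(weights, sigma, rng):
    # External, context-free operator: isotropic Gaussian noise with a fixed
    # sigma. It knows nothing about the structure of what it perturbs.
    return [w + rng.gauss(0.0, sigma) for w in weights]

def evolve(weights, target, sigma=0.1, pop_size=20, generations=50, seed=0):
    # The optimizer lives entirely outside the "network" (here, a weight list).
    rng = random.Random(seed)
    best = list(weights)
    for _ in range(generations):
        offspring = [mutate(best, sigma, rng) for _ in range(pop_size)]
        # Elitist selection: keep the parent unless some child is better.
        best = max(offspring + [best], key=lambda w: fitness(w, target))
    return best

start = [0.0, 0.0, 0.0]
target_a = [1.0, -1.0, 0.5]
target_b = [-2.0, 2.0, -1.0]  # stands in for an abrupt environment change

adapted = evolve(start, target_a)
print("fitness on A after adapting:", fitness(adapted, target_a))
print("fitness on B right after the shift:", fitness(adapted, target_b))

readapted = evolve(adapted, target_b)
print("fitness on B after re-adapting:", fitness(readapted, target_b))
```

Note that `sigma` is chosen once by the experimenter and never changes. Nothing in the weights being evolved can signal that the target has moved and that larger steps would now be useful.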

The authors argue that to achieve true open-ended learning, we must collapse the boundary between the machine and the machinery that builds it. By moving the variation-producing mechanism inside the neural substrate, the network gains the ability to modulate its own evolutionary trajectory. This concept builds upon previous work on Neural Quines and Self-Referential Weight Matrices, but leverages the architectural flexibility of Graph HyperNetworks (GHNs) to solve the scalability and fertility issues that plagued earlier attempts at self-replication.
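As a loose analogy for what "moving the variation mechanism inside" means, the sketch below uses classic self-adaptive mutation, where each individual carries and inherits its own mutation scale. This is explicitly not the paper's GHN mechanism, in which a hypernetwork generates the parameters of its offspring. It is a much simpler stand-in, and the `Individual` class, `reproduce`, `step`, the learning rate `tau`, and the toy target are all hypothetical.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Individual:
    weights: list
    log_sigma: float  # the individual's own mutation scale, passed to offspring

    def reproduce(self, rng, tau=0.5):
        # The variation operator is part of the individual: it first perturbs
        # its own mutation scale, then uses the new scale on the weights.
        child_log_sigma = self.log_sigma + tau * rng.gauss(0.0, 1.0)
        sigma = math.exp(child_log_sigma)
        child_weights = [w + rng.gauss(0.0, sigma) for w in self.weights]
        return Individual(child_weights, child_log_sigma)

def fitness(ind, target):
    # Toy objective (assumption): negative squared distance to the target.
    return -sum((w - t) ** 2 for w, t in zip(ind.weights, target))

def step(population, target, rng, children_per_parent=4):
    # Selection is still external here; only variation has been internalized.
    offspring = [p.reproduce(rng) for p in population
                 for _ in range(children_per_parent)]
    ranked = sorted(population + offspring,
                    key=lambda ind: fitness(ind, target), reverse=True)
    return ranked[:len(population)]

rng = random.Random(0)
population = [Individual([0.0, 0.0], math.log(0.1)) for _ in range(5)]
target = [3.0, -3.0]

initial_best = max(fitness(ind, target) for ind in population)
for _ in range(30):
    population = step(population, target, rng)
final_best = max(fitness(ind, target) for ind in population)

print("best fitness before:", initial_best)
print("best fitness after: ", final_best)
print("surviving mutation scales:",
      [round(math.exp(ind.log_sigma), 3) for ind in population])
```

Because `log_sigma` is inherited and selected along with the weights, lineages whose step sizes suit the current landscape tend to survive. That captures the core intuition in miniature, while the self-referential GHNs go much further by producing the offspring's parameters with the network itself.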