Learning Latent Action World Models In The Wild

Authors: Quentin Garrido, Tushar Nagarajan, Basile Terver, Nicolas Ballas, Yann LeCun, Michael Rabbat

Paper: https://arxiv.org/abs/2601.05230

TL;DR

WHAT was done? The authors successfully trained Latent Action Models (LAMs) on uncurated, in-the-wild video data (YouTube-Temporal-1B) without action labels. Crucially, they demonstrate that continuous latent spaces (regularized via sparsity or noise) significantly outperform the standard Vector Quantization (VQ) approaches used in prior work like Genie when dealing with complex, real-world scene dynamics.

WHY it matters? This work removes the reliance on massive action-labeled datasets or narrow simulation environments for training World Models. By training a lightweight “controller” to map real robot actions to these learned latent actions, the authors show that a model learned purely from YouTube videos can achieve planning performance on robotics tasks (DROID, RECON) comparable to models trained with ground-truth actions, effectively unlocking the internet as a training source for robotic control.

Details

The In-the-Wild Bottleneck

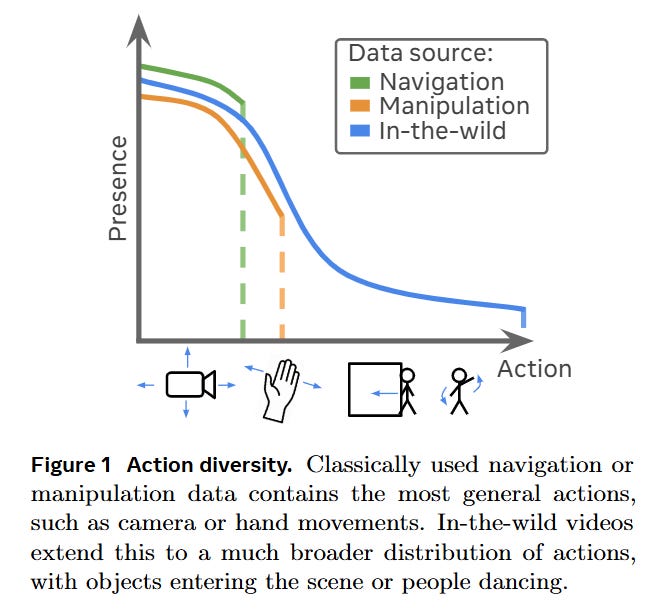

The current trajectory of World Model research faces a significant data bottleneck: while we have abundant video data, we lack corresponding action labels. Previous attempts to learn “latent actions” (inferring the action that caused a transition between frames) have largely been restricted to low-entropy environments like video games or fixed-arm robotics. When applied to “in-the-wild” video, the definition of an action becomes ambiguous. Is the “action” the camera moving, a person entering the frame, or leaves rustling in the wind?

The authors propose that the standard architectural solution to this ambiguity—discretizing the action space via Vector Quantization (VQ)—is insufficient for natural video. VQ bottlenecks tend to collapse detailed motion into generic “blobs” or fail to capture subtle dynamics. This paper challenges that orthodoxy, providing a rigorous empirical study showing that continuous latent actions, when properly constrained, provide the necessary expressivity to model the messy reality of open-world video while remaining controllable.