Mathematical Foundations of Geometric Deep Learning

Authors: Haitz Sáez de Ocáriz Borde and Michael Bronstein

Paper: https://arxiv.org/abs/2508.02723

TL;DR

WHAT was done? This paper provides a comprehensive and pedagogical review of the core mathematical concepts that form the bedrock of Geometric Deep Learning (GDL). It systematically navigates through algebraic structures (sets, groups), geometric and analytical tools (norms, metrics, inner products), vector calculus, topology, differential geometry (manifolds), functional analysis, spectral theory, and graph theory. Rather than introducing a new algorithm, the authors meticulously build the mathematical scaffolding required to understand how neural networks can be designed for non-Euclidean data such as graphs and manifolds, the program laid out in the Geometric Deep Learning proto-book co-authored by Bronstein (https://arxiv.org/abs/2104.13478).

WHY it matters? The work bridges a crucial knowledge gap often present in standard computer science curricula. It presents GDL not as a collection of disparate architectures, but as a unified field grounded in the principles of symmetry, invariance, and equivariance. For researchers and practitioners, this foundational understanding is critical for moving AI beyond simple grid-like data (images, text) to tackle complex, structured problems in science, engineering, and biology. By formalizing the "geometric priors" that make models like GNNs and Transformers effective, this work provides the language to create more robust, data-efficient, and generalizable models that respect the intrinsic structure of the data they process.

Details

Introduction: From a "Zoo" of Architectures to a Unified Framework

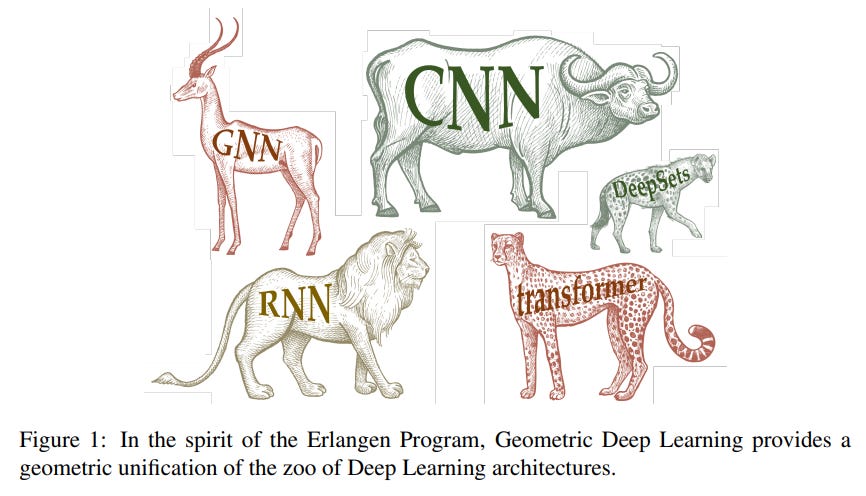

Over 150 years ago, mathematician Felix Klein proposed a revolutionary idea: geometry isn't just about points and lines, but the study of symmetries. The paper "Mathematical Foundations of Geometric Deep Learning" masterfully revives this Erlangen Program for the modern era of AI, recasting the "zoo" of neural network architectures as a unified field governed by the universal language of symmetry. While modern AI has achieved remarkable success on grid-like data like images and text, a vast amount of real-world data—from social networks and molecular structures to 3D meshes—does not conform to this rigid structure. This has led to the rise of Geometric Deep Learning (GDL), a field dedicated to creating neural networks for these complex, non-Euclidean domains.

This paper serves as a masterclass in the principles underlying this paradigm shift. It is not a typical research paper presenting a novel algorithm, but rather a meticulously crafted educational resource. The authors argue that many successful deep learning architectures can be seen as specific instances of a more general, geometric framework (Figure 1), and by understanding the underlying mathematical language, we can move from empirical design to a more principled, unified approach.

The Core Philosophy: Symmetry as an Inductive Bias

At the heart of GDL lies the idea that knowledge of data symmetries should be embedded directly into the model architecture. This is achieved through the concepts of invariance and equivariance.

An invariant function's output is unaffected by transformations of its input: f(g·x) = f(x) for every symmetry transformation g. For example, a classifier should identify an object regardless of its position in the image.

An equivariant function's output transforms in a predictable way as the input is transformed: f(g·x) = g·f(x). For instance, in image segmentation, rotating the input image should produce a correspondingly rotated segmentation map.
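To make the two definitions concrete, here is a minimal Python sketch. It assumes the simplest possible symmetry group, cyclic shifts of a 1-D periodic signal (a stand-in for translating an image), and checks that global sum pooling is shift-invariant while circular convolution is shift-equivariant. The helper names (`shift`, `circular_conv`, `global_sum`) are illustrative and not taken from the paper.

```python
# Sketch: shift invariance vs. shift equivariance for 1-D periodic signals.
# Assumption: the symmetry group is cyclic shifts, acting on a list by rotation.

def shift(x, k):
    """Group action g·x: cyclically shift the signal x by k positions."""
    n = len(x)
    return [x[(i - k) % n] for i in range(n)]

def circular_conv(x, w):
    """Circular convolution (cross-correlation form) with periodic boundary."""
    n = len(x)
    return [sum(w[j] * x[(i + j) % n] for j in range(len(w))) for i in range(n)]

def global_sum(x):
    """Global sum pooling, a typical invariant readout."""
    return sum(x)

x = [1.0, 4.0, 2.0, 0.0, 3.0]
w = [0.5, -1.0, 0.25]
k = 2

# Invariance: f(g·x) == f(x)
invariant = global_sum(shift(x, k)) == global_sum(x)
# Equivariance: f(g·x) == g·f(x)
equivariant = circular_conv(shift(x, k), w) == shift(circular_conv(x, w), k)
print(invariant, equivariant)  # True True
```

Replacing `global_sum` with a position-dependent readout such as `x[0]` breaks the invariance check, which is exactly the kind of symmetry violation an invariant architecture rules out by construction.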

By designing layers to respect these symmetries, we impose strong inductive biases. This restricts the model's hypothesis space, leading to more efficient learning, especially in data-scarce regimes, and enhancing generalization and robustness.

A Tour of the Mathematical Landscape

The paper takes the reader on a systematic journey, building the necessary mathematical toolkit piece by piece.

1. Algebraic Structures: The Language of Symmetry

The journey begins with fundamental algebraic structures. While concepts like sets and vector spaces are foundational, the crucial element for GDL is group theory.

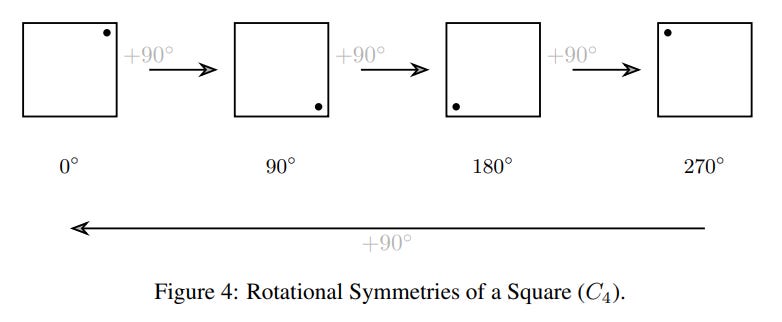

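A group formalizes the notion of transformations (like rotations, as shown in Figure 4 and Figure 5): it is a set of elements with a composition operation that is closed and associative, contains an identity element, and gives every element an inverse. This structure provides the mathematical language to define symmetries.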
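The group axioms can be checked mechanically on a small example. The sketch below assumes the rotation group of a square (rotations by multiples of 90 degrees), encoded as integers modulo 4; this encoding and the helper `is_group` are illustrative choices, not the paper's notation.

```python
from itertools import product

# Assumption: element k in {0, 1, 2, 3} is a rotation of a square by k * 90 degrees.
ELEMENTS = [0, 1, 2, 3]

def compose(a, b):
    """Composing two rotations adds their angles (modulo a full turn)."""
    return (a + b) % 4

def act(k, vertex):
    """Action on the square's vertices, labelled 0..3 counter-clockwise."""
    return (vertex + k) % 4

def is_group(elements, op):
    """Check closure, associativity, a unique identity, and inverses by brute force."""
    closure = all(op(a, b) in elements for a, b in product(elements, repeat=2))
    assoc = all(op(op(a, b), c) == op(a, op(b, c))
                for a, b, c in product(elements, repeat=3))
    identities = [e for e in elements
                  if all(op(e, a) == a and op(a, e) == a for a in elements)]
    if not (closure and assoc and len(identities) == 1):
        return False
    e = identities[0]
    return all(any(op(a, b) == e and op(b, a) == e for b in elements) for a in elements)

print(is_group(ELEMENTS, compose))                   # True
print(is_group(ELEMENTS, lambda a, b: (a * b) % 4))  # False: 0 and 2 have no inverse
```

The same set with a different operation fails the inverse axiom, a reminder that a group is the pair (set, operation), not the set alone.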

2. Geometric and Analytical Structures: Introducing Measurement

To move from abstract transformations to tangible geometry, we need tools for measurement. The authors introduce:

Norms: functions ||·|| that measure the "size" of a vector, fundamental to regularization techniques like weight decay.

Metrics: functions d(u, v) that measure the "distance" between two elements, such as the shortest-path distance on a graph.

Inner Products: operations ⟨u, v⟩ that generalize the dot product, enabling the measurement of "angles" and orthogonality. This concept underlies cosine similarity, and the dot product is also the scoring function in the scaled dot-product attention at the core of the Transformer architecture, introduced in the groundbreaking Attention Is All You Need paper.
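A minimal sketch of the three structures on plain Python lists, assuming Euclidean vectors and an unweighted graph stored as an adjacency dictionary. The `attention_weights` helper follows the scaled dot-product form for a single query, without learned projections or value vectors, so it illustrates only the inner-product scoring step rather than a full attention layer.

```python
import math
from collections import deque

def inner(u, v):
    """Euclidean inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))

def l2_norm(u):
    """Norm induced by the inner product: ||u|| = sqrt(<u, u>)."""
    return math.sqrt(inner(u, u))

def cosine_similarity(u, v):
    """Cosine of the angle between u and v."""
    return inner(u, v) / (l2_norm(u) * l2_norm(v))

def attention_weights(q, keys):
    """softmax(<q, k_j> / sqrt(d)) over the keys, for a single query q."""
    d = len(q)
    scores = [inner(q, k) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def shortest_path_distance(adj, source, target):
    """Graph metric d(u, v): edges on a shortest path, via BFS (unweighted graph)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return dist[node]
        for nb in adj[node]:
            if nb not in dist:
                dist[nb] = dist[node] + 1
                queue.append(nb)
    return math.inf  # target unreachable

u, v = [1.0, 0.0], [0.0, 2.0]
print(inner(u, v), l2_norm(v), cosine_similarity(u, [3.0, 0.0]))  # 0.0 2.0 1.0

path_graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
print(shortest_path_distance(path_graph, 0, 3))  # 3
print(attention_weights([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```

Dividing by sqrt(d) keeps the scores from growing with the vector dimension before the softmax; cosine similarity instead normalizes by both vector norms.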

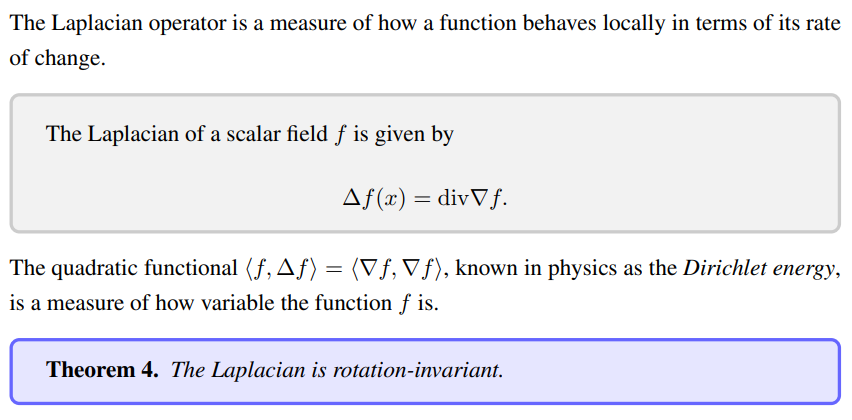

3. Vector Calculus: Understanding Change and Optimization

Vector calculus provides the tools to analyze how functions change locally. This is fundamental to deep learning's optimization engine, gradient descent, which uses the gradient ∇L(w) of a loss function L to iteratively update the model parameters: w_{t+1} = w_t - η∇L(w_t), where η is the learning rate. The paper also introduces key differential operators like the Laplacian (Δf = div∇f), which is shown to be a rotation-invariant operator (Theorem 4): rotating a function and then applying the Laplacian gives the same result as applying the Laplacian and then rotating. This makes it a natural choice for defining operations on geometric domains.
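Both ideas in this paragraph can be sketched in a few lines of Python. The first part runs the update rule on a hypothetical quadratic loss whose minimizer is known. The second part uses the standard 5-point finite-difference Laplacian on a periodic grid, a discrete stand-in for Δ, and checks that it commutes with a 90-degree rotation of the grid. This discrete check only covers rotations that map the grid onto itself, not arbitrary angles as in the continuous setting.

```python
# Part 1: gradient descent, w_{t+1} = w_t - eta * grad L(w_t).
# Hypothetical loss L(w) = (w0 - 3)^2 + 2 * (w1 + 1)^2, minimized at (3, -1).

def grad_loss(w):
    """Analytic gradient of the hypothetical quadratic loss."""
    return [2.0 * (w[0] - 3.0), 4.0 * (w[1] + 1.0)]

def gradient_descent(w, eta=0.1, steps=200):
    for _ in range(steps):
        g = grad_loss(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w

w_star = gradient_descent([0.0, 0.0])
print(w_star)  # close to [3.0, -1.0]

# Part 2: the discrete 5-point Laplacian on a periodic n x n grid commutes with
# a 90-degree rotation of the grid (a discrete analogue of rotation invariance).

def laplacian(f):
    n = len(f)
    return [[f[(i + 1) % n][j] + f[(i - 1) % n][j] + f[i][(j + 1) % n]
             + f[i][(j - 1) % n] - 4 * f[i][j] for j in range(n)] for i in range(n)]

def rot90(f):
    """Rotate a square grid by 90 degrees: new[i][j] = old[n - 1 - j][i]."""
    n = len(f)
    return [[f[n - 1 - j][i] for j in range(n)] for i in range(n)]

# Integer-valued test signal, so the comparison below is exact.
f = [[(3 * i + j * j) % 7 for j in range(5)] for i in range(5)]
print(laplacian(rot90(f)) == rot90(laplacian(f)))  # True
```

The learning rate matters: with this loss, any eta above 0.5 makes the second coordinate diverge, which is why eta is exposed as a parameter.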

4. Topology and Differential Geometry: The World of Manifolds

This section is central to GDL's ability to handle curved spaces.

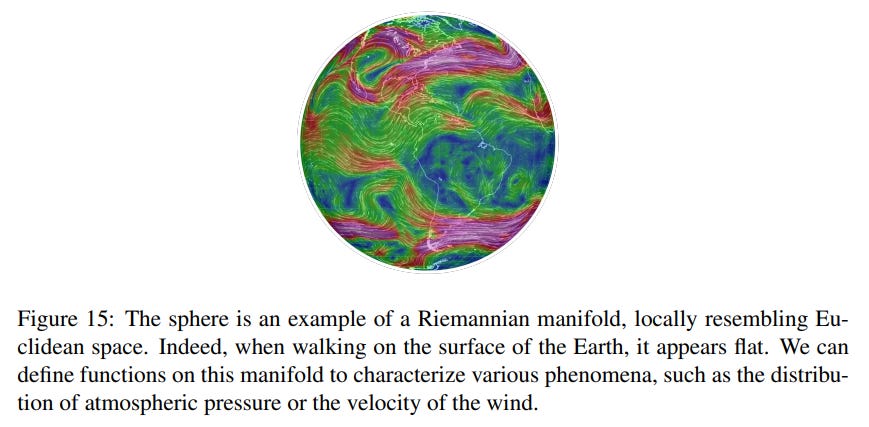

A manifold is a space that may be curved or have a complicated global shape but locally resembles flat Euclidean space (Figures 13, 14, 15).

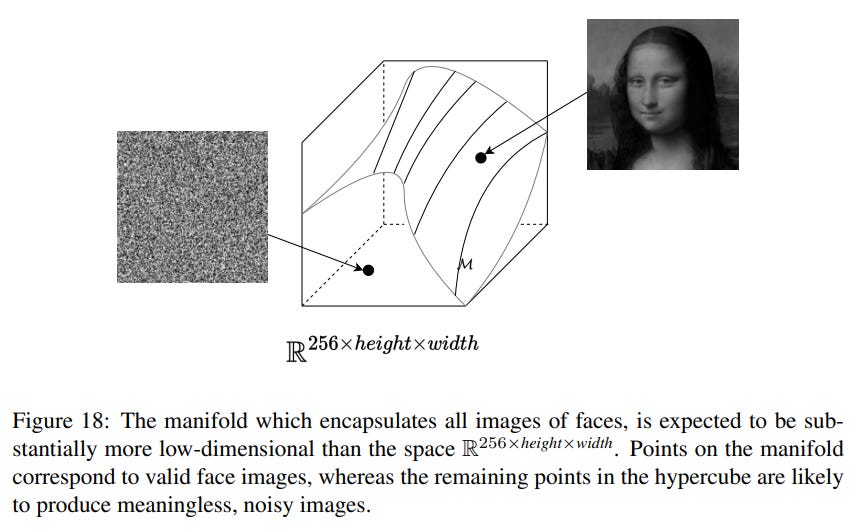

The Manifold Hypothesis is a key assumption in machine learning, suggesting that high-dimensional data often lies on or near a low-dimensional manifold embedded in the ambient space (Figure 18).

Traversing this manifold allows for meaningful interpolation, unlike linear interpolation in the ambient pixel space (Figure 19).
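A toy illustration of the interpolation point, assuming the "data manifold" is simply the unit sphere in three dimensions rather than a learned manifold of images. The straight-line midpoint of two points on the sphere falls inside the ball, off the manifold, while geodesic (great-circle, or "slerp") interpolation stays on it.

```python
import math

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def lerp(p, q, t):
    """Straight-line interpolation in the ambient space."""
    return [(1 - t) * a + t * b for a, b in zip(p, q)]

def slerp(p, q, t):
    """Geodesic (great-circle) interpolation between unit vectors p and q."""
    cos_theta = max(-1.0, min(1.0, sum(a * b for a, b in zip(p, q))))
    theta = math.acos(cos_theta)
    if theta < 1e-12:
        return list(p)  # p and q coincide
    if math.pi - theta < 1e-12:
        raise ValueError("antipodal points: the geodesic is not unique")
    s = math.sin(theta)
    return [(math.sin((1 - t) * theta) * a + math.sin(t * theta) * b) / s
            for a, b in zip(p, q)]

p = [1.0, 0.0, 0.0]
q = [0.0, 1.0, 0.0]
mid_linear = lerp(p, q, 0.5)
mid_geodesic = slerp(p, q, 0.5)
print(round(norm(mid_linear), 4), round(norm(mid_geodesic), 4))  # 0.7071 1.0
```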

Tools from differential geometry, like geodesics (locally shortest paths on a manifold, Figure 12) and the exponential map (Figure 16), which sends a tangent vector to the point reached by following the geodesic in that direction, allow us to perform calculations in locally flat tangent spaces and map the results back onto the manifold.
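On the unit sphere the exponential and logarithmic maps have closed forms, which makes it a convenient toy manifold. The sketch below assumes that setting: `log_map` lifts a point into the tangent space at `p`, ordinary vector arithmetic happens there, and `exp_map` maps the result back onto the sphere. The function names are illustrative.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def exp_map(p, v):
    """Exponential map at p on the unit sphere; v must be tangent (orthogonal to p)."""
    nv = norm(v)
    if nv < 1e-12:
        return list(p)
    return [math.cos(nv) * a + math.sin(nv) * b / nv for a, b in zip(p, v)]

def log_map(p, q):
    """Inverse of exp_map for q not antipodal to p: a tangent vector at p pointing to q."""
    cos_t = max(-1.0, min(1.0, dot(p, q)))
    theta = math.acos(cos_t)  # geodesic distance from p to q
    if theta < 1e-12:
        return [0.0] * len(p)
    u = [b - cos_t * a for a, b in zip(p, q)]  # part of q orthogonal to p
    nu = norm(u)
    if nu < 1e-12:
        raise ValueError("antipodal points: log map is undefined")
    return [theta * c / nu for c in u]

def geodesic_distance(p, q):
    return math.acos(max(-1.0, min(1.0, dot(p, q))))

p = [0.0, 0.0, 1.0]  # "north pole"
q = [1.0, 0.0, 0.0]  # a point on the equator
v = log_map(p, q)    # lift q into the flat tangent space at p
midpoint = exp_map(p, [0.5 * c for c in v])  # scale in tangent space, map back
print(geodesic_distance(p, q), norm(v))  # both approximately pi / 2
```

Halving the tangent vector before applying `exp_map` lands on the geodesic midpoint between `p` and `q`, the same point a great-circle interpolation would produce.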

5. Functional Analysis: The Infinite-Dimensional Playground

To analyze functions and signals on the continuous manifolds or large graphs described previously, we need to go beyond standard finite-dimensional linear algebra. Functional analysis extends the concepts of vector spaces to infinite-dimensional function spaces. The cornerstone here is the Hilbert space: a vector space equipped with an inner product that is also "complete" (it has no "holes") with respect to the norm induced by that inner product. The function spaces of interest here, such as the space L² of square-integrable functions, are infinite-dimensional Hilbert spaces. This provides a powerful framework where functions can be treated as vectors. This perspective allows us to generalize familiar geometric notions like distance, angle, and orthogonality to functions, providing the essential foundation for the spectral methods that follow.
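To see functions behaving like vectors, the sketch below approximates the inner product of L²([0, 2π]), ⟨f, g⟩ = ∫ f(x)g(x) dx, with a midpoint-rule sum. The discretization is an assumption of the example: it replaces the infinite-dimensional space with a finite sample, which is enough to show that sin(x) and sin(2x) are orthogonal and that ||sin||² = π.

```python
import math

def l2_inner(f, g, a, b, n=10_000):
    """Midpoint-rule approximation of <f, g> = integral from a to b of f(x) g(x) dx."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) * g(a + (k + 0.5) * h) for k in range(n))

def l2_norm(f, a, b):
    """Norm induced by the inner product: ||f|| = sqrt(<f, f>)."""
    return math.sqrt(l2_inner(f, f, a, b))

def sin1(x):
    return math.sin(x)

def sin2(x):
    return math.sin(2 * x)

two_pi = 2 * math.pi
print(l2_inner(sin1, sin2, 0.0, two_pi))  # approximately 0: orthogonal functions
print(l2_inner(sin1, sin1, 0.0, two_pi))  # approximately pi
print(l2_norm(sin1, 0.0, two_pi))         # approximately sqrt(pi)
```

The orthogonality of the sines is the same fact that makes Fourier analysis work, and it is what the spectral section generalizes to graphs.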

6. Spectral Theory and Graph Theory: GDL in Practice

The final sections connect these abstract concepts to practical applications on graphs.

Spectral theory generalizes Fourier analysis to non-Euclidean domains. Think of the eigenvectors of the graph Laplacian matrix L = D - A, where D is the diagonal degree matrix and A is the adjacency matrix, as the fundamental 'vibrational modes' of the graph. The eigenvectors with low eigenvalues (low frequencies) correspond to smooth signals that vary slowly across the graph, like the fundamental pitch of a guitar string. Those with high eigenvalues (high frequencies) represent signals that oscillate rapidly between neighboring nodes, akin to the string's higher-pitched overtones. This perspective transforms graph convolution from a black-box operation into a principled form of frequency-based filtering.

The GNN 'Magic Recipe': The paper culminates by presenting the elegant and powerful message-passing framework, the engine behind most Graph Neural Networks (GNNs), a model family first formalized in works like The Graph Neural Network Model. The core idea is simple: each node 'gathers' information from its neighbors and uses it to update its own features. The general recipe for a GNN layer l can be written as:

$$x_i^{(l+1)} = \phi\left(x_i^{(l)}, \bigoplus_{j \in \mathcal{N}(i)} \psi\left(x_i^{(l)}, x_j^{(l)}\right)\right)$$

where N(i) is the set of neighbors of node i, ψ computes a message from each neighbor, ⊕ is a permutation-invariant aggregator (such as sum, mean, or max), and φ updates the node's features.
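Both halves of this section can be sketched on a toy graph in plain Python. The first part builds L = D - A and uses the Rayleigh quotient xᵀLx / xᵀx, which equals the eigenvalue when x is an eigenvector, as a stand-in for "frequency" without computing a full eigendecomposition. The second part implements one message-passing layer with sum as ⊕ and checks permutation equivariance. The graph and the particular choices of ψ and φ are hypothetical.

```python
# Toy graph (hypothetical): path 0-1-2-3 plus the chord 1-3.
EDGES = [(0, 1), (1, 2), (2, 3), (1, 3)]
N = 4

def adjacency(n, edges):
    """Symmetric 0/1 adjacency matrix A of an undirected graph."""
    A = [[0] * n for _ in range(n)]
    for i, j in edges:
        A[i][j] = A[j][i] = 1
    return A

def graph_laplacian(A):
    """Combinatorial Laplacian L = D - A, with D the diagonal degree matrix."""
    n = len(A)
    return [[(sum(A[i]) if i == j else 0) - A[i][j] for j in range(n)] for i in range(n)]

def quadratic_form(L, x):
    """x^T L x, which equals the sum over edges of (x_i - x_j)^2."""
    n = len(x)
    return sum(x[i] * L[i][j] * x[j] for i in range(n) for j in range(n))

def rayleigh(L, x):
    """x^T L x / x^T x: small for smooth signals, large for oscillating ones."""
    return quadratic_form(L, x) / sum(v * v for v in x)

def neighbors(A, i):
    return [j for j in range(len(A)) if A[i][j]]

def psi(xi, xj):
    """Message function (hypothetical choice): feature difference."""
    return xj - xi

def phi(xi, aggregated):
    """Update function (hypothetical choice): residual update with step 0.5."""
    return xi + 0.5 * aggregated

def mp_layer(A, x):
    """One message-passing layer with sum as the permutation-invariant aggregator."""
    return [phi(x[i], sum(psi(x[i], x[j]) for j in neighbors(A, i))) for i in range(len(x))]

A = adjacency(N, EDGES)
L = graph_laplacian(A)
x_const, x_smooth, x_osc = [1, 1, 1, 1], [0, 1, 2, 3], [1, -1, 1, -1]
print(rayleigh(L, x_const), rayleigh(L, x_smooth), rayleigh(L, x_osc))  # 0.0 0.5 3.0

# Permutation equivariance: relabel node k as old node perm[k].
perm = [2, 0, 3, 1]
A_perm = [[A[perm[k]][perm[l]] for l in range(N)] for k in range(N)]
x = [3, -1, 4, 2]
x_perm = [x[perm[k]] for k in range(N)]
out, out_perm = mp_layer(A, x), mp_layer(A_perm, x_perm)
print(all(out_perm[k] == out[perm[k]] for k in range(N)))  # True
```

With these particular choices the layer computes x - 0.5·Lx, an explicit step of diffusion (the heat equation) on the graph, which ties this message-passing layer back to the Laplacian from the spectral view.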

Broader Implications for AI

This foundational work has profound implications. By providing a rigorous mathematical framework, it empowers researchers to:

Design Models for Scientific Discovery: Imagine designing in a computer a novel protein that binds tightly to a viral target, or uncovering physical laws by letting a GNN analyze the geometric patterns in particle collision data. This is the kind of future that a principled GDL framework aims to unlock.

Enhance Generative AI: Learning generative models directly on the data manifold can produce more realistic and structurally valid outputs, from 3D assets to protein structures.

Build More Trustworthy AI: By baking symmetries like invariance and equivariance into the architecture, GDL creates models that are provably robust to certain transformations, offering stronger guarantees than data augmentation alone.

Limitations and Final Assessment

The paper acknowledges that in practice, the assumptions of GDL are often idealizations. The "manifold hypothesis" is used loosely in ML, and real-world data may not conform to a perfectly smooth manifold. Similarly, many GNNs implicitly assume homophily (connected nodes are similar), which limits their effectiveness on certain graphs.

Despite this, the paper's contribution is invaluable. It is an exceptionally well-written, clear, and comprehensive guide that connects abstract mathematics to practical AI. While it doesn't introduce a new state-of-the-art model, it provides something arguably more important: a solid intellectual foundation for the next generation of AI research. By making the sophisticated mathematics of Geometric Deep Learning accessible and showing how it unifies seemingly disparate architectures, it is an essential read for any student or researcher looking to understand the principles driving deep learning on structured data.