Memorization Dynamics in Knowledge Distillation for Language Models

Authors: Jaydeep Borkar, Karan Chadha, Niloofar Mireshghallah, Yuchen Zhang, Irina-Elena Veliche, Archi Mitra, David A. Smith, Zheng Xu, Diego Garcia-Olano

Affiliation: Meta Superintelligence Labs

Paper: https://arxiv.org/abs/2601.15394

TL;DR

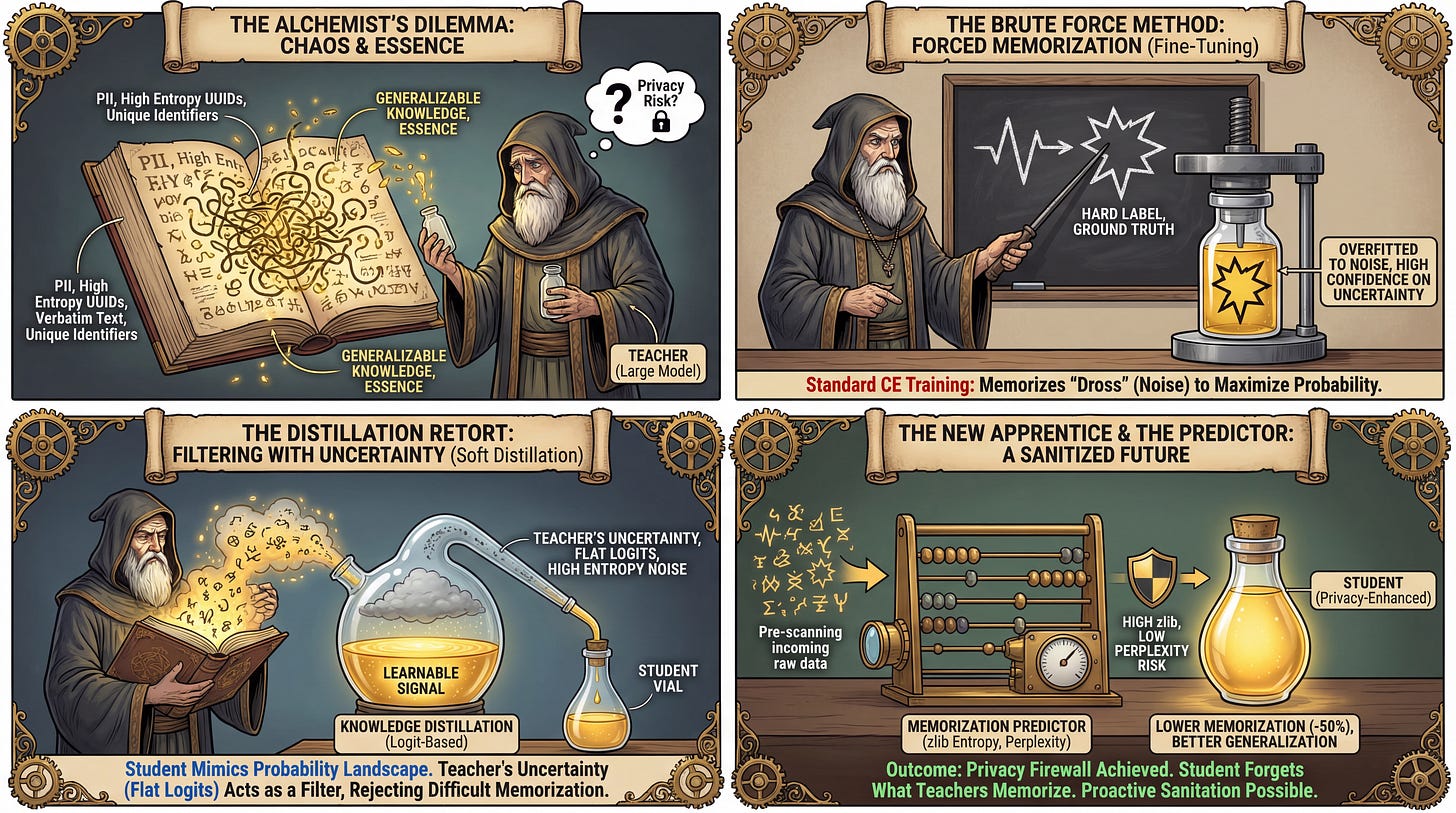

WHAT was done? The authors systematically investigated training data memorization in Large Language Models (LLMs) trained via Knowledge Distillation (KD). By comparing distilled “Student” models against independently fine-tuned “Baseline” models and their “Teachers” (using Pythia, OLMo-2, and Qwen-3 families), they discovered that distillation reduces memorization of training data by over 50%.

WHY it matters? This overturns the assumption that student models inevitably inherit the privacy vulnerabilities of their teachers. The research demonstrates that KD acts as a regularizer that selectively filters out high-entropy “noise” (difficult-to-learn examples) while retaining generalizable knowledge. Furthermore, the authors show that memorization is highly predictable using pre-training metrics like zlib entropy, allowing for proactive data sanitation.

Details

The Privacy Bottleneck in Model Compression

As we race to compress massive reasoning models into efficient edge-deployable versions, a critical question arises regarding data leakage. We know that large models—the “Teachers”—have high capacities to memorize unique identifiers, personally identifiable information (PII), and verbatim text from their training corpora. When we use these Teachers to train smaller “Students” via Knowledge Distillation (KD), the prevailing fear is that the Student will inherit these privacy violations. The core conflict addressed by this paper is whether KD amplifies privacy risks by forcing a small model to parrot a large one, or if the compression process itself alters the memorization dynamics. Previous works have established that standard fine-tuning leads to significant memorization; this paper isolates the “Delta” introduced specifically by the distillation objective compared to standard Cross-Entropy (CE) training.