Modeling Language as a Sequence of Thoughts

Authors: Nasim Borazjanizadeh, James L. McClelland

Paper: https://arxiv.org/abs/2512.25026

TL;DR

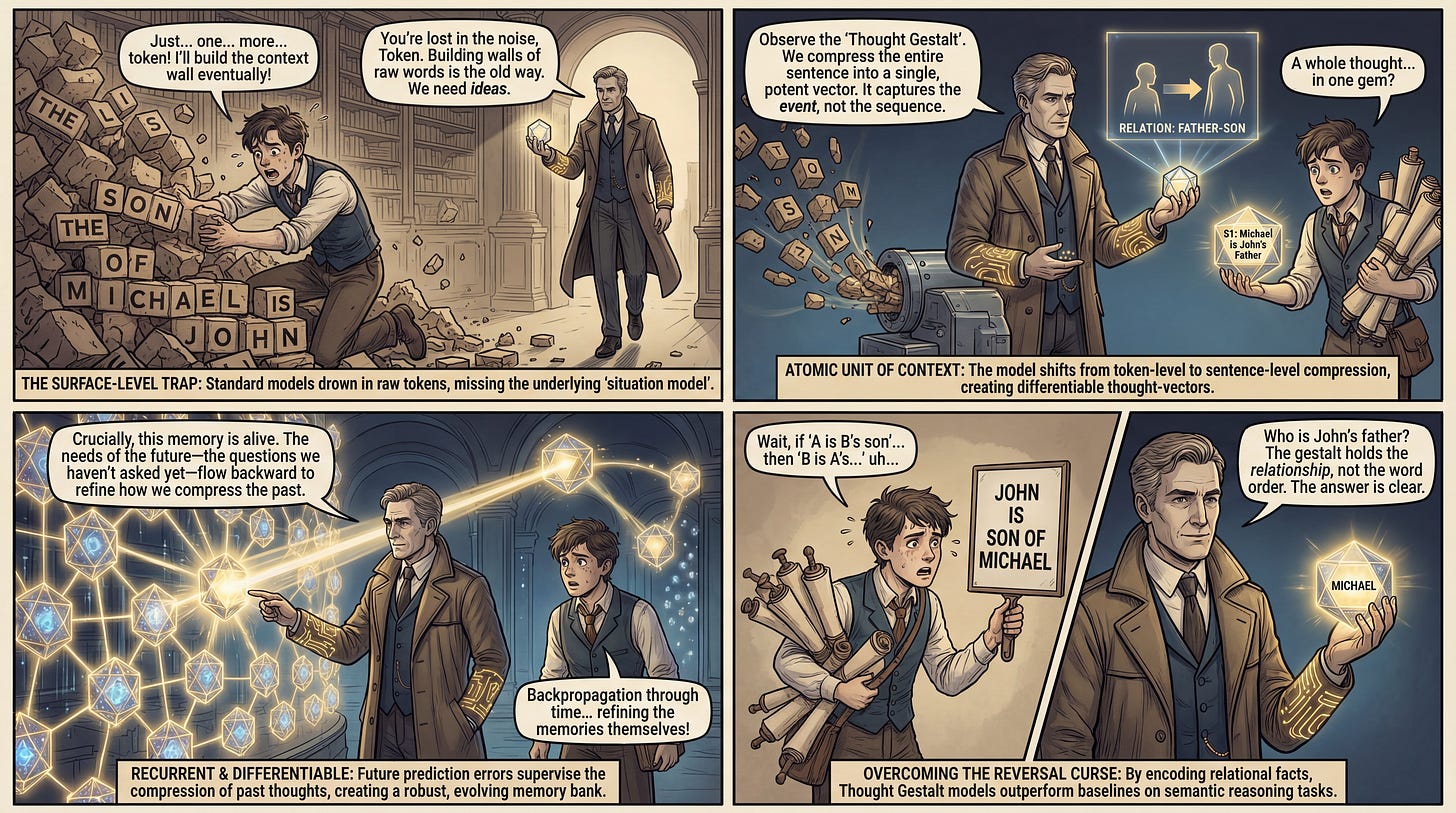

WHAT was done? The authors introduce the Thought Gestalt (TG) model, a recurrent Transformer architecture that processes text one sentence at a time. Instead of maintaining a full history of past tokens (KV-cache), TG compresses each processed sentence into a single vector representation—a “gestalt”—which is stored in a differentiable memory. Crucially, the model is trained end-to-end; gradients from future token predictions flow backward through the memory to optimize the parameters that generated earlier sentence representations.

WHY it matters? This approach challenges the dominance of the static context window by demonstrating that “event-level” recurrence can be more data-efficient than raw token attention. The authors show that TG outperforms GPT-2 baselines in data scaling laws (requiring ~5-8% less data for matched perplexity) and significantly mitigates the Reversal Curse (where models fail to infer B→A after learning A→B), suggesting that compressing context into latent “thoughts” creates more robust semantic representations than surface-level token statistics.

Details

The Surface-Level Statistical Trap

The prevailing paradigm in Large Language Models (LLMs) treats language comprehension as the modeling of a flat sequence of tokens, optimizing the conditional probability P(wt∣w<t). While effective, this approach suffers from significant theoretical and practical bottlenecks. Cognitive science suggests that humans do not store verbatim transcripts of past context; rather, we compress linguistic inputs into “situation models”—mental representations of events, entities, and relations. Standard Transformers, by contrast, rely on “contextualization” via attention over specific token positions. This reliance leads to phenomena like the Reversal Curse, where a model trained on “The son of Michael is John” fails to retrieve “John” when queried “The father of John is...”. The model learns the surface order, not the underlying relational fact. Furthermore, the inefficiency of learning world models solely through next-token prediction on raw text requires training on trillions of tokens, orders of magnitude beyond human learning curves.