Next-Embedding Prediction Makes Strong Vision Learners

Next-Embedding Predictive Autoregression (NEPA)

Authors: Sihan Xu, Ziqiao Ma, Wenhao Chai, Xuweiyi Chen, Weiyang Jin, Joyce Chai, Saining Xie, Stella X. Yu

Paper: https://arxiv.org/abs/2512.16922

Code: https://github.com/sihanxu/nepa

Model: https://huggingface.co/collections/SixAILab/nepa

Site: https://sihanxu.github.io/nepa

TL;DR

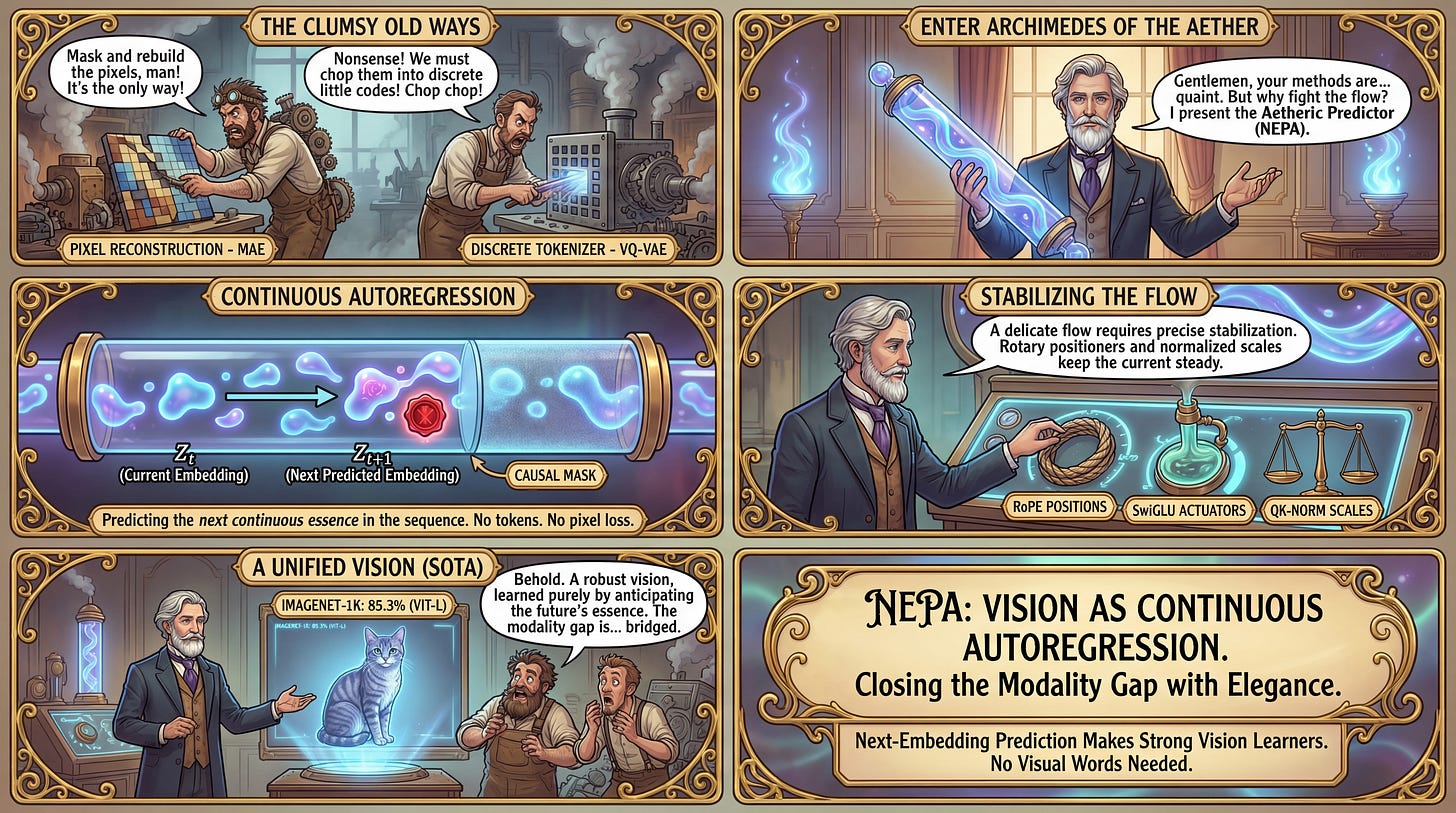

WHAT was done? The authors introduce NEPA (Next-Embedding Predictive Autoregression), a self-supervised learning framework that trains Vision Transformers (ViT) by predicting the embedding of the next image patch conditioned on previous patches. Unlike standard generative approaches, NEPA operates entirely in a continuous latent space without discrete tokenizers (like VQ-VAE) or pixel-level reconstruction (like MAE).

WHY it matters? This approach brings the training objective of vision models in line with that of language models. By demonstrating that a pure “next-token prediction” objective works on continuous visual representations without auxiliary momentum encoders or contrastive negative pairs, NEPA offers a scalable, simplified paradigm. It reports strong results (85.3% Top-1 on ImageNet-1K with ViT-L), suggesting that causal modeling is sufficient for learning robust visual semantics.

Details

The Modality Gap in Pretraining

The current landscape of self-supervised vision is dominated by a dichotomy that separates it from the success of Large Language Models (LLMs). While LLMs thrive on a simple, unified objective (next-token prediction), vision models split into two camps: masked image modeling (MIM), which focuses on low-level pixel reconstruction as in MAE, and joint-embedding architectures. Methods like JEPA predict latent targets from context but often require momentum encoders (LeJEPA, however, removes these additional heuristics), while SimSiam relies on Siamese networks and invariance constraints. Attempts to bridge this gap, such as BEiT or VAR, often rely on discrete visual tokenizers (VQ-VAEs) to force images into a “vocabulary,” introducing quantization artifacts and architectural overhead.

The authors of NEPA propose a method that adheres strictly to the autoregressive philosophy of GPT but bypasses the need for discrete tokens. The hypothesis is that the “next token” in vision does not need to be a word or a codebook index; it can be a continuous vector in the embedding space. By predicting the future in latent space, the model is forced to internalize semantic dependencies (for example, that a dog’s ear likely precedes a dog’s eye in the patch sequence) rather than merely modeling the high-frequency texture correlations that pixel reconstruction requires.
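To make the idea of a continuous “next token” concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names (`cosine_similarity`, `causal_context_mean`, `next_embedding_loss`) are hypothetical, the running-mean predictor is a toy stand-in for a causal ViT, and the use of negative cosine similarity as the distance is an assumption made for illustration. The point is only the shift-by-one structure: the prediction at position t sees patches 0..t and is scored against the embedding at position t+1, which is treated as a fixed target.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)


def causal_context_mean(embeddings, t):
    """Toy causal predictor (assumption): mean of patch embeddings 0..t.

    A real model would be a Transformer with a causal attention mask;
    the only property kept here is that positions after t are never read.
    """
    dim = len(embeddings[0])
    return [sum(e[d] for e in embeddings[: t + 1]) / (t + 1) for d in range(dim)]


def next_embedding_loss(embeddings, predictor):
    """Mean negative cosine similarity between prediction t and target t+1."""
    losses = []
    for t in range(len(embeddings) - 1):
        pred = predictor(embeddings, t)   # conditioned on patches 0..t only
        target = embeddings[t + 1]        # fixed target; no gradient would flow here
        losses.append(-cosine_similarity(pred, target))
    return sum(losses) / len(losses)


# Three 2-D "patch embeddings" in raster order (made-up values).
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(next_embedding_loss(patches, causal_context_mean))
```

Because the target is a vector rather than a class index, no codebook or softmax over a vocabulary appears anywhere in the loss; a predictor that returned the true next embedding would drive the loss toward -1.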