On the Origin of Algorithmic Progress in AI

Authors: Hans Gundlach, Alex Fogelson, Jayson Lynch, Ana Trišović, Jonathan Rosenfeld, Anmol Sandhu, Neil Thompson

Paper: https://arxiv.org/abs/2511.21622

Code: https://github.com/hansgundlach/Experimental_Progress

TL;DR

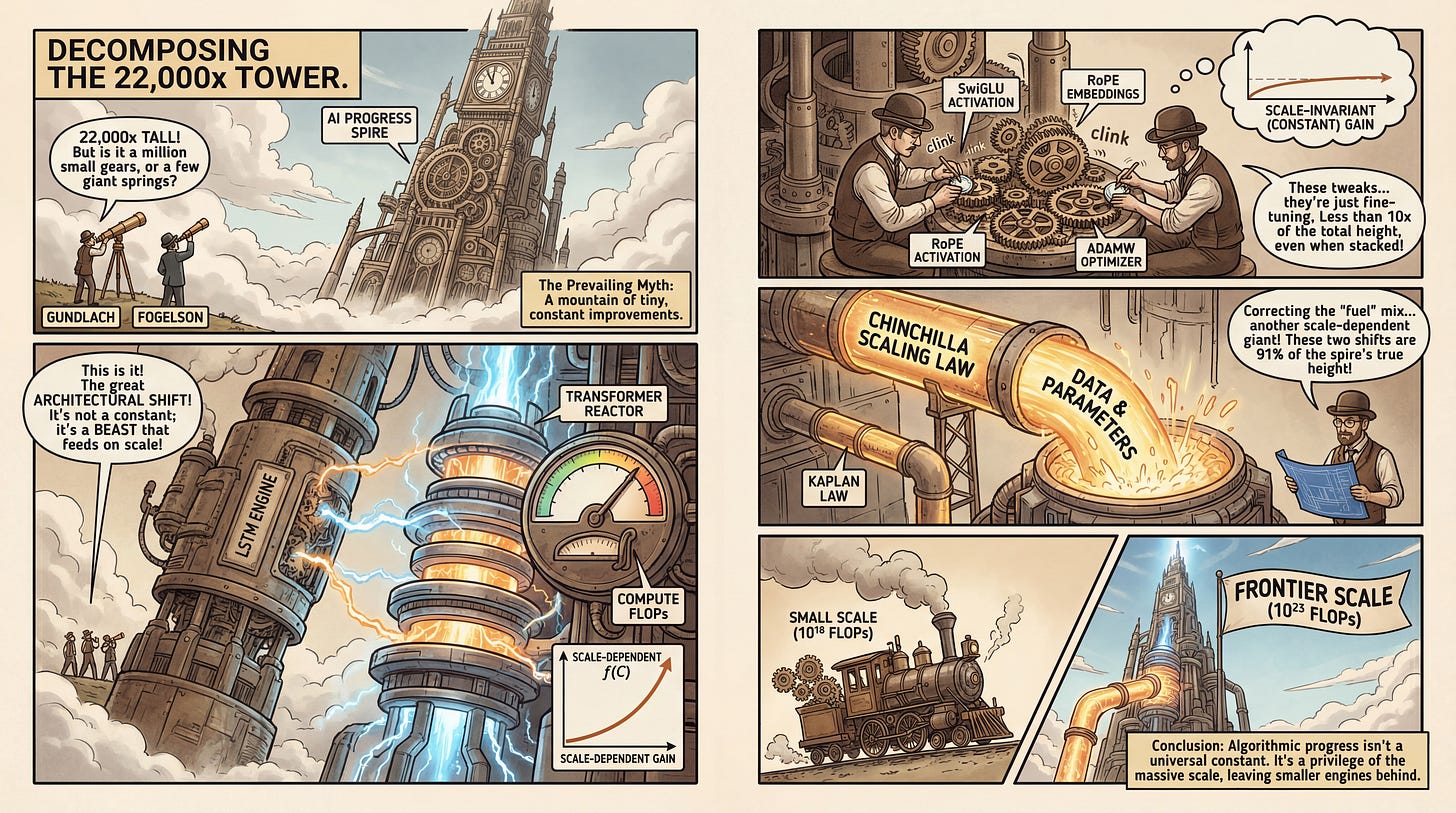

WHAT was done? The authors deconstruct the widely cited estimate that algorithmic efficiency increased by roughly 22,000× between 2012 and 2023. By conducting ablation studies on modern Transformers (reverting components like SwiGLU and Rotary Embeddings) and performing scaling experiments against LSTMs, they determine that the vast majority of “progress” is not the sum of many small improvements. Instead, 91% of extrapolated gains at the frontier (10²³ FLOPs) stem from two specific, scale-dependent shifts: the architectural transition from LSTMs to Transformers and the optimization shift from Kaplan to Chinchilla scaling laws.

WHY it matters? This challenges the prevailing narrative that AI progress is driven by a steady exponential stream of algorithmic refinements independent of hardware. The findings suggest that many algorithmic innovations yield negligible benefits at small scales and only compound at massive compute budgets. Consequently, algorithmic progress is not a universal constant; it is a function of compute scale, implying that future efficiency gains may be inextricably linked to our ability to continue scaling hardware resources.
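
To make "a function of compute scale" concrete, here is a minimal sketch that assumes each architecture follows a pure power law, loss = a · C^(−b), in training compute C. The function name `compute_equivalent_gain` and all coefficients are hypothetical and chosen only for illustration; they are not the paper's fitted values. The gain is how many times more compute the older method needs to match the newer method's loss at a given budget.

```python
def compute_equivalent_gain(compute, a_old, b_old, a_new, b_new):
    """Compute multiplier the old method needs to match the new method's loss.

    Assumes both methods follow loss = a * compute ** (-b).
    All parameters are illustrative placeholders, not values from the paper.
    """
    # Loss the new method reaches at this compute budget.
    target_loss = a_new * compute ** (-b_new)
    # Invert the old method's power law to find the compute that hits that loss.
    compute_old = (a_old / target_loss) ** (1.0 / b_old)
    return compute_old / compute


# Hypothetical coefficients: the new method has a slightly steeper exponent.
A_OLD, B_OLD = 1.0, 0.05
A_NEW, B_NEW = 1.0, 0.06

for flops in (1e15, 1e19, 1e23):
    gain = compute_equivalent_gain(flops, A_OLD, B_OLD, A_NEW, B_NEW)
    print(f"{flops:.0e} FLOPs: old method needs {gain:,.0f}x more compute")
```

Under these assumptions the gain simplifies to (a_old / a_new)^(1 / b_old) · C^(b_new / b_old − 1), so any difference in exponents makes the measured efficiency gain grow with the compute budget at which it is evaluated, whereas identical exponents would give a constant multiplier at every scale.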