ORION: Teaching Language Models to Reason Efficiently in the Language of Thought

Authors: Kumar Tanmay, Kriti Aggarwal, Paul Pu Liang, Subhabrata Mukherjee

Paper: https://arxiv.org/abs/2511.22891

Code: https://github.com/Hippocratic-AI-Research/Orion

TL;DR

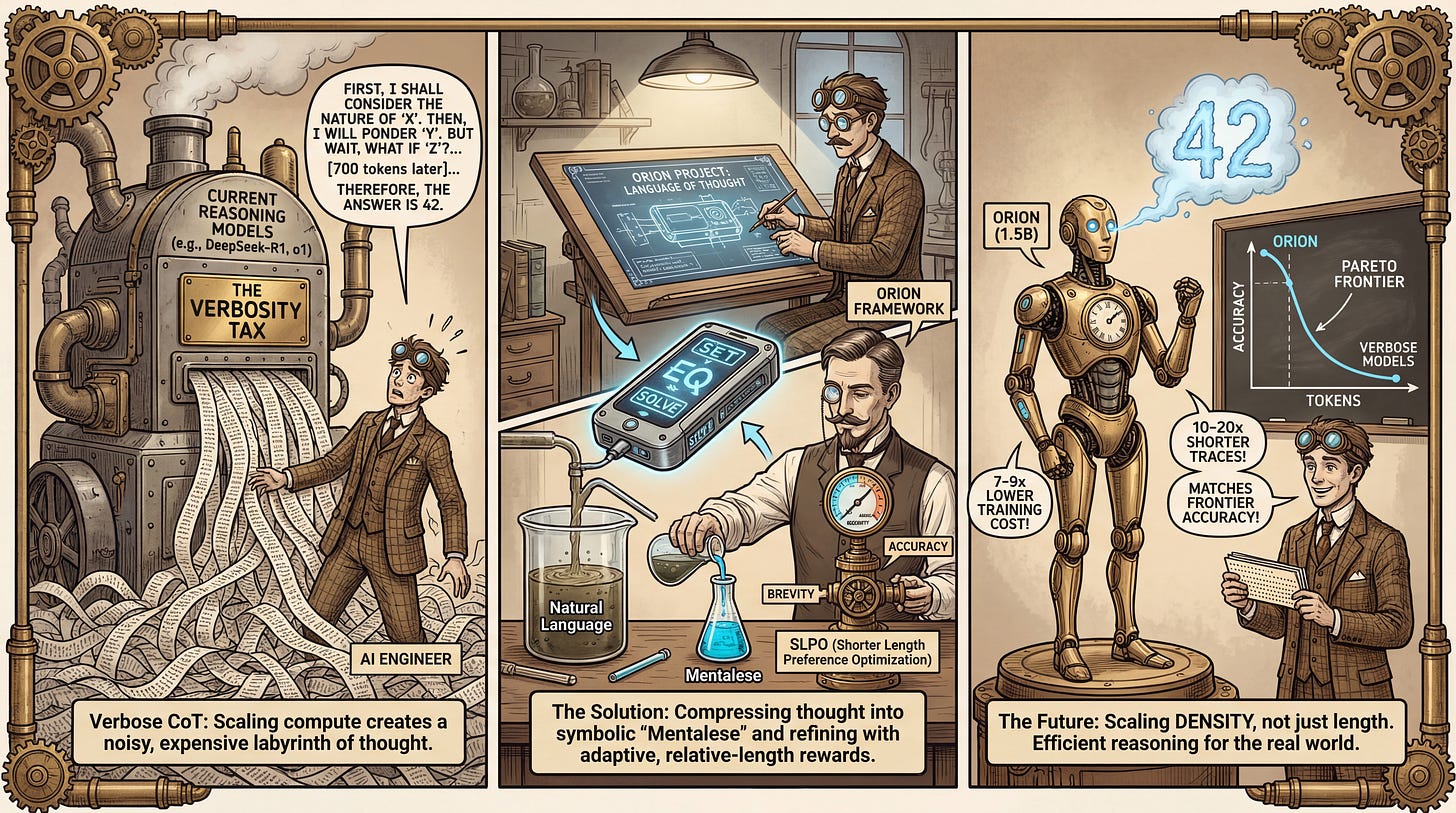

WHAT was done? The authors introduce ORION, a framework that compresses the reasoning traces of Large Reasoning Models (LRMs) by aligning them to a symbolic “Language of Thought” called Mentalese. They achieve this via a two-stage process: Supervised Fine-Tuning (SFT) on a new dataset of 40k compressed reasoning traces, followed by a novel reinforcement learning objective called Shorter Length Preference Optimization (SLPO), which dynamically rewards brevity without sacrificing accuracy.

WHY it matters? Current reasoning models (like DeepSeek-R1 or OpenAI o1) achieve performance by scaling test-time compute, often resulting in verbose, redundant, and expensive outputs. ORION demonstrates that a 1.5B parameter model can match or exceed the accuracy of much larger models (including GPT-4o and Claude 3.5 Sonnet on math benchmarks) while generating reasoning traces that are 10–20x shorter. This drastically reduces inference latency and training costs (by 7-9x), offering a viable path for deploying reasoning agents in resource-constrained or real-time environments.

Details

The Verbosity Tax in Reasoning Models

The current paradigm in Large Reasoning Models (LRMs) is dominated by the “scaling test-time compute” approach. Models like DeepSeek-R1 and OpenAI’s o1 series generate extensive Chain-of-Thought (CoT) traces to break down complex problems. While effective, this introduces a significant “verbosity tax.” As illustrated in Figure 2, a model might generate hundreds of tokens of redundant natural language just to solve a simple arithmetic problem, whereas a human cognitive process is likely far more compressed and symbolic.

This verbosity creates high inference latency and complicates the training pipeline, as generating long rollouts during Reinforcement Learning (RL) leaves GPUs idle for extended periods. The authors argue that high performance does not inherently require high verbosity; rather, models need to be taught to reason in a structured, compressed format closer to the “Language of Thought” hypothesis (LOTH).