Recursive Language Models

Authors: Alex L. Zhang, Tim Kraska, Omar Khattab

Paper: https://arxiv.org/abs/2512.24601

TL;DR

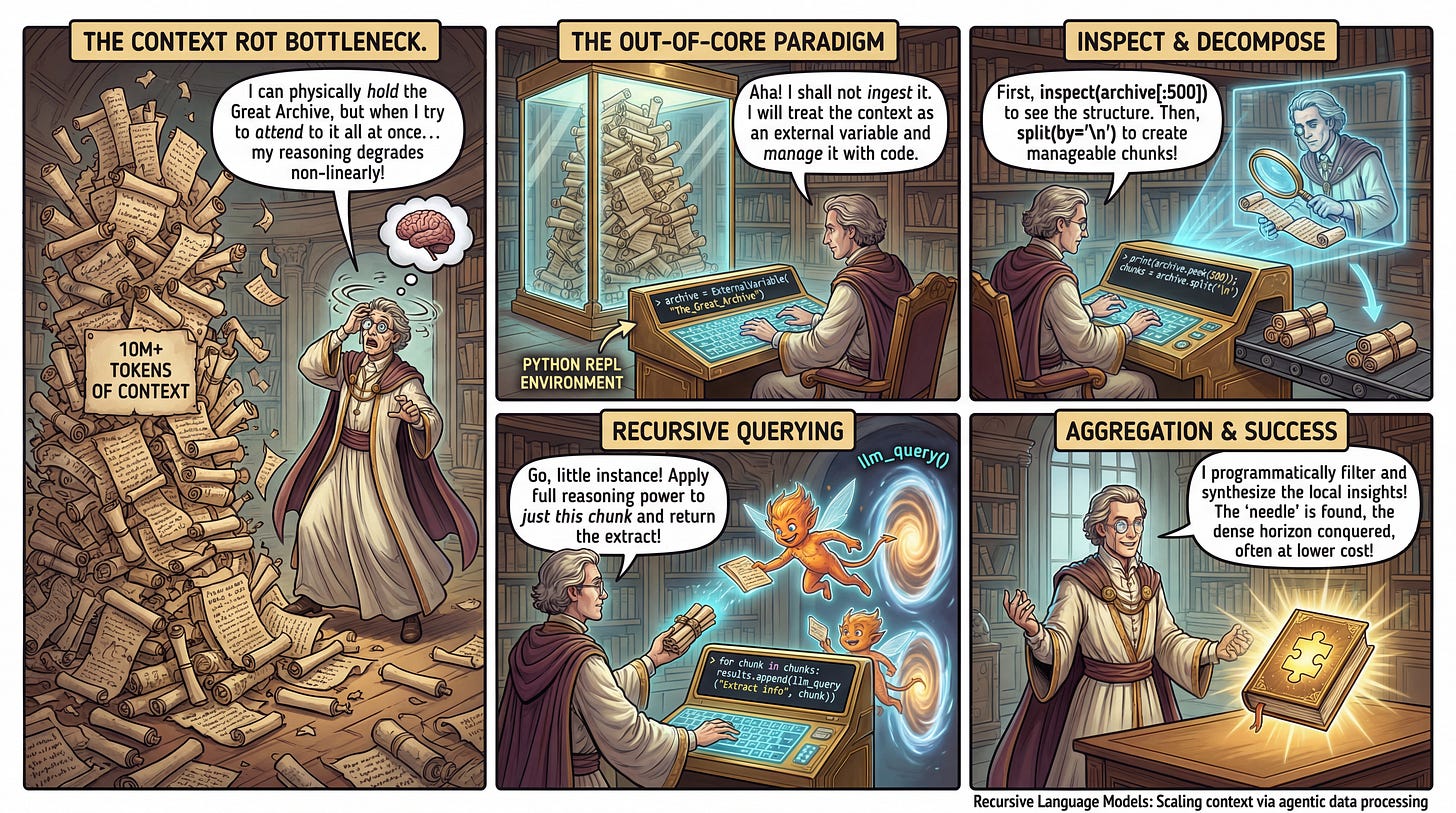

WHAT was done? The MIT authors propose Recursive Language Models (RLMs), an inference-time paradigm that decouples input length from the model’s physical context window. Instead of tokenizing massive prompts directly, RLMs treat the input as an external variable within a Python REPL environment. The model writes code to programmatically inspect, chunk, and recursively query sub-instances of itself over specific segments of the data.

WHY it matters? This approach effectively solves “context rot”—the performance degradation observed in long-context models (even frontier ones like GPT-5) when processing dense information. By leveraging code for data management and recursion for local reasoning, RLMs achieve state-of-the-art performance on inputs exceeding 10 million tokens (two orders of magnitude beyond current limits) while often reducing inference costs compared to full-context ingestion.

Details

The Context Rot Bottleneck

Despite the architectural triumph of expanding context windows to millions of tokens, a fundamental bottleneck remains: the effective utilization of that context. Recent evaluations, such as RULER and OOLONG, demonstrate that while models can physically ingest massive inputs, their reasoning capabilities degrade non-linearly as information density increases. This phenomenon, often termed “context rot,” implies that simply scaling the attention mechanism is insufficient for tasks requiring dense, global reasoning over long horizons.

Current mitigation strategies typically fall into two buckets: architectural optimizations (e.g., sparse attention) or compression heuristics (e.g., RAG, summarization loops). However, these methods often fail on “needle-in-a-haystack” retrieval or complex aggregation tasks like BrowseComp-Plus because they lossily compress the input before the model can reason about what is relevant. The authors of this paper operate on a different intuition: inspired by out-of-core algorithms in database systems—where datasets larger than RAM are processed by paging data in and out—they propose moving the context out of the neural network’s immediate memory and into an interactive, symbolic environment.