Scaling Latent Reasoning via Looped Language Models

Authors: Rui-Jie Zhu, Zixuan Wang, Kai Hua, Tianyu Zhang, Ziniu Li, Haoran Que, Boyi Wei, Zixin Wen, Fan Yin, He Xing, Lu Li, Jiajun Shi, Kaijing Ma, Shanda Li, Taylor Kergan, Andrew Smith, Xingwei Qu, Mude Hui, Bohong Wu, Qiyang Min, Hongzhi Huang, Xun Zhou, Wei Ye, Jiaheng Liu, Jian Yang, Yunfeng Shi, Chenghua Lin, Enduo Zhao, Tianle Cai, Ge Zhang, Wenhao Huang, Yoshua Bengio, Jason Eshraghian

Paper: https://arxiv.org/abs/2510.25741

Project Page: http://ouro-llm.github.io

TL;DR

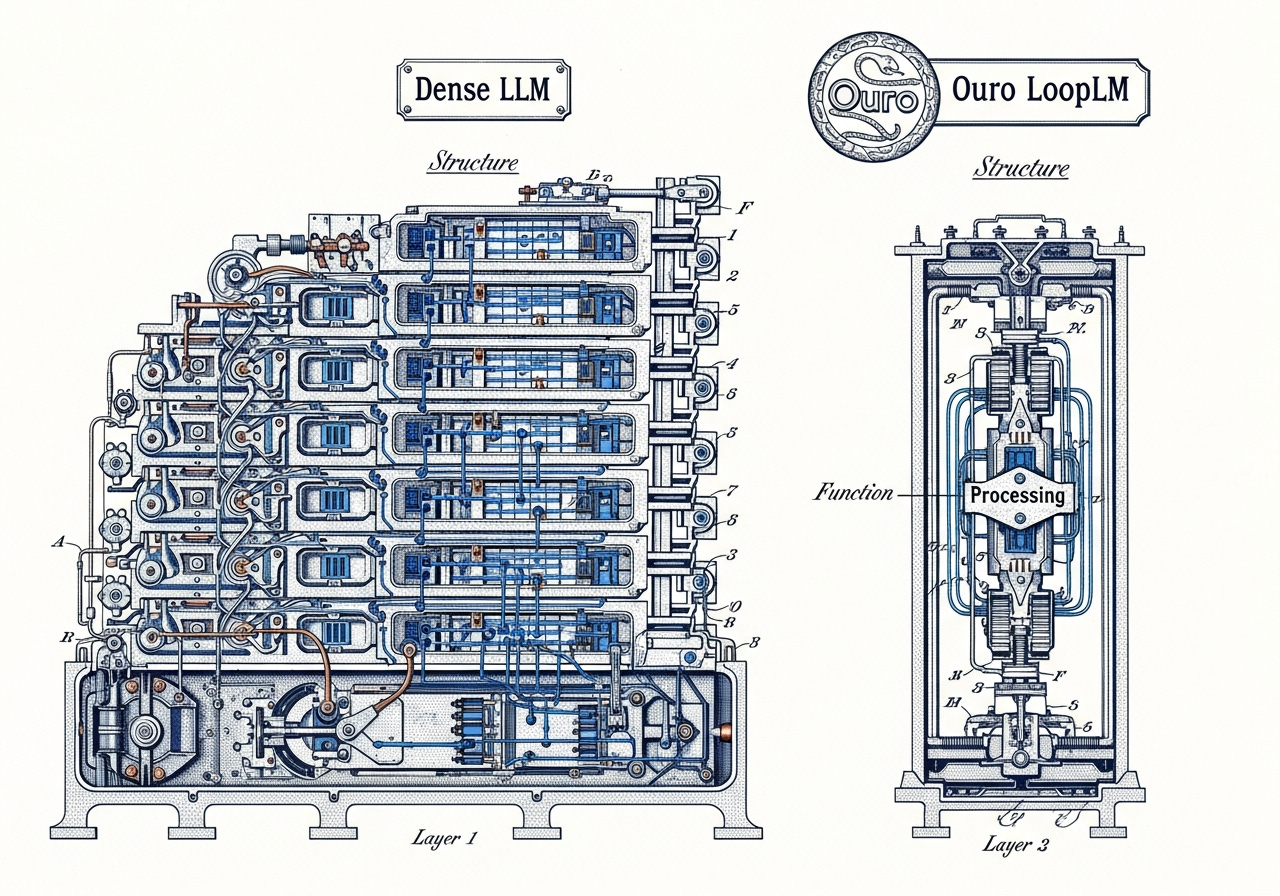

WHAT was done? The paper introduces Ouro, a family of Looped Language Models (LoopLMs) pre-trained on 7.7T tokens. Instead of scaling parameter count, Ouro uses a recurrent architecture in which a shared block of layers is applied iteratively in latent space. This builds reasoning directly into pre-training, with an adaptive early-exit mechanism deciding how many iterations to run. The exit gate is trained in two stages: first, an entropy-regularized objective encourages broad exploration of computational depths; then, a focused training stage tunes the gate based on realized performance gains.
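The two-stage exit-gate training can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not Ouro's implementation: we assume the gate emits one logit per recurrent step, that exit probabilities follow a survival distribution ("exit at step t after continuing through every earlier step"), that stage 1 minimizes the expected per-step loss minus β times the entropy of that distribution, and that stage 2 fits the gate with binary cross-entropy against a "continue" target derived from how much one extra step lowered the loss. The function names and the values of `beta`, `k`, `gamma`, and the inference threshold `q` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))


def exit_distribution(gate_logits):
    """Map per-step gate logits (shape (T,)) to a distribution over exit steps.

    Step t exits with probability lambda_t times the probability of having
    continued through every earlier step; the final step takes all remaining
    mass, so the probabilities sum to 1.
    """
    lam = sigmoid(gate_logits)
    # survival[t] = prod_{j<t} (1 - lambda_j): the chance of reaching step t
    survival = np.concatenate(([1.0], np.cumprod(1.0 - lam)[:-1]))
    p = lam * survival
    p[-1] = survival[-1]
    return p


def stage1_loss(step_losses, gate_logits, beta=0.05):
    """Stage 1 (assumed form): expected loss over exit steps minus beta * entropy."""
    p = exit_distribution(gate_logits)
    entropy = -np.sum(p * np.log(p + 1e-12))
    return float(np.dot(p, step_losses) - beta * entropy)


def stage2_targets(step_losses, k=50.0, gamma=0.005):
    """Stage 2 (assumed form): target probability of continuing past step t,
    high only when one more step lowered the loss by more than gamma.
    In a real setup these losses would come from a frozen, detached LM."""
    losses = np.asarray(step_losses, dtype=float)
    improvement = np.maximum(0.0, losses[:-1] - losses[1:])
    return sigmoid(k * (improvement - gamma))


def stage2_loss(step_losses, gate_logits, k=50.0, gamma=0.005):
    """Binary cross-entropy between the gate's continue probability and the target."""
    target = stage2_targets(step_losses, k, gamma)
    cont = 1.0 - sigmoid(gate_logits)[:-1]  # the last step has no successor
    eps = 1e-12
    bce = target * np.log(cont + eps) + (1.0 - target) * np.log(1.0 - cont + eps)
    return float(-np.mean(bce))


def exit_step(gate_logits, q=0.5):
    """Inference: first step (0-based) whose cumulative exit probability reaches q."""
    cdf = np.cumsum(exit_distribution(gate_logits))
    return int(min(np.searchsorted(cdf, q), len(cdf) - 1))


# Toy example: 4 recurrent steps, per-step losses that flatten out after step 1.
logits = np.array([-2.0, 0.0, 2.0, 0.0])
losses = np.array([3.0, 2.5, 2.499, 2.4989])
print(exit_distribution(logits).round(3))  # probabilities over the 4 exit steps
print(stage1_loss(losses, logits), stage2_loss(losses, logits))
print(exit_step(logits, q=0.5))  # -> 1
```

In this sketch, raising β in stage 1 spreads probability mass over more depths, while in stage 2 a step whose extra iteration barely lowers the loss receives a low continue target, which pushes the gate toward exiting early on inputs that do not benefit from more computation.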

WHY it matters? This work establishes iterative latent computation as a third scaling axis alongside parameters and data. It demonstrates 2-3x parameter efficiency: the 1.4B and 2.6B Ouro models match or outperform standard 4B to 8B transformers on complex reasoning tasks. Crucially, controlled experiments show that this advantage stems not from greater knowledge storage capacity (which stays constant) but from a better ability to manipulate and compose knowledge. The resulting latent reasoning traces are also more causally faithful than conventional Chain-of-Thought, and model safety improves as computation deepens. Together, these results point toward more efficient, trustworthy, and capable LLMs.

Details

Introduction: The Third Scaling Axis

The prevailing wisdom in large language model (LLM) development has centered on two scaling axes: more model parameters and more training data. While effective, this paradigm faces diminishing returns and mounting resource constraints. Inference-time strategies such as Chain-of-Thought (CoT) (https://arxiv.org/abs/2201.11903) let models spend more compute on hard problems, but they defer reasoning to post-training, and the generated rationale does not always causally reflect how the model actually reaches its answer.

This paper presents a compelling alternative, arguing for a third scaling axis: iterative latent computation. The authors introduce Ouro, a family of Looped Language Models (LoopLMs) that build reasoning directly into the pre-training phase. By recursively applying a set of shared parameters, Ouro decouples computational depth from parameter count, enabling a new paradigm of “scaling to substance.”
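To make the decoupling concrete, here is a minimal NumPy sketch. All names are hypothetical, and a toy residual `tanh` update stands in for real transformer layers; it is not Ouro's code. The same weight matrices are reused on every loop, so the parameter count is fixed by `n_layers` and `d_model`, while effective depth is `n_layers × n_loops`.

```python
import numpy as np


def make_block(d_model, n_layers, seed=0):
    """Hypothetical shared block: one weight matrix per layer (a toy stand-in
    for attention + MLP sublayers)."""
    rng = np.random.default_rng(seed)
    return [rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)
            for _ in range(n_layers)]


def apply_block(h, layers):
    """One pass through the shared layers, each with a residual update."""
    for W in layers:
        h = h + np.tanh(h @ W)
    return h


def looped_forward(h0, layers, n_loops):
    """Reapply the SAME layers n_loops times and keep the hidden state after
    each loop (an early-exit gate could read any of these states)."""
    states, h = [], h0
    for _ in range(n_loops):
        h = apply_block(h, layers)
        states.append(h)
    return states


def param_count(layers):
    return sum(W.size for W in layers)


def effective_depth(n_layers, n_loops):
    return n_layers * n_loops


layers = make_block(d_model=16, n_layers=2)
h0 = np.ones((3, 16))  # 3 token positions, hidden size 16
for n_loops in (1, 4):
    states = looped_forward(h0, layers, n_loops)
    print(n_loops, param_count(layers), effective_depth(2, n_loops), len(states))
# param_count stays at 512 while effective depth grows from 2 to 8
```

A real LoopLM would tie full transformer layers in the same way; the sketch only illustrates that adding loops adds compute and depth, not parameters.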
