Solving the compute crisis with physics-based ASICs

Authors: Maxwell Aifer, Zach Belateche, Suraj Bramhavar, Kerem Y. Camsari, Patrick J. Coles, Gavin Crooks, Douglas J. Durian, Andrea J. Liu, Anastasia Marchenkova, Antonio J. Martinez, Peter L. McMahon, Faris Sbahi, Benjamin Weiner, and Logan G. Wright

Paper: https://arxiv.org/abs/2507.10463

Code:

- https://github.com/zachbe/digial-ising

- https://github.com/zachbe/simulated-bifurcation-ising/

- https://github.com/dwavesystems/dwave-ocean-sdk/

TL;DR

WHAT was done? The authors propose a new computing paradigm called Physics-based Application-Specific Integrated Circuits (ASICs). Instead of expending massive amounts of energy to enforce idealized digital abstractions (like statelessness, perfect determinism, and synchronized operations), this approach directly harnesses the intrinsic, and often "messy," physical dynamics of hardware for computation. By strategically relaxing these traditional constraints, the hardware is designed to operate as an exact realization of a physical process, making it exceptionally efficient for certain tasks. The paper outlines a comprehensive vision, from the fundamental principles and design strategies to a roadmap for scaling and integration into heterogeneous computing systems.

WHY it matters? This work directly confronts the "compute crisis" fueled by modern AI, which is defined by unsustainable energy consumption, prohibitive training costs, and the fast-approaching limits of conventional CMOS scaling. Physics-based ASICs offer a plausible path forward by fundamentally rethinking the relationship between software and hardware. This paradigm promises substantial gains in energy efficiency and computational throughput, potentially accelerating critical AI workloads like diffusion models, sampling, and optimization, as well as scientific simulations. Ultimately, this could help democratize access to high-performance computing and unlock new frontiers in AI that are currently bottlenecked by the brute-force approach of digital computing.

Details

The Cracks in the Foundation of Modern Computing

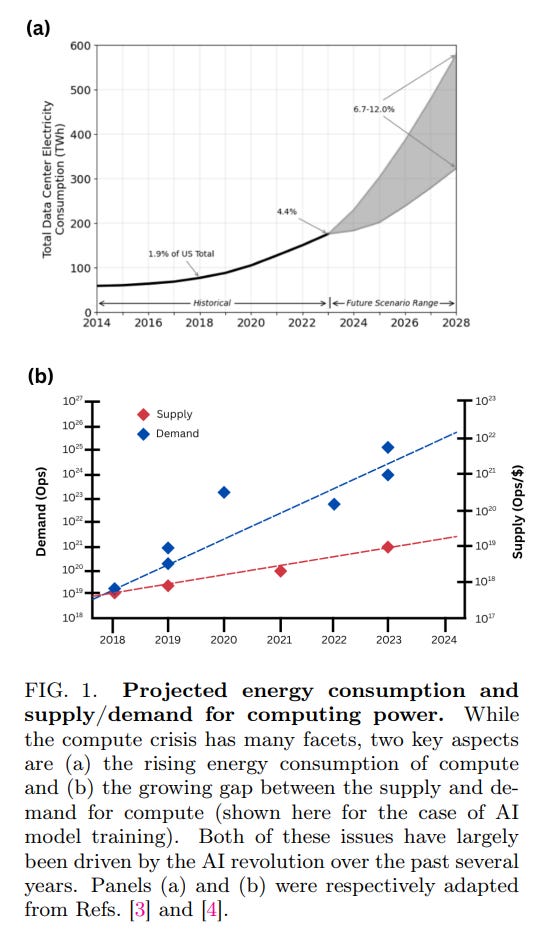

The relentless advance of artificial intelligence has pushed our current computing infrastructure to its breaking point. In a compelling vision paper, a team of researchers from academia and industry argues that we are facing a full-blown "compute crisis." This crisis is not a distant threat but a present-day reality, manifesting across three critical axes: unsustainable energy demands, with AI data centers on track to consume 6% of U.S. electricity by 2026 (Figure 1); prohibitive costs that centralize cutting-edge AI within a handful of corporate giants; and the physical demise of Moore's Law, as transistor miniaturization runs into the hard limits of physics.

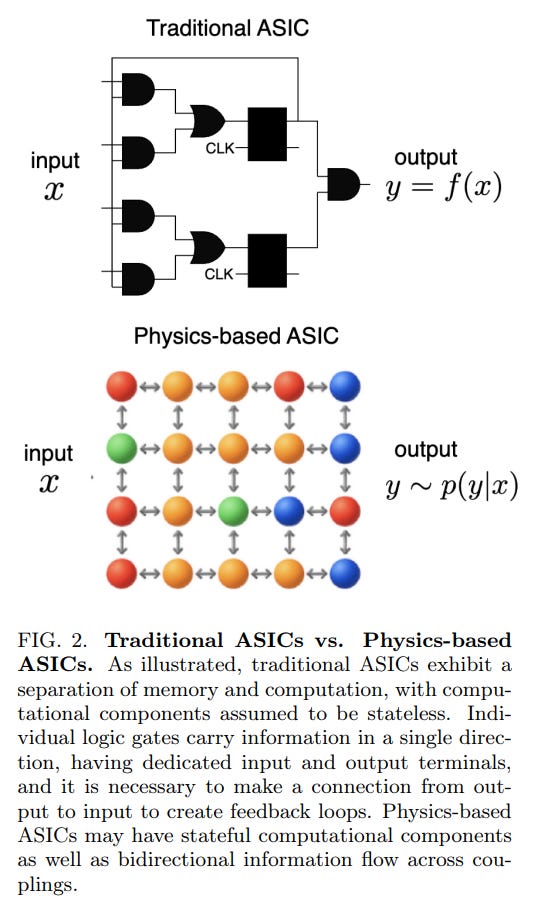

The authors' central thesis is that the problem lies in the very foundation of digital computing: our decades-long effort to fight, suppress, and abstract away the underlying physics of our hardware. We expend enormous energy to enforce idealized behaviors (stateless logic gates, unidirectional data flow, perfect determinism, and global synchronization) that are not physically natural. The paper proposes a radical alternative: instead of fighting physics, we should leverage it.

This work can be seen as the culmination of the trend toward domain-specific architectures. Computing has already moved from general-purpose CPUs to specialized hardware such as GPUs and TPUs. Physics-based ASICs are the next logical step, pushing hardware-software co-design to its physical limit by building circuits that are a physical instantiation of the computation itself.

A Paradigm Shift: Physics-Based ASICs

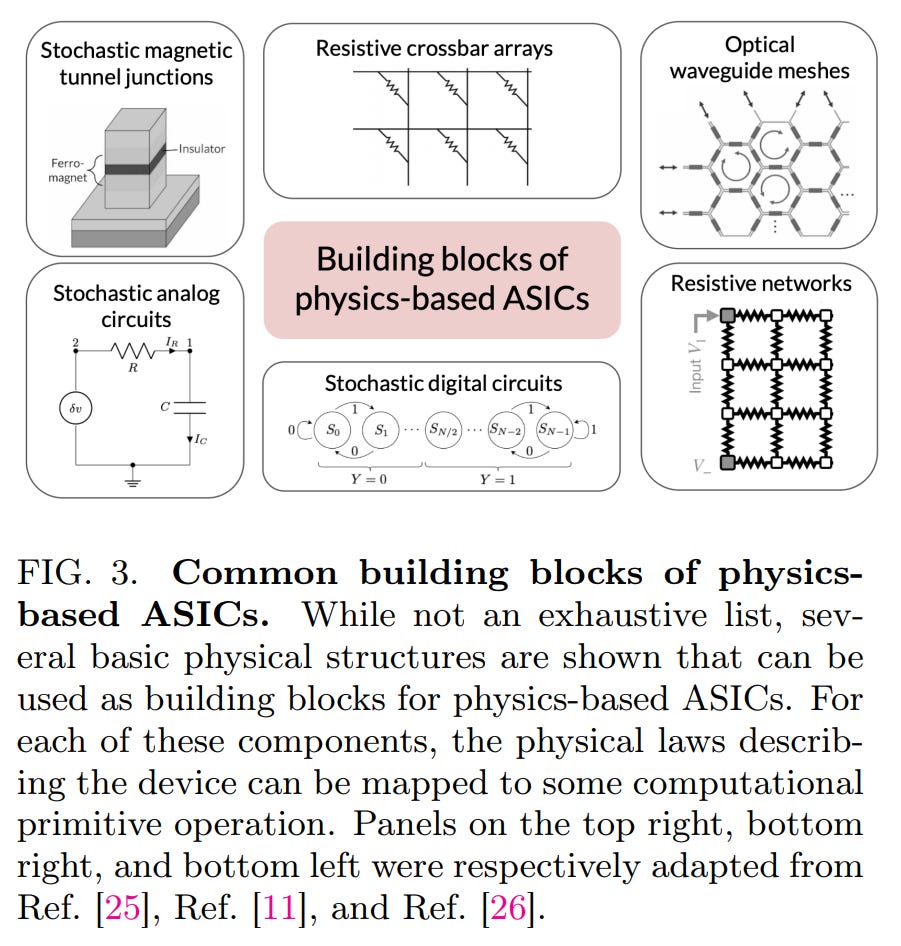

The proposed solution is a new class of hardware: Physics-based Application-Specific Integrated Circuits (ASICs). The core idea is to relax the rigid constraints of digital design and instead build circuits that directly harness their intrinsic physical dynamics for computation.

An Intuitive Example: How Resistors Can Optimize

To make the idea of "computing with physics" more concrete, consider a simple network of resistors. When voltages are applied to some of its nodes, currents flow and settle according to Kirchhoff's laws. For fixed applied voltages, the steady state the system reaches is the one that minimizes the total power dissipated as heat. This physical process of energy minimization is directly analogous to solving certain mathematical optimization problems (here, a quadratic minimization, which is equivalent to solving a linear system). A conventional computer would solve such a problem by running an iterative algorithm, which for large networks means millions of digital operations. A physics-based ASIC, in this case the resistive network itself, obtains the answer simply by relaxing to its steady state, limited only by the circuit's settling time. This is the essence of the efficiency gain: the computation is embedded in the natural evolution of the physical system.
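To make the resistor example concrete, here is a minimal sketch, assuming a hypothetical four-node network with node 0 clamped at 1 V and node 3 at 0 V. The gradient-descent loop is only a digital stand-in for the circuit settling, not a model of its real transient dynamics. It shows that the state of minimum dissipated power is exactly the one in which Kirchhoff's current law holds at every free node.

```python
# Each resistor: (node_a, node_b, conductance G in siemens). Illustrative values.
RESISTORS = [(0, 1, 1.0), (0, 2, 2.0), (1, 2, 1.0), (1, 3, 1.0), (2, 3, 1.0)]
CLAMPED = {0: 1.0, 3: 0.0}  # nodes held by voltage sources (volts)
N_NODES = 4


def dissipated_power(v):
    """Total Joule heating: sum of G * (Va - Vb)**2 over all resistors."""
    return sum(g * (v[a] - v[b]) ** 2 for a, b, g in RESISTORS)


def net_current(v, node):
    """Total current leaving `node`; Kirchhoff's current law says it is 0 at free nodes."""
    total = 0.0
    for a, b, g in RESISTORS:
        if a == node:
            total += g * (v[a] - v[b])
        elif b == node:
            total += g * (v[b] - v[a])
    return total


def relax(steps=500, lr=0.05):
    """Gradient descent on dissipated power over the free (unclamped) nodes.

    dP/dV_i = 2 * net_current(v, i), so driving the gradient to zero is the same as
    satisfying Kirchhoff's current law: minimum dissipation and KCL coincide.
    """
    v = [CLAMPED.get(i, 0.0) for i in range(N_NODES)]
    for _ in range(steps):
        grad = [2.0 * net_current(v, i) for i in range(N_NODES)]
        v = [v[i] if i in CLAMPED else v[i] - lr * grad[i] for i in range(N_NODES)]
    return v


v = relax()
print([round(x, 4) for x in v])  # [1.0, 0.5455, 0.6364, 0.0]
```

For this network the exact free-node voltages are 6/11 V and 7/11 V, which is what the loop converges to. The physical circuit reaches the same state without executing any of these iterations.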

As illustrated in the paper (Figure 2), this means moving away from a world of enforced digital purity towards one that embraces the inherent "messiness" of the physical world:

Statefulness: Components can retain memory of past states, blurring the line between computation and memory.

Bidirectionality: Information can flow in multiple directions, allowing for complex, feedback-driven dynamics.

Non-determinism: Stochasticity and noise are not treated as errors to be corrected but as potential computational resources.

Asynchronicity: The requirement for a global, energy-hungry clock is relaxed in favor of local or clockless operation.
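One common way to treat noise as a resource is the probabilistic bit (p-bit) from the probabilistic-computing literature. It is brought in here as an illustration and is not described in this summary. A p-bit flips randomly between -1 and +1, but its average output follows a tunable tanh of its input, so networks of p-bits can sample from probability distributions. The sketch below simulates one p-bit; the function name and its parameters (`input_current`, `beta`) are hypothetical.

```python
import math
import random


def p_bit(input_current, beta, rng):
    """Hypothetical p-bit: returns +1 with probability (1 + tanh(beta * I)) / 2, else -1."""
    # A uniform draw on [-1, 1] stands in for the device's intrinsic physical noise.
    return 1 if math.tanh(beta * input_current) > rng.uniform(-1.0, 1.0) else -1


rng = random.Random(0)
samples = [p_bit(0.5, 1.0, rng) for _ in range(100_000)]
mean = sum(samples) / len(samples)
print(f"sample mean {mean:.3f} vs tanh(0.5) = {math.tanh(0.5):.3f}")
```

Each individual output is random, yet the statistics are precisely controlled by the input, which is the sense in which stochasticity becomes computational rather than an error.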

By working with the physics, these ASICs aim to perform certain computations with vastly greater efficiency. A scalar multiplication that might require hundreds of transistors in a conventional design could potentially be done with a handful of components in a physics-based one.
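A standard illustration of this point (our example, not one taken from the paper) is the resistive crossbar. Each multiplication is performed by a single resistor through Ohm's law, and the summation happens for free where currents meet on a shared wire. The sketch below simulates an idealized crossbar computing a matrix-vector product; the function name and the Gaussian noise model are assumptions.

```python
import random


def crossbar_matvec(conductances, voltages, noise_std=0.0, seed=0):
    """Column currents I_j = sum_i G[i][j] * V[i] of an idealized resistive crossbar.

    Ohm's law does each multiplication (I = G * V); Kirchhoff's current law does the
    summation where currents merge on a shared column wire. `noise_std` adds Gaussian
    read noise as a crude, assumed model of analog non-idealities.
    """
    rng = random.Random(seed)
    n_rows, n_cols = len(conductances), len(conductances[0])
    currents = []
    for j in range(n_cols):
        current = sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        if noise_std > 0:
            current += rng.gauss(0.0, noise_std)
        currents.append(current)
    return currents


G = [[1.0, 0.5], [2.0, 0.0], [0.25, 1.0]]  # 3 input rows x 2 output columns (siemens)
V = [0.2, 0.1, 0.4]                         # input voltages (volts)
ideal = crossbar_matvec(G, V)               # about [0.5, 0.5] amperes
noisy = crossbar_matvec(G, V, noise_std=0.01)
print(ideal, noisy)
```

In a digital design each of these six products would need a multiplier circuit; in the crossbar each is a single resistor, at the price of the analog noise that the `noise_std` term stands in for.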

A Principled Approach to Hardware-Software Co-Design

This vision is not about creating a universal computer but about developing highly specialized, efficient processors. The authors outline a principled methodology for their creation centered on deep hardware-software co-design (Figure 4).

The strategy involves finding the intersection between two sets of algorithms: those required by a target application (the "top-down" view) and those that can be run efficiently on a specific physical structure (the "bottom-up" view).

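Performance is measured not just in speed but also in energy, using simple ratios that compare a physics-based ASIC (structure S) with a state-of-the-art (SOTA) digital counterpart (e.g., a GPU) running the same workload:

$$\text{speedup} = \frac{t_{\text{SOTA}}}{t_S}, \qquad \text{energy advantage} = \frac{E_{\text{SOTA}}}{E_S},$$

where t and E denote the time and energy needed to complete the workload.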

The goal of co-design is to develop algorithms and tune their hyperparameters to maximize these ratios, while keeping Amdahl's Law in mind: the overall speedup is limited by the portion of the workload that cannot be accelerated.
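The interaction between per-kernel ratios and Amdahl's Law is easy to quantify. The sketch below uses hypothetical numbers: even a kernel that runs 1000x faster yields only about a 10x end-to-end speedup if it covers 90% of the original runtime. The function names are illustrative, not from the paper.

```python
def ratio(sota_value, asic_value):
    """Advantage ratio, e.g. t_SOTA / t_S for time or E_SOTA / E_S for energy."""
    return sota_value / asic_value


def amdahl_speedup(accelerated_fraction, kernel_speedup):
    """End-to-end speedup when only `accelerated_fraction` of the runtime is sped up."""
    serial_fraction = 1.0 - accelerated_fraction
    return 1.0 / (serial_fraction + accelerated_fraction / kernel_speedup)


# Hypothetical numbers: the kernel takes 1.0 s on the GPU and 0.001 s on the ASIC,
# but accounts for only 90% of the original runtime.
kernel = ratio(sota_value=1.0, asic_value=0.001)    # 1000.0
print(round(amdahl_speedup(0.9, kernel), 2))          # 9.91
print(round(amdahl_speedup(0.9, float("inf")), 2))    # 10.0, the ceiling set by the other 10%
```

The same bound applies to the end-to-end energy ratio when the unaccelerated part of the workload keeps its original energy cost.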

A particularly powerful concept discussed is "Physical Machine Learning" (PML), in which the hardware's physical parameters θ are optimized through a learning process so that the physical system computes a desired function, ỹ = f_p(x, θ), where x is the input and ỹ the system's output. In its ultimate form, "Physical Learning," both the computation (inference) and the optimization (learning) would take place entirely within the physical hardware, paving the way for compact, hyper-efficient learning machines.
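The sketch below illustrates one generic way to train physical parameters, assuming a hypothetical device whose response tanh(theta[0] * x + theta[1]) can only be measured, with noise, rather than differentiated analytically. The parameters are tuned from forward measurements alone using central finite differences. This is a simple stand-in chosen for clarity: the paper does not prescribe this training method, and `physical_system` is not a real device model.

```python
import math
import random


def physical_system(x, theta, rng, noise_std=0.01):
    """Hypothetical hardware f_p(x, theta): tanh response with tunable gain and bias,
    read out with Gaussian measurement noise."""
    return math.tanh(theta[0] * x + theta[1]) + rng.gauss(0.0, noise_std)


def measured_loss(theta, data, rng):
    """Mean squared error between (noisy) hardware outputs and target outputs."""
    return sum((physical_system(x, theta, rng) - y) ** 2 for x, y in data) / len(data)


def train_in_situ(data, steps=2000, lr=0.2, eps=0.01, seed=0):
    """Tune theta from forward measurements only, using central finite differences,
    so no analytic model of the device's gradient is needed."""
    rng = random.Random(seed)
    theta = [0.1, 0.0]
    for _ in range(steps):
        grad = []
        for k in range(len(theta)):
            up, down = list(theta), list(theta)
            up[k] += eps
            down[k] -= eps
            diff = measured_loss(up, data, rng) - measured_loss(down, data, rng)
            grad.append(diff / (2 * eps))
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


# Targets come from an ideal response with gain 1.5 and bias -0.3 (illustrative choice).
xs = [i / 5 for i in range(-10, 11)]
data = [(x, math.tanh(1.5 * x - 0.3)) for x in xs]
theta = train_in_situ(data)
print([round(t, 2) for t in theta])  # expected to land near [1.5, -0.3]
```

Finite differences need two measurements per parameter per step, so this approach scales poorly as the number of tunable parameters grows.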

Applications and Tangible Progress

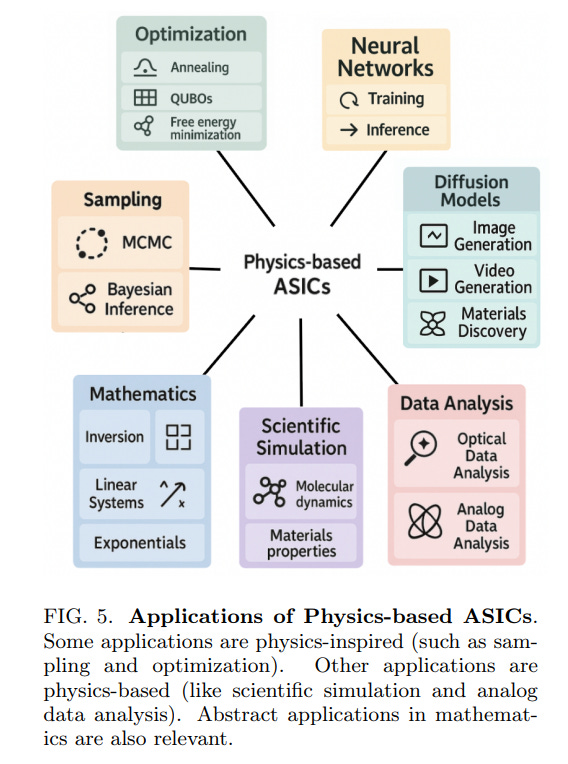

This paradigm is not merely theoretical. The paper highlights a diverse range of applications where physics-based ASICs are a natural fit (Figure 5), including optimization, sampling, diffusion models, and scientific simulation.

These are often tasks that are either inspired by or directly model physical processes. For instance:

Neural Networks: Modern ANNs are surprisingly resilient to noise, making them excellent candidates for noisy, low-power analog hardware.

Diffusion Models: These models are deeply connected to non-equilibrium thermodynamics, and their inherent stochasticity aligns perfectly with the nature of physics-based ASICs.

Optimization: Physical systems naturally seek low-energy states. This principle can be harnessed to solve complex optimization problems, such as QUBOs on Ising machines or using resistive networks that physically enact Kirchhoff's laws to minimize energy dissipation.
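To illustrate how an Ising formulation turns optimization into energy minimization, the sketch below maps Max-Cut on a small hypothetical graph (a 5-node ring) onto antiferromagnetic Ising spins and minimizes the energy with Metropolis simulated annealing, using random noise as a resource. This is a software stand-in for an Ising machine, not the algorithm of the paper or of the linked repositories; the graph and annealing schedule are arbitrary choices.

```python
import itertools
import math
import random

# Illustrative Max-Cut instance: a 5-node ring with unit edge weights.
RING_EDGES = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
N_SPINS = 5
NEIGHBOURS = {i: [b if a == i else a for a, b in RING_EDGES if i in (a, b)]
              for i in range(N_SPINS)}


def ising_energy(spins):
    """Antiferromagnetic Ising energy H = sum over edges of s_i * s_j.
    Each cut edge (s_i != s_j) contributes -1, so lower energy means a larger cut."""
    return sum(spins[i] * spins[j] for i, j in RING_EDGES)


def anneal(sweeps=300, beta_start=0.1, beta_end=5.0, seed=0):
    """Metropolis simulated annealing: thermal noise, slowly reduced, helps escape local minima."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(N_SPINS)]
    best = list(spins)
    for sweep in range(sweeps):
        beta = beta_start + (beta_end - beta_start) * sweep / (sweeps - 1)  # inverse temperature
        for i in range(N_SPINS):
            # Energy change if spin i flips: -2 * s_i * (sum of its neighbours' spins)
            delta = -2 * spins[i] * sum(spins[j] for j in NEIGHBOURS[i])
            if delta <= 0 or rng.random() < math.exp(-beta * delta):
                spins[i] = -spins[i]
                if ising_energy(spins) < ising_energy(best):
                    best = list(spins)
    return best


def brute_force_min_energy():
    """Exhaustive check, feasible only because the instance has 2**5 = 32 states."""
    return min(ising_energy(s) for s in itertools.product((-1, 1), repeat=N_SPINS))


best = anneal()
cut_size = (len(RING_EDGES) - ising_energy(best)) // 2
print(cut_size, ising_energy(best) == brute_force_min_energy())  # 4 True
```

Because the ring has an odd number of nodes, one edge must stay uncut, so the best possible cut has 4 edges. An Ising machine performs the equivalent relaxation in its physical dynamics instead of in a software loop.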

The authors cite compelling evidence from existing research, noting that latch-based Ising machines have achieved over 1000x speedups (IEEE Journal of Solid-State Circuits, 2024), coupled-oscillator systems have demonstrated 1-2 orders of magnitude lower energy consumption (Nature Electronics, 2025), and optical neural networks have operated with less than one photon per multiplication (Nature Communications, 2022).

This vision is further bolstered by recent progress in the closely related sub-field of "Thermodynamic Computing," which several of the authors are pioneering. For example, their work on Thermodynamic Natural Gradient Descent (TNGD) utilizes a specialized physics-based processor to make powerful second-order optimization methods computationally feasible. This exemplifies the co-design principles in action, where a novel hardware approach unlocks more powerful algorithms.
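A recurring idea in thermodynamic computing, and as we understand it the mechanism TNGD relies on to obtain natural-gradient directions (the inverse Fisher matrix applied to the gradient), is that a noisy linear physical system at equilibrium fluctuates around the solution of a linear system. The sketch below simulates that idea digitally under stated assumptions: an overdamped Langevin process whose time-averaged state estimates A^-1 b for a symmetric positive-definite A. In hardware the dynamics would run physically; `thermodynamic_solve` and all of its parameters are hypothetical.

```python
import random


def thermodynamic_solve(A, b, temperature=0.05, dt=0.02, steps=100_000, burn_in=5_000, seed=0):
    """Estimate x = A^-1 b from a simulated overdamped Langevin (Ornstein-Uhlenbeck) process.

    dx = -(A x - b) dt + sqrt(2 * temperature * dt) * N(0, 1), integrated with Euler-Maruyama.
    For symmetric positive-definite A, the continuous-time equilibrium is Gaussian with mean
    A^-1 b (covariance temperature * A^-1), so the time-averaged state estimates A^-1 b.
    """
    rng = random.Random(seed)
    n = len(b)
    x = [0.0] * n
    total = [0.0] * n
    noise_scale = (2.0 * temperature * dt) ** 0.5
    for step in range(steps):
        force = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [x[i] + dt * force[i] + noise_scale * rng.gauss(0.0, 1.0) for i in range(n)]
        if step >= burn_in:  # discard the initial transient before averaging
            total = [t + xi for t, xi in zip(total, x)]
    return [t / (steps - burn_in) for t in total]


A = [[2.0, 0.5], [0.5, 1.0]]
b = [1.0, 1.0]
x_est = thermodynamic_solve(A, b)
print([round(v, 3) for v in x_est])  # close to the exact solution [2/7, 6/7], about [0.286, 0.857]
```

Longer averaging windows or lower temperature reduce the statistical error of the estimate, trading time against precision.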

The Road Ahead: Challenges and a Call to Action

The authors are realistic about the challenges. The path forward involves a three-phase roadmap:

Demonstrate domain-specific advantage: Build proof-of-concepts that outperform conventional hardware on key workloads.

Architect scalable substrates: Overcome challenges in memory bandwidth, I/O, and scalability using techniques like tile-based design and reconfigurable components.

Integrate into hybrid systems: Develop the full software stack—compilers, libraries, and integrations with frameworks like PyTorch and JAX—to make these specialized processors accessible and useful within larger, heterogeneous computing systems.
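Tile-based design can be illustrated with a matrix-vector product, assuming each physical core (for example, a crossbar) can only handle a fixed-size block. The large matrix is split into tiles, each tile is evaluated separately, and partial sums are accumulated digitally. The function name and tile sizes below are hypothetical; this is a generic illustration, not the paper's architecture.

```python
def tiled_matvec(matrix, vector, tile_rows=2, tile_cols=2):
    """Compute y = matrix @ vector by splitting the matrix into fixed-size tiles.

    Each tile models one small physical core that handles at most a
    tile_rows x tile_cols block; partial results are accumulated digitally in y.
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    y = [0.0] * n_rows
    for r0 in range(0, n_rows, tile_rows):
        for c0 in range(0, n_cols, tile_cols):
            # One "core" evaluation covering rows r0.. and columns c0.. (edge tiles may be smaller)
            for r in range(r0, min(r0 + tile_rows, n_rows)):
                y[r] += sum(matrix[r][c] * vector[c]
                            for c in range(c0, min(c0 + tile_cols, n_cols)))
    return y


M = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
v = [1, 0, -1]
print(tiled_matvec(M, v))  # [-2.0, -2.0, -2.0], same as the untiled product
```

The digital accumulation between tiles is exactly the kind of data movement that makes memory bandwidth and I/O central challenges at scale.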

Key hurdles remain, including the engineering complexity of controlling analog systems, the "sim2real" gap in hardware simulation, and the monumental task of building a mature software ecosystem. Beyond this roadmap, the authors issue a direct call to action for the entire research community: to actively identify problems where GPUs struggle (like sequential physical dynamics), to co-design new algorithms tailored for these physical systems, and to collaboratively build the open-source software tools and simulators needed to democratize this powerful new paradigm.

Conclusion

"Solving the compute crisis with physics-based ASICs" is more than a research paper; it is a manifesto for a new era of computing. It presents a clear, compelling, and well-reasoned argument for why the future of high-performance computing may lie not in purer digital abstractions, but in embracing the rich, complex, and powerful dynamics of the physical world. While the engineering challenges are formidable, the potential rewards, namely a sustainable and democratized future for AI and scientific discovery, are too significant to ignore. This paper offers a valuable and timely contribution, providing a visionary yet pragmatic roadmap for a field that may well define the next generation of computation.