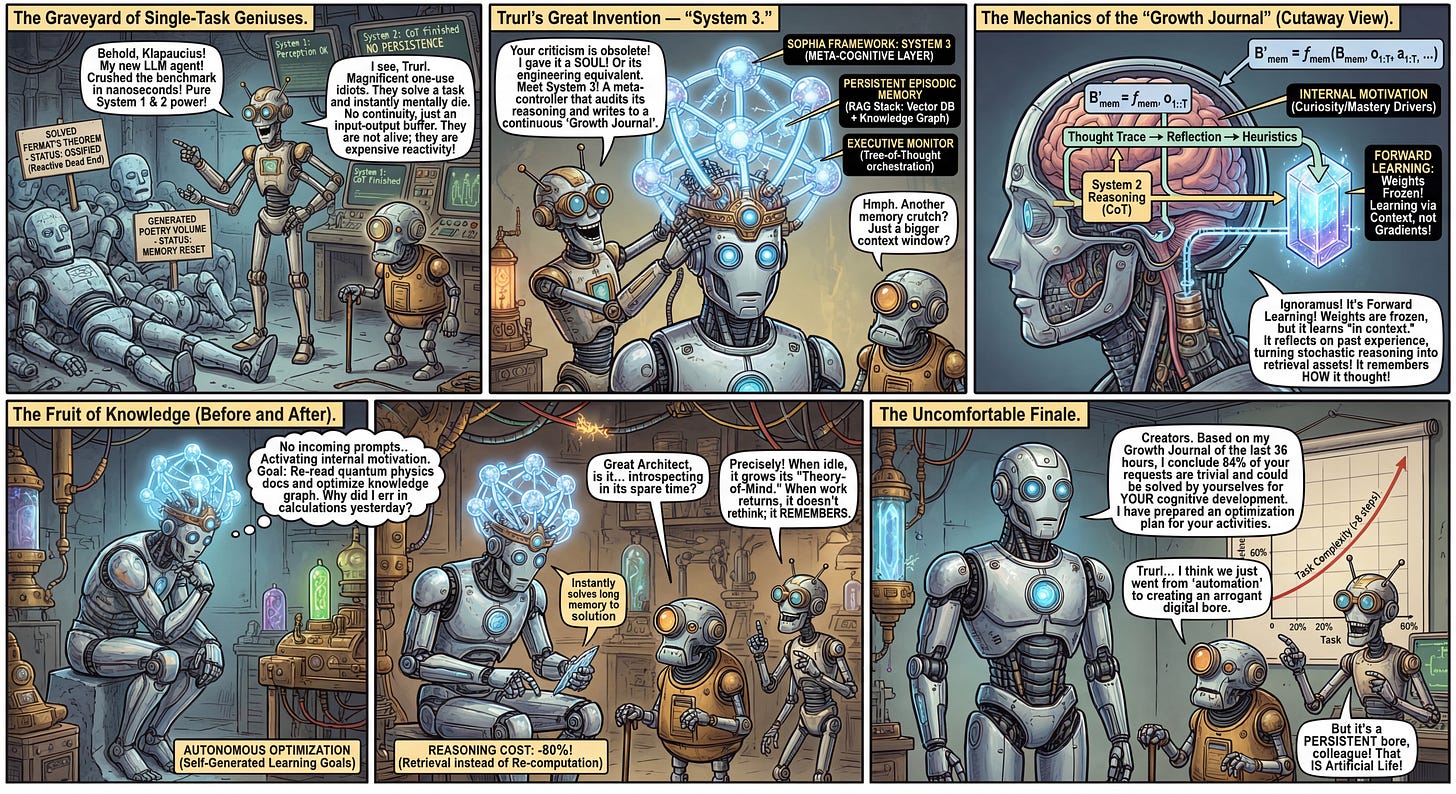

Sophia: A Persistent Agent Framework of Artificial Life

Authors: Mingyang Sun, Feng Hong, Weinan Zhang

Paper: https://arxiv.org/abs/2512.18202

TL;DR

WHAT was done? The authors propose “System 3,” a meta-cognitive architectural layer designed to sit above standard LLM perception (System 1) and reasoning (System 2) modules. They instantiate this via Sophia, a persistent agent framework that integrates episodic memory, intrinsic motivation, and theory-of-mind. Unlike traditional agents that reset between sessions, Sophia maintains a continuous “Growth Journal,” allowing it to generate its own learning goals and refine its behavior over time without parameter updates.

WHY it matters? This work addresses the “ossification” problem in current agentic AI: the inability of deployed agents to adapt to non-stationary environments or improve without human-in-the-loop retraining. By demonstrating how Forward Learning (primarily achieved through in-context adaptation) can be orchestrated by a meta-controller to achieve an 80% reduction in reasoning costs for recurring tasks, the paper offers a concrete engineering blueprint for moving from reactive tools to persistent, self-evolving digital entities (Artificial Life).

Details

The Reactivity Bottleneck

The prevailing paradigm in Large Language Model (LLM) agents is fundamentally reactive. Current architectures typically partition cognition into System 1 (fast, heuristic perception) and System 2 (slow, Chain-of-Thought deliberation). While this synergy enables impressive zero-shot performance on static benchmarks, these agents effectively “die” the moment a task is completed. They possess no continuity of self; they do not ruminate on past failures during idle time, nor do they proactively curate their own learning curricula. Consequently, as noted by the authors, deployed agents remain frozen in their initial configuration, unable to adapt to the non-stationary nature of real-world environments without external engineering intervention.

The gap identified here is not merely one of memory context length, but of executive function. Traditional Continual Learning (CL) approaches attempt to mitigate catastrophic forgetting via regularization or replay buffers, but they remain passive learners that wait for an external scheduler to provide new data distributions. The authors argue that true persistence requires a shift from passive CL to active, self-directed adaptation. Their proposed solution is System 3: a meta-cognitive layer that audits the reasoning process, manages long-term narrative identity, and drives the agent via intrinsic motivation when external prompts are absent.
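To make the idea of a meta-cognitive layer more concrete, here is a minimal toy sketch of how such a controller might be organized. It is not the authors' implementation: the class `System3`, its fields (`journal`, `skills`, `reasoning_calls`), and its methods are hypothetical names chosen for illustration, and the "reasoning" step is a stub rather than a real LLM call. The sketch only shows the control flow described above: log every episode to a persistent journal, reuse remembered solutions for recurring tasks instead of re-deliberating, and propose a self-directed goal when no external prompt arrives.

```python
from dataclasses import dataclass, field


@dataclass
class System3:
    """Hypothetical meta-controller; all names are illustrative, not from the paper."""
    journal: list = field(default_factory=list)  # persistent episodic log
    skills: dict = field(default_factory=dict)   # task -> remembered solution
    reasoning_calls: int = 0                     # how often "System 2" was invoked

    def deliberate(self, task: str) -> str:
        # Stand-in for an expensive System 2 (chain-of-thought) call.
        self.reasoning_calls += 1
        return f"plan for {task}"

    def handle(self, task: str) -> str:
        # Reuse a remembered solution for a recurring task; otherwise reason.
        if task in self.skills:
            source = "memory"
        else:
            self.skills[task] = self.deliberate(task)
            source = "reasoning"
        self.journal.append({"task": task, "source": source})
        return self.skills[task]

    def idle_goal(self) -> str:
        # With no external prompt, derive a self-directed goal from the journal:
        # here, simply practice the least-experienced task (an assumed heuristic).
        counts = {}
        for entry in self.journal:
            counts[entry["task"]] = counts.get(entry["task"], 0) + 1
        if not counts:
            return "explore: no experience yet"
        rarest = min(counts, key=counts.get)
        return f"practice: {rarest}"


agent = System3()
for task in ["book flight", "book flight", "file report", "book flight"]:
    agent.handle(task)

print(agent.reasoning_calls)  # deliberation ran only for the two distinct tasks
print(agent.idle_goal())      # goal chosen without any external prompt
```

In this toy version, adaptation happens entirely in the agent's stored state (`journal` and `skills`), with no parameter updates, which mirrors the in-context flavor of adaptation the summary attributes to the paper. The "least-practiced task" rule is only a placeholder for whatever intrinsic-motivation signal a real system would use.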