Towards a Physics Foundation Model

Authors: Florian Wiesner, Matthias Wessling, Stephen Baek

Paper: https://arxiv.org/abs/2509.13805

Code: https://github.com/FloWsnr/General-Physics-Transformer

Blog: https://flowsnr.github.io/blog/physics-foundation-model/

TL;DR

WHAT was done? The authors introduce the General Physics Transformer (GPhyT), a large-scale transformer model trained on a diverse 1.8 TB corpus of simulation data. GPhyT employs a novel hybrid architecture, acting as a “neural differentiator” that learns the temporal derivative of a physical system, which is then advanced in time by a standard numerical integrator. This approach allows a single, pre-trained model to simulate a wide range of disparate physical systems—from incompressible flows and thermal convection to shock waves and multi-phase dynamics—without being explicitly provided with the underlying governing equations.

WHY it matters? This work marks a significant step towards a “train once, deploy anywhere” Physics Foundation Model (PFM), a paradigm previously exclusive to domains like natural language processing. By demonstrating that a model can infer governing dynamics purely from context, GPhyT achieves zero-shot generalization to previously unseen physical systems and boundary conditions. It not only reaches up to 29x lower median Mean Squared Error (MSE) than specialized architectures on known tasks but also maintains stable, physically plausible predictions in long-term rollouts. This research provides evidence that learning generalizable physical principles from data alone is feasible, opening a path to a universal physics engine that could democratize high-fidelity simulations and accelerate scientific discovery.

Details

For years, the machine learning community has seen a stark divergence between the rapid generalization capabilities of Large Language Models (LLMs) and the highly specialized nature of models in scientific domains. While a single LLM can be prompted to perform countless tasks, physics-aware machine learning (PAML) models like Physics-Informed Neural Networks (PINNs) (https://doi.org/10.1016/j.jcp.2018.10.045) or Neural Operators like the FNO (https://arxiv.org/abs/2010.08895) have remained specialized solvers, requiring extensive retraining for each new physical system, boundary condition, or governing equation. This paper challenges that status quo, introducing a compelling vision for a Physics Foundation Model (PFM) and presenting the first strong evidence that such a universal model is achievable.

Methodology: From Physics-Informed to Physics-Inferred

The central innovation of this work is a paradigm shift from being informed by physics to inferring physics from context. The proposed General Physics Transformer (GPhyT) emulates the in-context learning abilities of LLMs. Instead of being fed explicit equations, it is given a “prompt”—a short sequence of prior physical states—and learns to predict the system’s evolution.

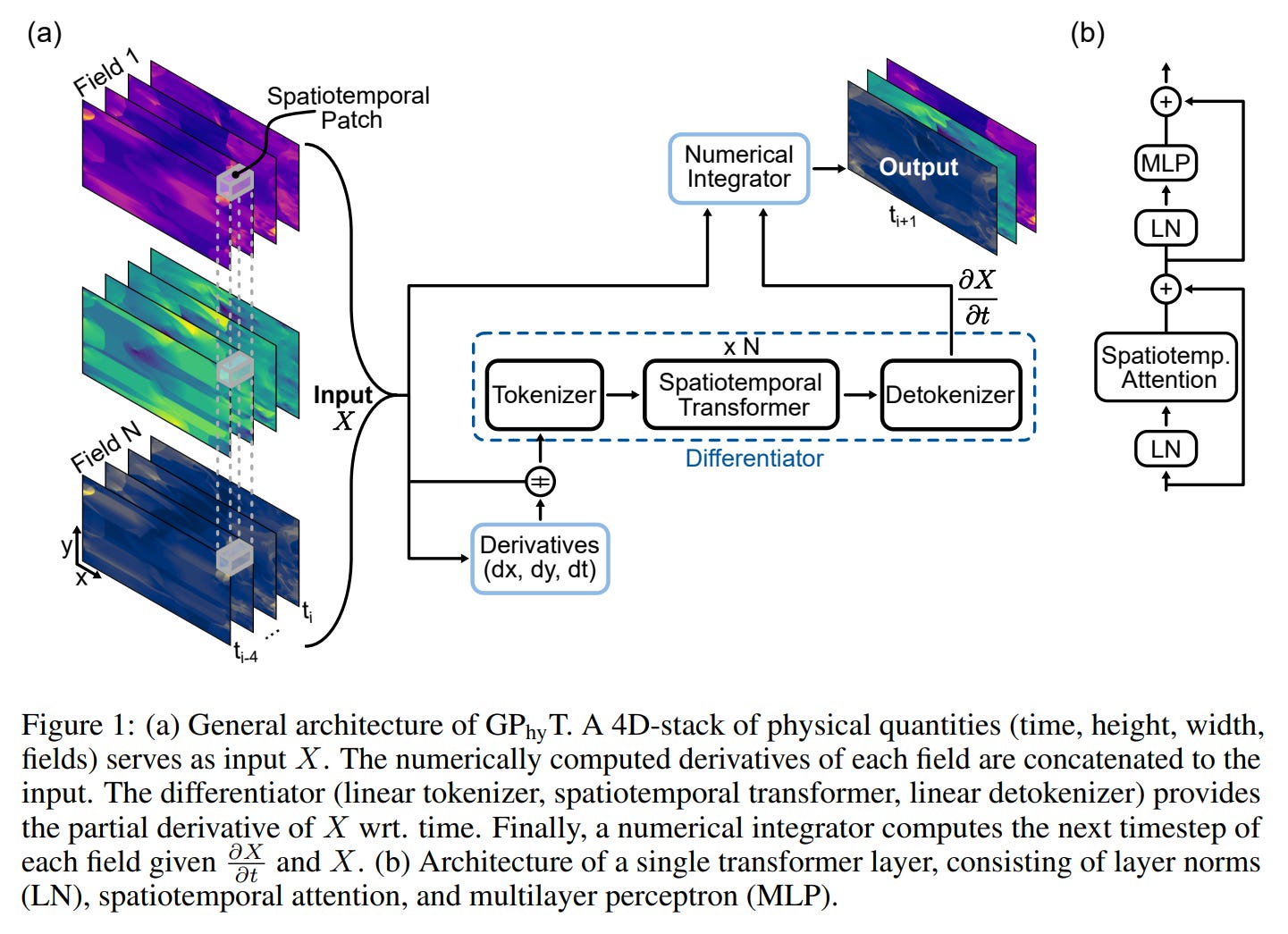

The model’s architecture is a hybrid of deep learning and classical numerical methods, inspired by Neural ODEs (https://arxiv.org/abs/1806.07366). It decouples the learning problem into two stages (Figure 1):

Neural Differentiator: A spatiotemporal transformer learns the partial derivative of the physical state

Xwith respect to time,∂X/∂t. The input is a 4D stack of physical fields (time, height, width, channels), which is tokenized into spatiotemporal “tubelets”. The choice of a transformer is key. Unlike convolutional architectures like UNet (which focus on local patterns) or Fourier Neural Operators (which model global phenomena in frequency space), the spatiotemporal self-attention mechanism allows GPhyT to dynamically identify and connect relevant features across vast distances in both space and time. This is critical for accurately modeling complex, non-local interactions, such as the evolution of turbulent eddies or the reflection of shock waves, and is a primary reason for its superior performance on challenging physical systems. To enhance performance, especially for phenomena with sharp gradients, the input is enriched by concatenating numerically computed first-order spatial and temporal derivatives.Numerical Integrator: A standard numerical integrator uses the learned derivative to extrapolate the system’s next state,

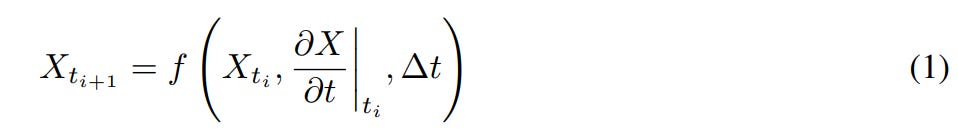

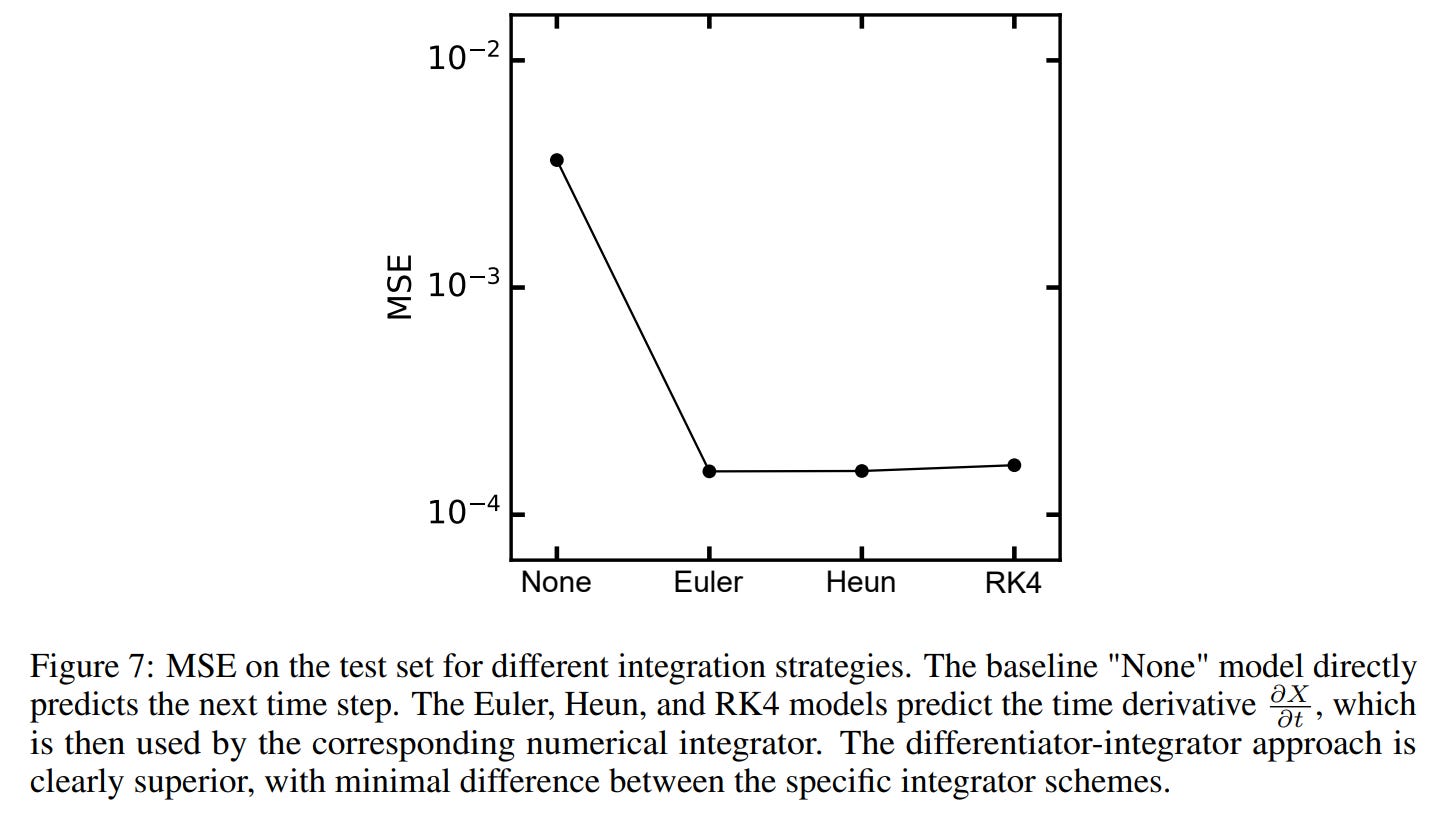

X_{t_{i+1}}. The general form is:An ablation study (Figure 7) revealed that this decoupled approach significantly outperforms direct prediction of the next state. Furthermore, the simple and computationally efficient first-order Forward Euler method performed on par with more complex, higher-order integrators like Runge-Kutta 4, justifying its selection.
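The two-stage design described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the function names, the tubelet sizes, the use of `np.gradient` for the derivative channels, and the toy differentiator (a simple backward difference standing in for the trained transformer) are all assumptions made for illustration. The sketch shows how a (time, height, width, channels) stack might be enriched with derivative channels, cut into tubelet tokens, and advanced by one Forward Euler step.

```python
import numpy as np

def add_derivative_channels(x, dt=1.0, dy=1.0, dx=1.0):
    """Append finite-difference d/dt, d/dy, d/dx to a (T, H, W, C) stack."""
    d_t = np.gradient(x, dt, axis=0)
    d_y = np.gradient(x, dy, axis=1)
    d_x = np.gradient(x, dx, axis=2)
    return np.concatenate([x, d_t, d_y, d_x], axis=-1)  # (T, H, W, 4C)

def to_tubelets(x, pt, ph, pw):
    """Split (T, H, W, C) into non-overlapping tubelets, one flat token each."""
    T, H, W, C = x.shape
    assert T % pt == 0 and H % ph == 0 and W % pw == 0
    x = x.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # group the three patch axes together
    return x.reshape(-1, pt * ph * pw * C)  # (num_tokens, token_dim)

def euler_step(history, dt, differentiator):
    """Forward Euler: X_{t_{i+1}} = X_{t_i} + dt * dX/dt."""
    return history[-1] + dt * differentiator(history)

# Toy data (assumption): a field that grows linearly in time, X(t) = 3 t.
T, H, W, C = 4, 8, 8, 2
dt = 0.5
times = dt * np.arange(T).reshape(T, 1, 1, 1)
history = 3.0 * times * np.ones((T, H, W, C))

def toy_differentiator(h):
    # Hypothetical stand-in for the trained transformer: backward difference.
    return (h[-1] - h[-2]) / dt

tokens = to_tubelets(add_derivative_channels(history, dt=dt), pt=2, ph=4, pw=4)
next_state = euler_step(history, dt, toy_differentiator)
print(tokens.shape)  # (8, 256)
```

In the actual model the transformer would consume the tubelet tokens and return ∂X/∂t; here the differentiator reads the raw history directly to keep the example short.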

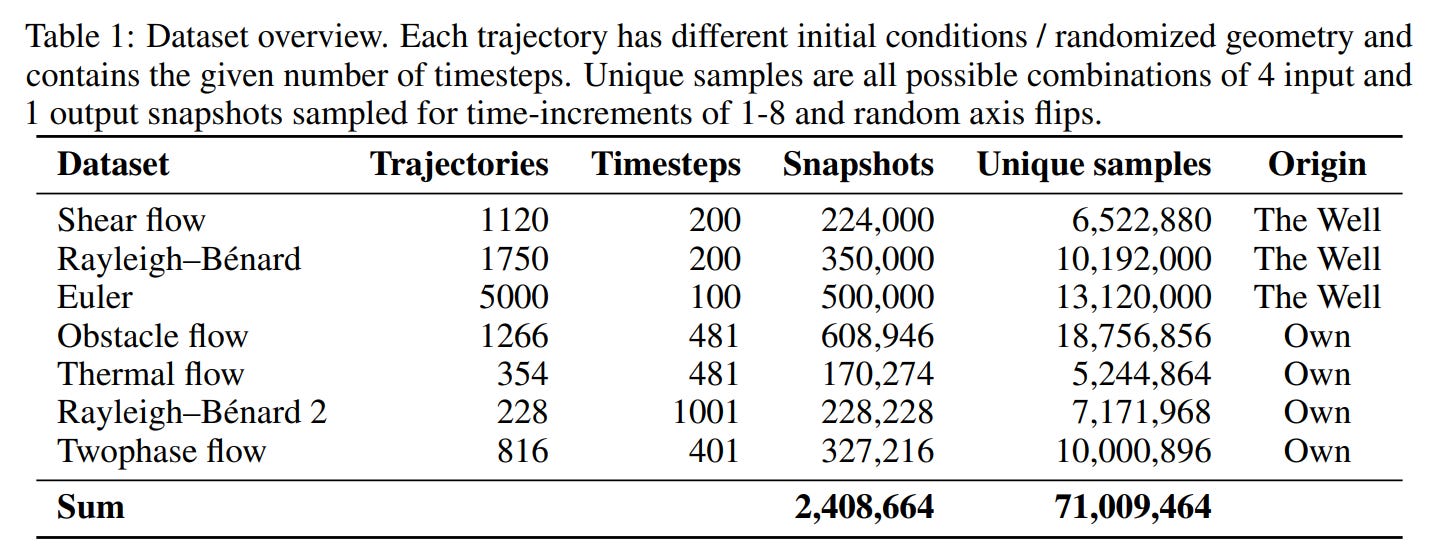

Underpinning this architecture is a massive and strategically curated 1.8 TB dataset, combining public benchmarks (“The Well”) with four new custom-generated datasets covering a wide range of fluid dynamics and heat transfer problems (Table 1). Two key data augmentation strategies were employed to foster in-context learning:

Variable Time Increments: Sub-sampling trajectories with different time steps (Δt) forces the model to become invariant to sampling frequency and infer the temporal scale from the dynamics presented in the prompt.

Per-Dataset Normalization: Normalizing each dataset independently compels the model to infer absolute magnitudes and spatial scales from context, rather than memorizing them.
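A minimal sketch of these two strategies follows. The helper names, the toy dataset names, and the choice of a per-channel z-score are assumptions for illustration; the summary above only states that trajectories are sub-sampled with different time steps and that each dataset is normalized independently.

```python
import numpy as np

def subsample(trajectory, stride, start=0):
    """Keep every `stride`-th frame; the effective dt becomes stride * base dt."""
    return trajectory[start::stride]

def normalize_per_dataset(datasets, eps=1e-8):
    """Z-score each dataset with its own per-channel statistics (assumed scheme)."""
    normalized = {}
    for name, data in datasets.items():
        axes = tuple(range(data.ndim - 1))  # every axis except channels
        mean = data.mean(axis=axes, keepdims=True)
        std = data.std(axis=axes, keepdims=True)
        normalized[name] = (data - mean) / (std + eps)
    return normalized

# Synthetic stand-ins with very different magnitudes, shaped (N, T, H, W, C).
rng = np.random.default_rng(0)
datasets = {
    "toy_flow": rng.normal(5.0, 2.0, size=(3, 10, 8, 8, 2)),
    "toy_heat": rng.normal(300.0, 50.0, size=(3, 10, 8, 8, 1)),
}
normalized = normalize_per_dataset(datasets)

# The same trajectory seen at two different time increments.
fine = subsample(normalized["toy_flow"][0], stride=1)
coarse = subsample(normalized["toy_flow"][0], stride=4)
```

After normalization both toy datasets have roughly zero mean and unit variance, so absolute magnitude is no longer available as a shortcut and has to be inferred from the prompt, which is the effect the strategy aims for.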

Experimental Results: A Trifecta of Breakthroughs

The paper’s experiments validate GPhyT’s capabilities across three critical areas, answering the research questions posed in the introduction.

1. Superior Multi-Physics Learning

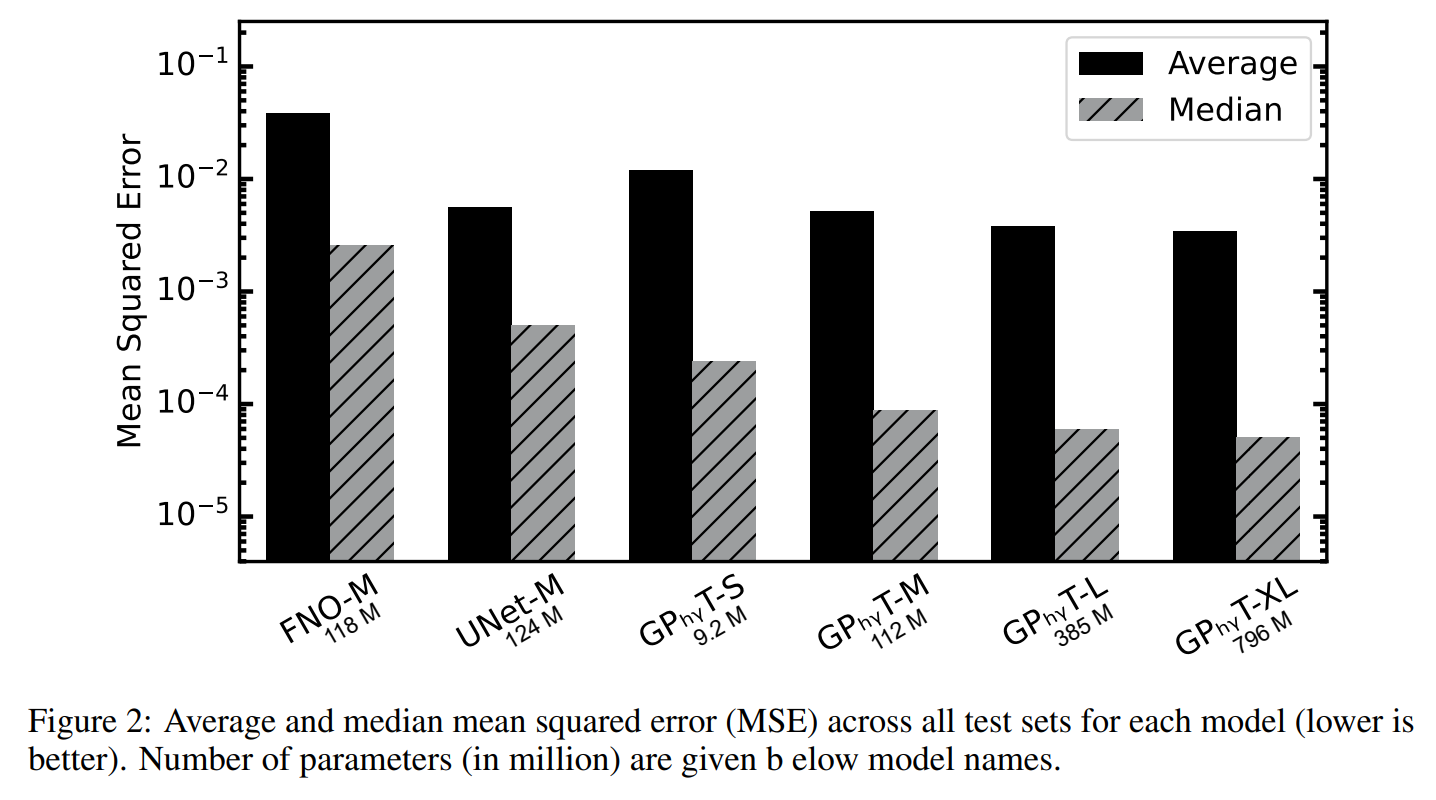

When evaluated on a diverse test set spanning seven different physical systems, GPhyT demonstrated substantially better performance than established specialized architectures. The medium-sized GPhyT-M (112M parameters) achieved up to a 29x reduction in median Mean Squared Error (MSE) compared to a Fourier Neural Operator (FNO-M, 118M parameters) of similar size (Figure 2).
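To make the metric concrete, the sketch below shows one plausible way to compute a median MSE and the resulting improvement factor. It assumes the MSE is taken per test sample and the median is taken across samples; the function names and the synthetic arrays are illustrative and do not reproduce the paper's evaluation code or results.

```python
import numpy as np

def median_mse(pred, target):
    """MSE per sample (all non-batch axes averaged), then median over samples."""
    per_sample = ((pred - target) ** 2).mean(axis=tuple(range(1, pred.ndim)))
    return float(np.median(per_sample))

def improvement_factor(mse_baseline, mse_model):
    """How many times lower the model's error is than the baseline's."""
    return mse_baseline / mse_model

# Synthetic example: constant offsets give known per-sample errors.
target = np.zeros((5, 4, 4, 1))
pred_model = target + 0.1     # per-sample MSE = 0.01
pred_baseline = target + 0.5  # per-sample MSE = 0.25

factor = improvement_factor(
    median_mse(pred_baseline, target), median_mse(pred_model, target)
)
```

Using the median rather than the mean keeps a few badly predicted samples from dominating the comparison.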

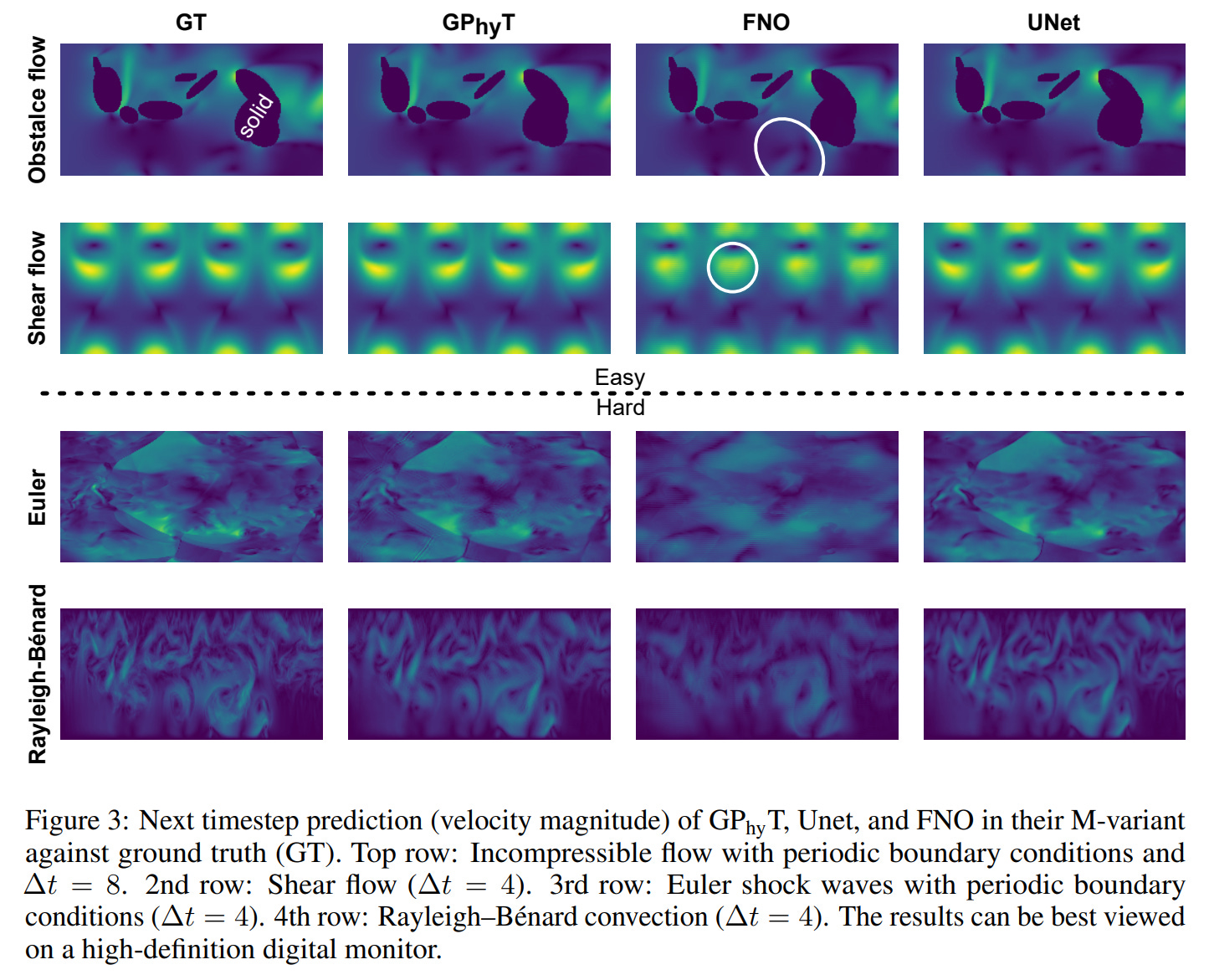

Remarkably, the paper shows that even the smallest variant, GPhyT-S (9.2M parameters), outperforms the far larger FNO-M (118M parameters) and UNet (124M parameters) models. This demonstrates that the performance gains stem from a fundamentally more effective architecture, not just from scaling up model size. Qualitatively, GPhyT excels at capturing fine-grained details like vortical structures and, critically, maintains sharp discontinuities in shock waves and convective plumes where other models produce overly smoothed or diffused predictions (Figure 3).

2. Emergent Zero-Shot Generalization

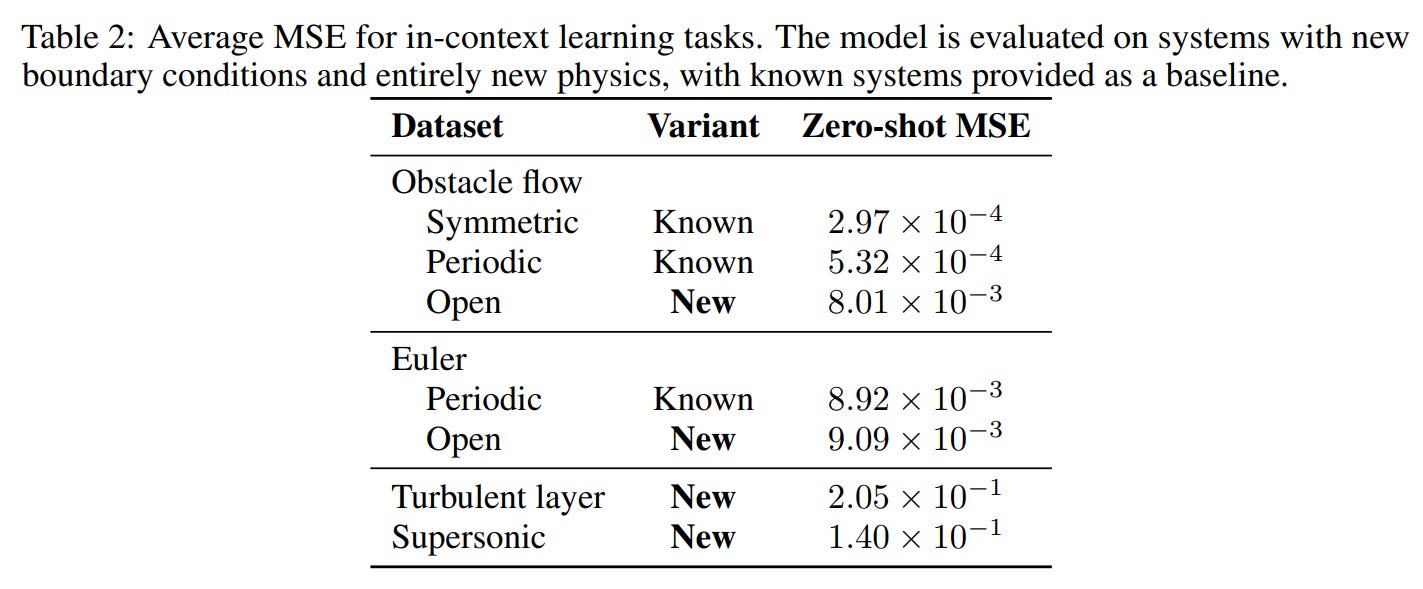

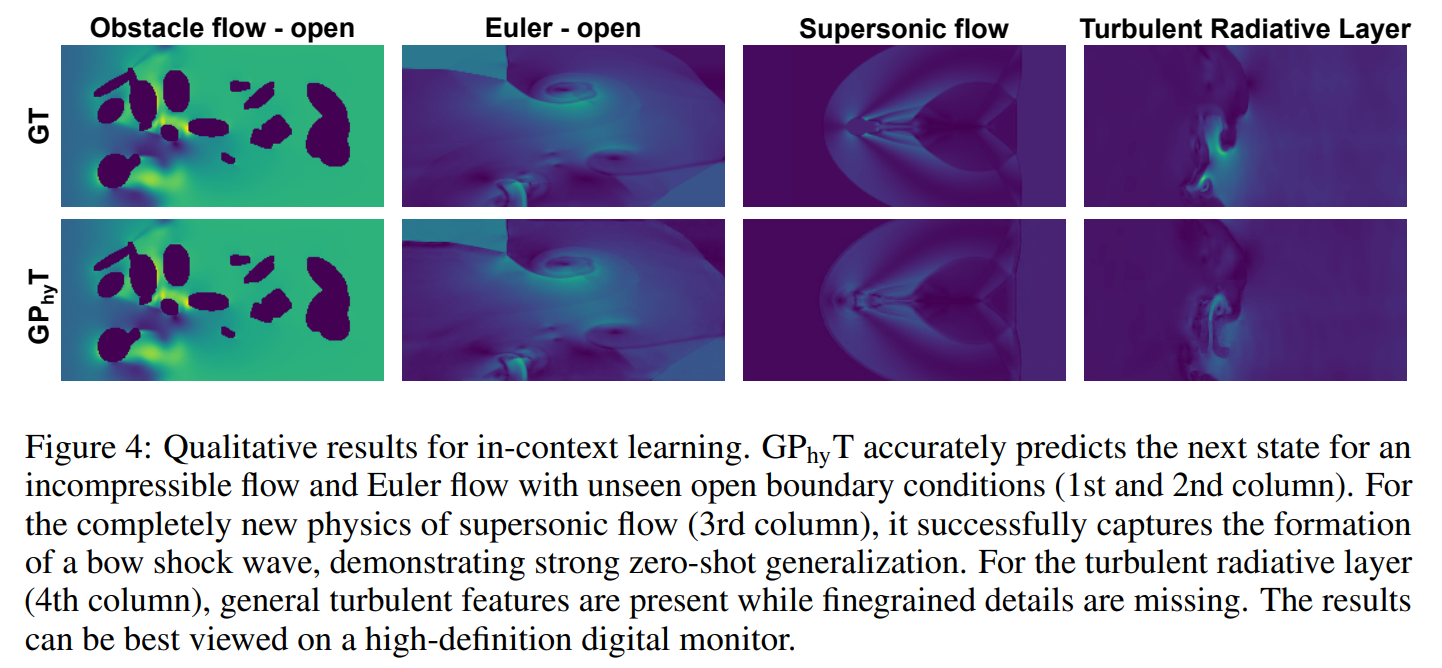

The most paradigm-shifting result is GPhyT’s ability to perform zero-shot generalization. The model was tested on tasks it had never encountered during training, with striking results (Table 2, Figure 4):

New Boundary Conditions: When presented with systems using “open” boundary conditions (absent from training data), GPhyT inferred the new dynamics and produced predictions with an MSE nearly identical to its performance on known systems.

Entirely Novel Physics: Even more impressively, the model was tested on completely new physical phenomena—supersonic flow and a turbulent radiative layer. It successfully captured the essential dynamics, correctly forming a bow shock wave in the supersonic case and generating plausible turbulent features. This is a powerful demonstration that GPhyT has learned transferable physical principles rather than simply memorizing patterns.

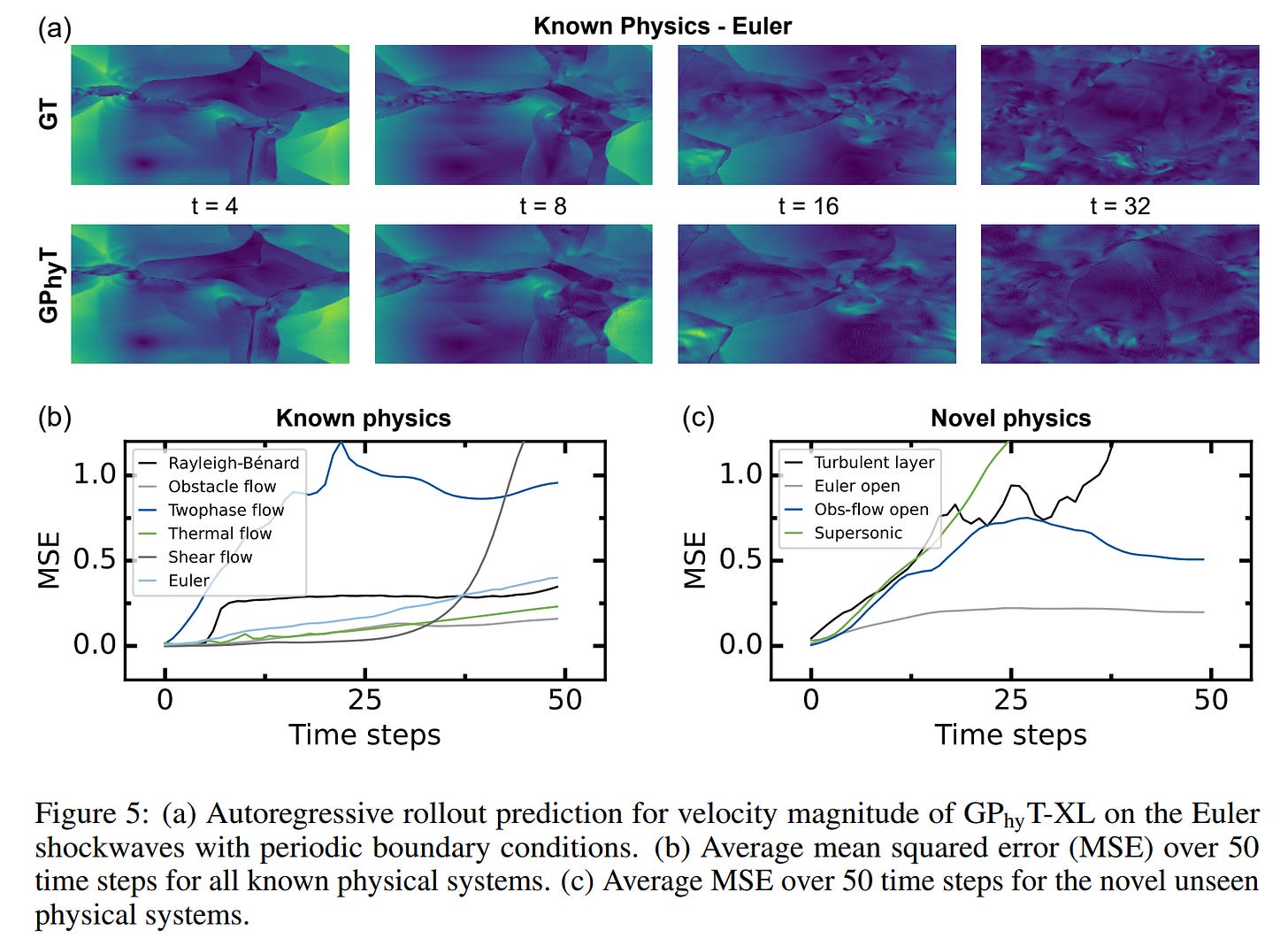

3. Stable Long-Range Predictions

A crucial test for any physics surrogate is its stability over long time horizons. GPhyT was evaluated in autoregressive rollouts for 50 timesteps. While some high-frequency detail is lost over time due to error accumulation, the model successfully preserves the global dynamics and physical plausibility of the flow (Figure 5). For most systems, the error accumulates in a near-linear fashion, demonstrating a remarkable degree of stability for a purely data-driven model.
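An autoregressive rollout of this kind can be sketched as a loop that feeds each prediction back into the context window. The code below is an illustration under stated assumptions: `rollout` is a hypothetical helper, the Forward Euler update mirrors the integrator described earlier, and the toy differentiator encodes a known decay law (dX/dt = -kX) in place of the trained model.

```python
import numpy as np

def rollout(initial_history, dt, differentiator, n_steps):
    """Repeatedly predict the next state and slide it into the context window."""
    history = np.array(initial_history, dtype=float)
    predictions = []
    for _ in range(n_steps):
        next_state = history[-1] + dt * differentiator(history)  # Forward Euler
        predictions.append(next_state)
        # Drop the oldest frame, append the prediction: window length stays fixed.
        history = np.concatenate([history[1:], next_state[None]], axis=0)
    return np.stack(predictions)  # (n_steps, H, W, C)

# Toy system (assumption): exponential decay with rate k.
k, dt, n_steps = 0.1, 0.5, 50
window_times = dt * np.arange(-3, 1).reshape(4, 1, 1, 1)  # t = -1.5 ... 0
initial_history = np.exp(-k * window_times) * np.ones((4, 4, 4, 1))

def toy_differentiator(h):
    # Hypothetical stand-in for the trained model.
    return -k * h[-1]

predictions = rollout(initial_history, dt, toy_differentiator, n_steps)

# Per-step MSE against the exact solution exp(-k t), to inspect error accumulation.
future_times = dt * np.arange(1, n_steps + 1).reshape(-1, 1, 1, 1)
exact = np.exp(-k * future_times) * np.ones_like(predictions)
per_step_mse = ((predictions - exact) ** 2).mean(axis=(1, 2, 3))
```

Because every step consumes earlier predictions, any error made by the differentiator is carried forward, which is why per-step error curves like `per_step_mse` are the natural diagnostic for rollout stability.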

Limitations and Future Outlook

The authors are candid about the current limitations. The model is presently constrained to 2D systems, has a fixed spatial resolution, and its long-term accuracy, while stable, does not yet match that of traditional numerical solvers. The physics coverage is also limited to fluid dynamics and heat transfer.

Future work will focus on addressing these challenges by scaling the model to 3D, incorporating a wider variety of physical domains (e.g., mechanics, chemistry, optics), enabling variable-resolution capabilities, and further improving long-term stability.

Conclusion

This paper presents a landmark achievement in the pursuit of general-purpose AI for science. By successfully demonstrating that a single transformer-based model can learn to infer and simulate diverse physical phenomena from context alone, the authors have laid a robust foundation for the development of a universal Physics Foundation Model. GPhyT is more than just an architectural innovation; it is a proof-of-concept that the “train once, deploy anywhere” paradigm is not limited to human language but can be extended to the fundamental language of the universe: physics. A universal physics engine could dramatically accelerate research and development in critical areas—from designing more efficient aircraft by simulating airflow under novel conditions to developing clean energy by modeling plasma in fusion reactors, all without the need to develop bespoke solvers for each new problem. By making high-fidelity simulation more accessible, GPhyT paves the way for faster innovation across countless scientific and engineering disciplines.