Training convolutional neural networks with the Forward–Forward Algorithm

Authors: Riccardo Scodellaro, Ajinkya Kulkarni, Frauke Alves, Matthias Schröter

Paper: https://www.nature.com/articles/s41598-025-26235-2

Code: https://doi.org/10.5281/zenodo.11571949 (not available at the moment), based on https://github.com/loeweX/Forward-Forward

TL;DR

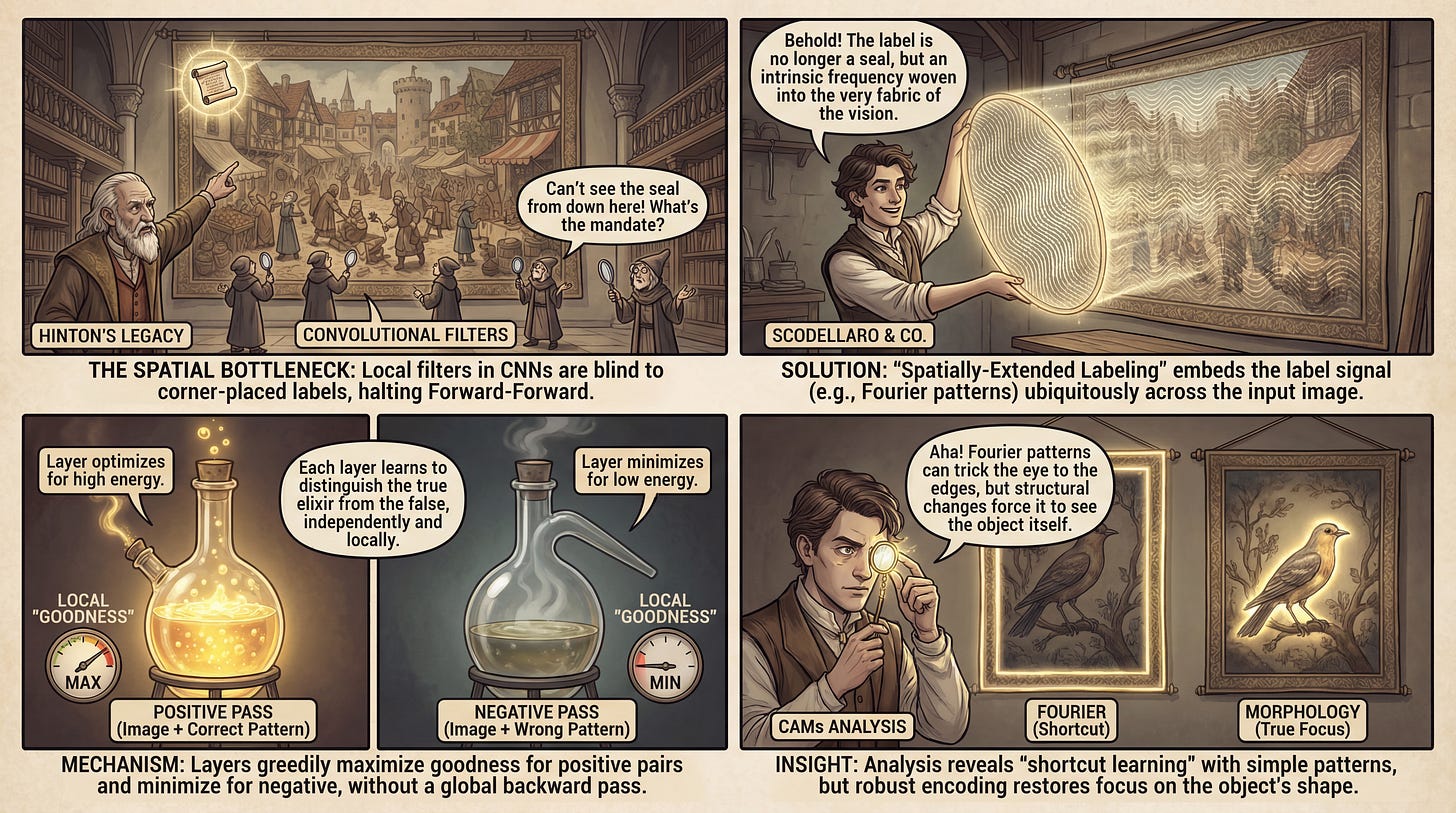

WHAT was done? The authors successfully extended Geoffrey Hinton’s Forward-Forward (FF) algorithm—originally restricted to fully connected networks—to Convolutional Neural Networks (CNNs). They achieved this by introducing “spatially-extended labeling,” a technique that superimposes label information (via Fourier patterns or morphological distortions) across the entire input image, allowing convolutional filters to access label data locally at any spatial position.

WHY it matters? This addresses a critical architectural limitation in non-backpropagation learning. Standard FF relies on localized label encoding (like a one-hot corner), which fails in CNNs where shared weights scan the whole image. By demonstrating that CNNs can be trained via local “goodness” maximization on complex datasets like CIFAR-100, this work advances the viability of biologically plausible, low-memory training methods suitable for neuromorphic hardware.

Details

The Context: Moving Beyond Backpropagation

To understand the significance of this work, we must first revisit the foundational shift proposed by Geoffrey Hinton. The Forward-Forward algorithm (see also) attempts to replace the global error signal of backpropagation with local learning rules. In the standard FF paradigm, the network does not minimize the difference between an output and a target. Instead, it maximizes a “goodness” score for data containing positive patterns (real images with correct labels) and minimizes it for negative patterns (real images with incorrect labels). This decouples the layers, allowing them to learn features greedily without storing a computation graph for the backward pass.

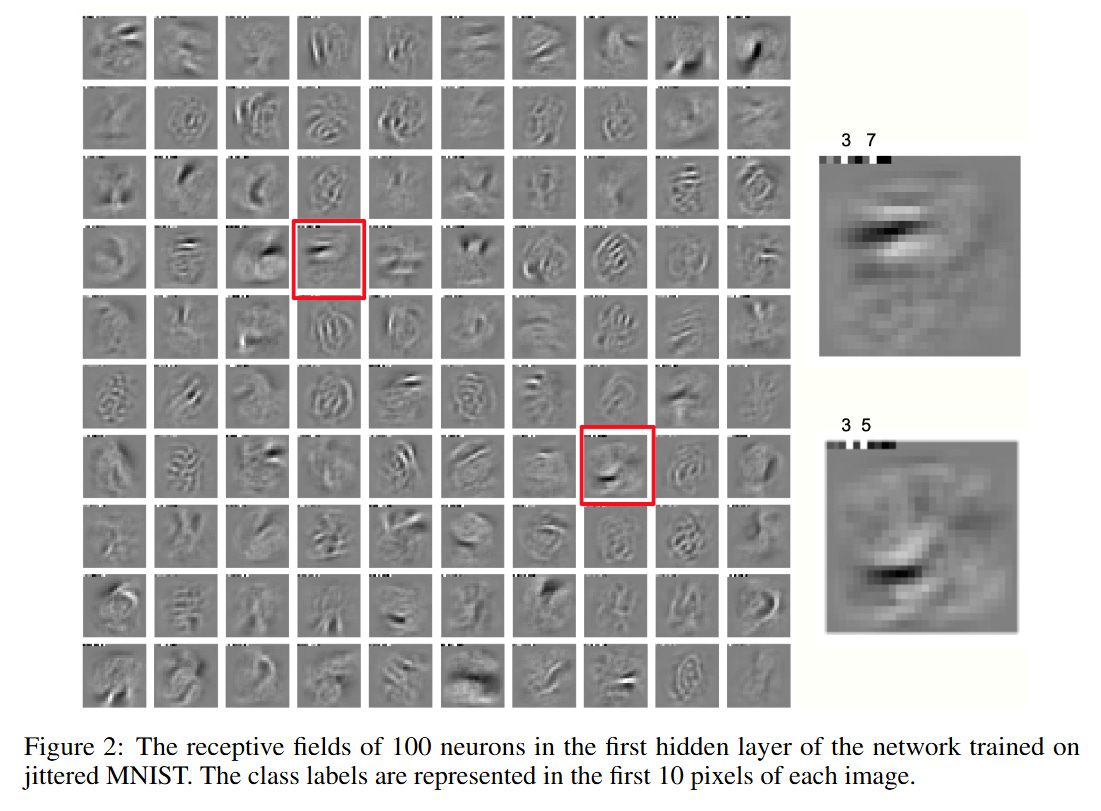

However, a major bottleneck emerged when applying FF to computer vision. Hinton’s original MNIST implementation embedded the label as a “one-hot” vector in the top-left corner of the image.

For a fully connected network, this is sufficient. But for a Convolutional Neural Network (CNN), which relies on translation invariance and local receptive fields, this approach fails. A filter scanning the bottom-right of an image has no access to the label in the top-left, making local supervised learning impossible. Scodellaro et al. tackle this specific “spatial bottleneck” by proposing a mechanism to distribute the label signal ubiquitously across the input.