AI Meets Brain: Memory Systems from Cognitive Neuroscience to Autonomous Agents

Authors: Jiafeng Liang, Hao Li, Chang Li, Jiaqi Zhou, Shixin Jiang, Zekun Wang, Changkai Ji, Zhihao Zhu, Runxuan Liu, Tao Ren, Jinlan Fu, See-Kiong Ng, Xia Liang, Ming Liu, and Bing Qin

Paper: https://arxiv.org/abs/2512.23343

Code: https://github.com/AgentMemory/Huaman-Agent-Memory

TL;DR

WHAT was done? The authors present a comprehensive survey that connects principles from cognitive neuroscience with the architecture of agents built on large language models (LLMs). They propose a unified taxonomy of agent memory that mirrors biological systems: it distinguishes episodic memory (experiences) from semantic memory (knowledge) and maps the memory lifecycle of formation, storage, retrieval, and updating.
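To make the taxonomy concrete, here is a minimal Python sketch, assuming a simple in-memory store. Episodic records hold timestamped experiences, semantic records hold distilled facts, and the four lifecycle stages become methods. The names `MemoryStore`, `form`, `store`, `retrieve`, and `update` are hypothetical illustrations, not the survey's API, and retrieval uses naive word overlap in place of embedding search.

```python
from dataclasses import dataclass, field
from enum import Enum
from itertools import count
from typing import Optional


class MemoryType(Enum):
    EPISODIC = "episodic"  # a specific experience: what happened, and when
    SEMANTIC = "semantic"  # a general fact, detached from any single episode


@dataclass
class MemoryRecord:
    id: int
    kind: MemoryType
    content: str
    timestamp: int


@dataclass
class MemoryStore:
    """Hypothetical store mapping the four lifecycle stages onto methods."""
    records: dict = field(default_factory=dict)
    _ids: count = field(default_factory=count)

    def form(self, kind: MemoryType, content: str, timestamp: int) -> MemoryRecord:
        # Formation: turn raw input into a structured, typed record.
        return MemoryRecord(next(self._ids), kind, content.strip(), timestamp)

    def store(self, record: MemoryRecord) -> int:
        # Storage: persist the record outside the model's parameters.
        self.records[record.id] = record
        return record.id

    def retrieve(self, query: str, kind: Optional[MemoryType] = None,
                 k: int = 3) -> list:
        # Retrieval: rank by shared words (a stand-in for embedding similarity),
        # breaking ties in favor of newer records.
        q = set(query.lower().split())
        scored = []
        for r in self.records.values():
            if kind is not None and r.kind is not kind:
                continue
            overlap = len(q & set(r.content.lower().split()))
            if overlap > 0:
                scored.append((overlap, r.timestamp, r))
        scored.sort(key=lambda t: (-t[0], -t[1]))
        return [r for _, _, r in scored[:k]]

    def update(self, record_id: int, new_content: str, timestamp: int) -> None:
        # Updating: revise a memory when newer evidence supersedes it.
        rec = self.records[record_id]
        rec.content, rec.timestamp = new_content.strip(), timestamp
```

For example, storing "User prefers window seats" as semantic memory and later updating it to "User prefers aisle seats" means a query such as "which seats does the user prefer" returns only the revised fact. Word overlap is the weakest part of this sketch; a practical system would substitute vector similarity.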

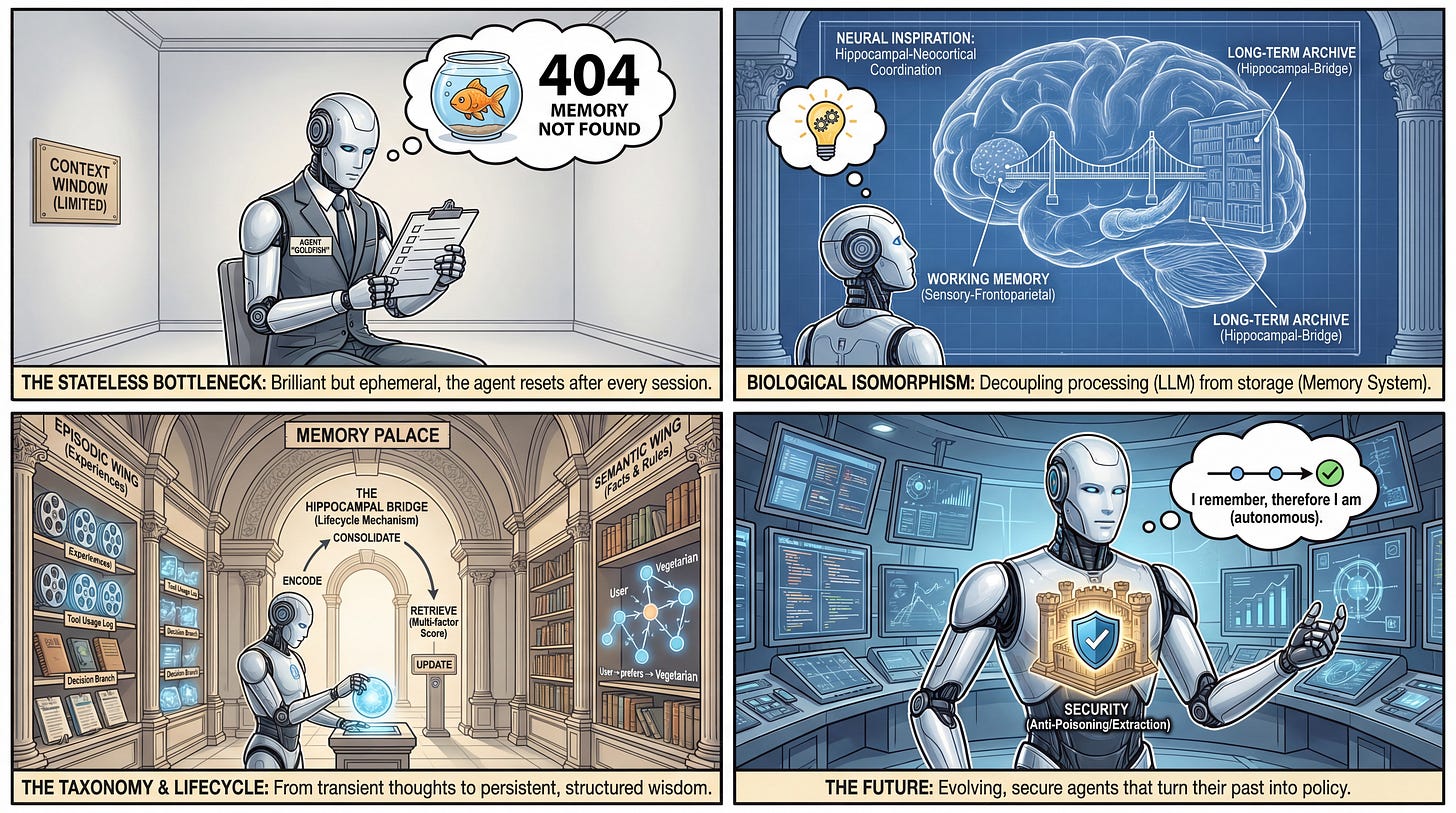

WHY it matters? This work addresses the “statelessness bottleneck” of current AI systems. LLMs encode vast parametric knowledge, but they lack the persistent, evolving identity that long-horizon agency requires. By formalizing memory not merely as a retrieval mechanism (as in retrieval-augmented generation, RAG) but as a dynamic cognitive process of formation, consolidation, and forgetting, the survey offers a blueprint for turning agents from passive responders into continuous learners capable of self-evolution.
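Consolidation and forgetting can be illustrated with a toy Python sketch built on two deliberately simple rules that the survey does not prescribe. First, an episodic trace's strength decays exponentially with age (an Ebbinghaus-style curve), and traces below a threshold are dropped. Second, any fact observed in at least `min_repeats` episodes is promoted to semantic memory. The names `Episode`, `strength`, `forget`, `consolidate`, `half_life`, `threshold`, and `min_repeats` are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Episode:
    fact: str            # the proposition this experience supports
    created_at: float
    importance: float = 1.0


def strength(ep: Episode, now: float, half_life: float) -> float:
    # Exponential decay: strength halves every `half_life` time units.
    age = now - ep.created_at
    return ep.importance * math.exp(-math.log(2) * age / half_life)


def forget(episodes: list, now: float, half_life: float = 10.0,
           threshold: float = 0.25) -> list:
    # Forgetting: drop traces whose decayed strength falls below the threshold.
    return [ep for ep in episodes if strength(ep, now, half_life) >= threshold]


def consolidate(episodes: list, semantic: set, min_repeats: int = 2) -> set:
    # Consolidation: a fact recurring across enough episodes becomes
    # context-free semantic knowledge (a loose analogue of hippocampal replay).
    counts = Counter(ep.fact for ep in episodes)
    return semantic | {fact for fact, n in counts.items() if n >= min_repeats}
```

Given episodes created at times 0 and 5 (both "user likes jazz"), 1 ("meeting moved to 3pm"), and 28 ("deadline is Friday"), running `consolidate` and then `forget` at time 30 with the defaults drops the three old traces but keeps "user likes jazz" as a semantic fact. Running consolidation before forgetting is itself an assumption of this sketch.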

Another recent survey on agent memory is Memory in the Age of AI Agents.

Details

The Statelessness Bottleneck and Biological Isomorphism

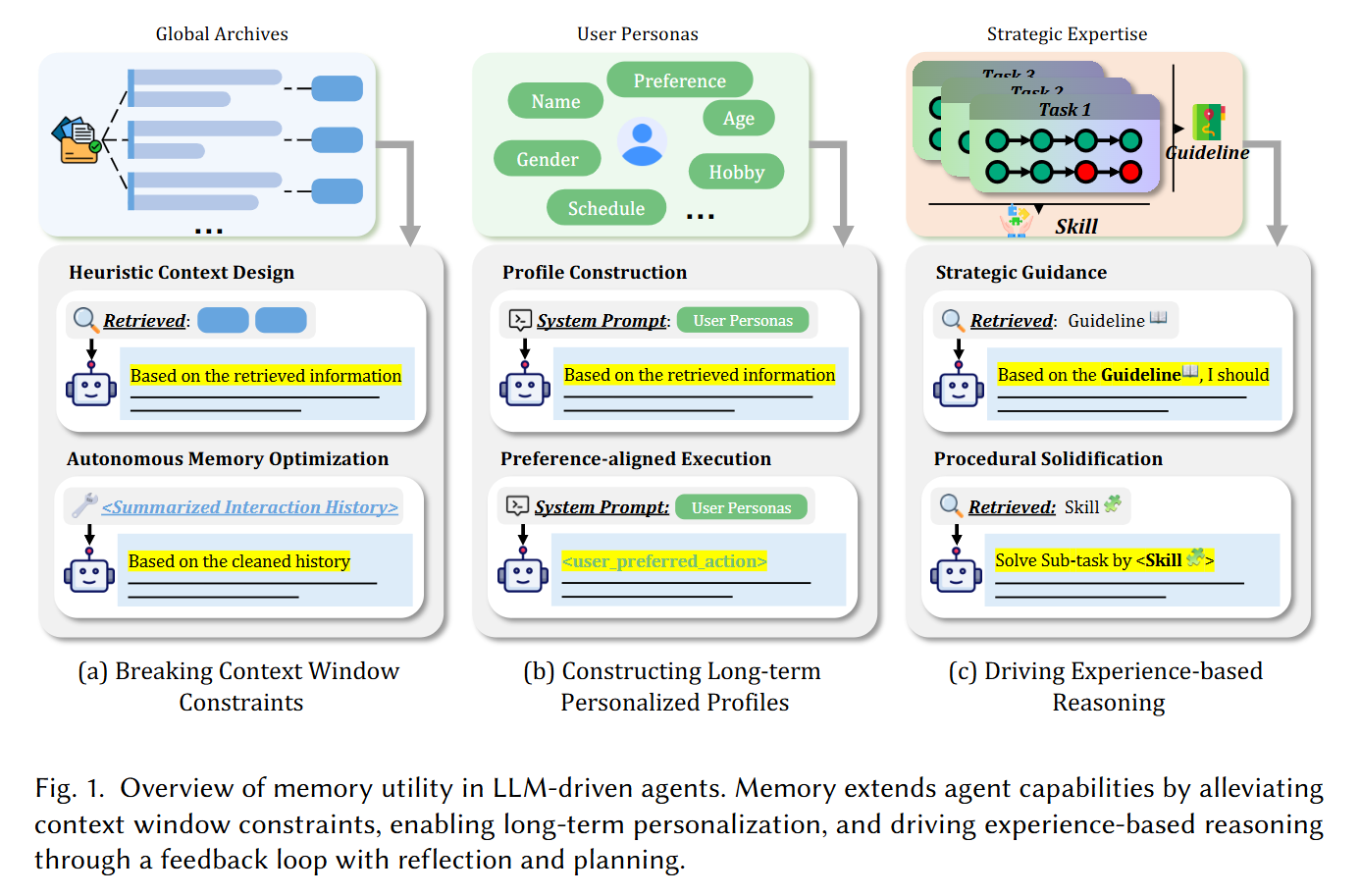

The central conflict in current agentic AI is the tension between static pre-trained parameters and the dynamic demands of autonomous agents. Standard LLMs exhibit a “Goldfish effect”: they reset after every session, and within a session they are bounded by the context window, whose attention cost grows quadratically with length and whose middle portion is often underused (the “lost-in-the-middle” phenomenon). The authors argue that overcoming this requires an architectural isomorphism with the human brain. Just as the brain relies on a Sensory-Frontoparietal Network for short-term working memory and on Hippocampal-Neocortical coordination for long-term consolidation, agent architectures should decouple processing (the LLM) from storage (the memory). The survey therefore moves beyond simple vector-database lookups and frames memory as a management system that governs the agent’s identity and temporal continuity (Figure 1).
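The decoupling argument can be sketched as an agent loop in which the model is a pure, stateless function and all persistence lives outside it. This is a minimal Python sketch under stated assumptions: `fake_llm` is a hypothetical stand-in for any chat-completion call, the working memory is a fixed-size buffer (echoing the bounded context window), and turns evicted from it are written to a long-term list and recalled by word overlap. None of these names come from the survey or its repository.

```python
from collections import deque
from dataclasses import dataclass, field


def fake_llm(prompt: str) -> str:
    # Hypothetical stateless model: output depends only on this prompt.
    return f"[reply conditioned on {len(prompt.splitlines())} context lines]"


@dataclass
class Agent:
    working_capacity: int = 4                      # short-term buffer size
    working: deque = field(default_factory=deque)  # working memory
    long_term: list = field(default_factory=list)  # long-term store

    def _recall(self, query: str, k: int = 2) -> list:
        # Retrieve long-term items that share at least one word with the query.
        q = set(query.lower().split())
        hits = [m for m in self.long_term if q & set(m.lower().split())]
        return hits[-k:]  # items are appended in order, so these are the newest

    def _remember(self, line: str) -> None:
        # Append to working memory; evict the oldest line to long-term storage.
        self.working.append(line)
        if len(self.working) > self.working_capacity:
            self.long_term.append(self.working.popleft())

    def step(self, user_msg: str) -> str:
        recalled = self._recall(user_msg)
        prompt = "\n".join(recalled + list(self.working) + [f"user: {user_msg}"])
        reply = fake_llm(prompt)             # processing: no state kept inside
        self._remember(f"user: {user_msg}")  # storage: lives in the agent
        self._remember(f"agent: {reply}")
        return reply
```

After three exchanges with a capacity of four, the first user turn ("my name is Ada") has been evicted from working memory, yet asking "what is my name" still places it back into the prompt through recall. The model itself never changes; only the external memory does.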