Context Engineering 2.0: The Context of Context Engineering

Authors: Qishuo Hua, Lyumanshan Ye, Dayuan Fu, Yang Xiao, Xiaojie Cai, Yunze Wu, Jifan Lin, Junfei Wang, Pengfei Liu

Paper: https://arxiv.org/abs/2510.26493

Code: https://github.com/GAIR-NLP/SII-CLI

TL;DR

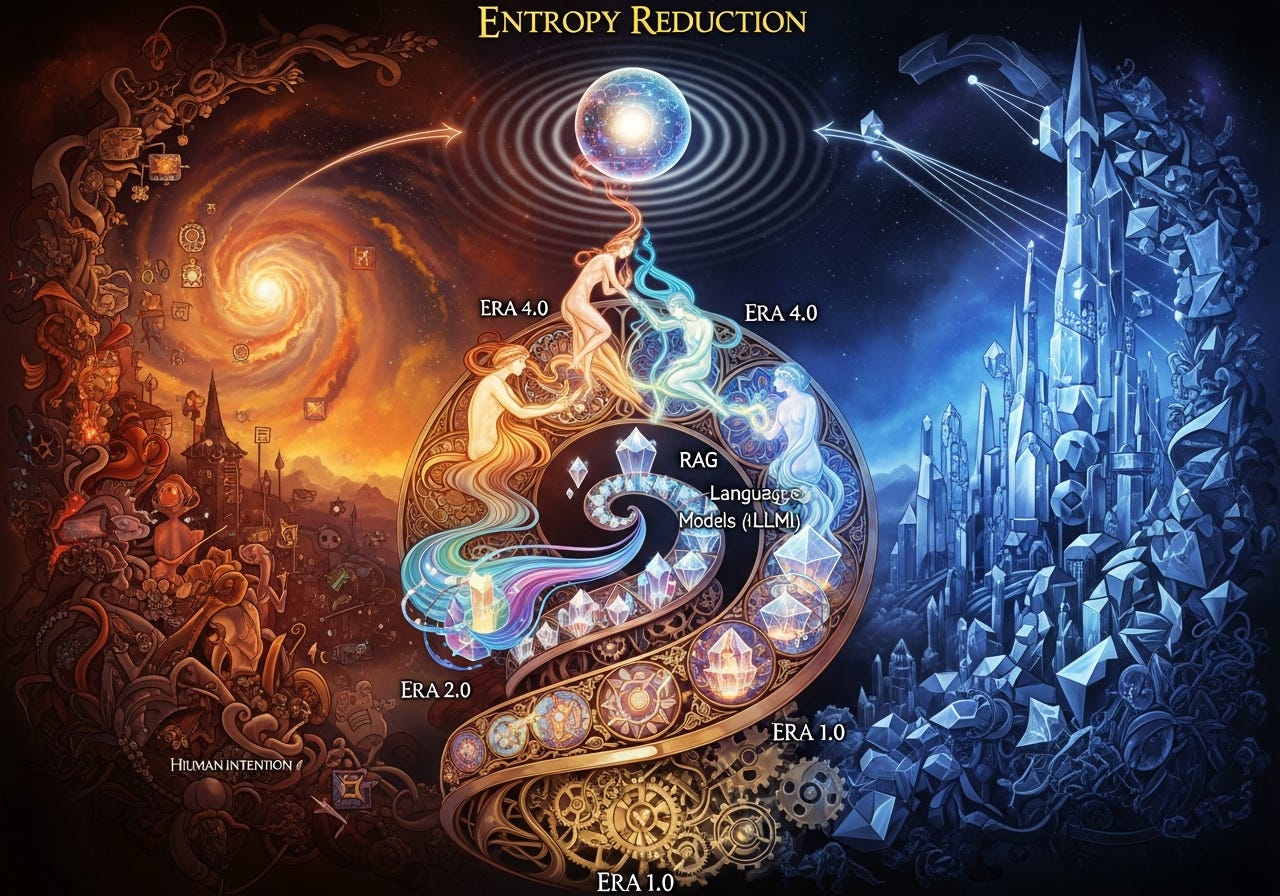

WHAT was done? This paper reframes “context engineering” from a recent LLM-era trend into a long-evolving discipline with a history spanning over two decades. It establishes a systematic theoretical foundation, defining the practice as a process of entropy reduction—transforming high-entropy human intentions into low-entropy, machine-understandable formats. The authors introduce a novel four-stage evolutionary model (Context Engineering 1.0 to 4.0) that maps the discipline’s progression against the increasing intelligence of machines, from primitive computation to speculative superhuman AI. The framework organizes current practices into three core dimensions: context collection, management, and usage, providing a comprehensive taxonomy of design patterns for building sophisticated AI agents.

WHY it matters? This work provides a crucial intellectual anchor for a field currently fragmented across disparate practices like prompt engineering and RAG. By historicizing the discipline and providing a unified conceptual language, it elevates the conversation from tactical prompt tweaking to the strategic design of cognitive architectures. This perspective is essential for tackling the core challenges of building scalable, long-horizon, and reliable AI systems. The proposed trajectory toward a “Semantic Operating System for Context” sets a clear and ambitious research agenda for developing agents that can manage lifelong context, moving the field closer to truly collaborative and proactive AI.

Details

The Context of Context Engineering

In the rapidly evolving landscape of AI, “context engineering” has emerged as a key discipline for unlocking the potential of large language models.

See another survey on context engineering here:

A Survey of Context Engineering for Large Language Models

Authors: Lingrui Mei, Jiayu Yao, Yuyao Ge, Yiwei Wang, Baolong Bi, Yujun Cai, Jiazhi Liu, Mingyu Li, Zhong-Zhi Li, Duzhen Zhang, Chenlin Zhou, Jiayi Mao, Tianze Xia, Jiafeng Guo, Shenghua Liu

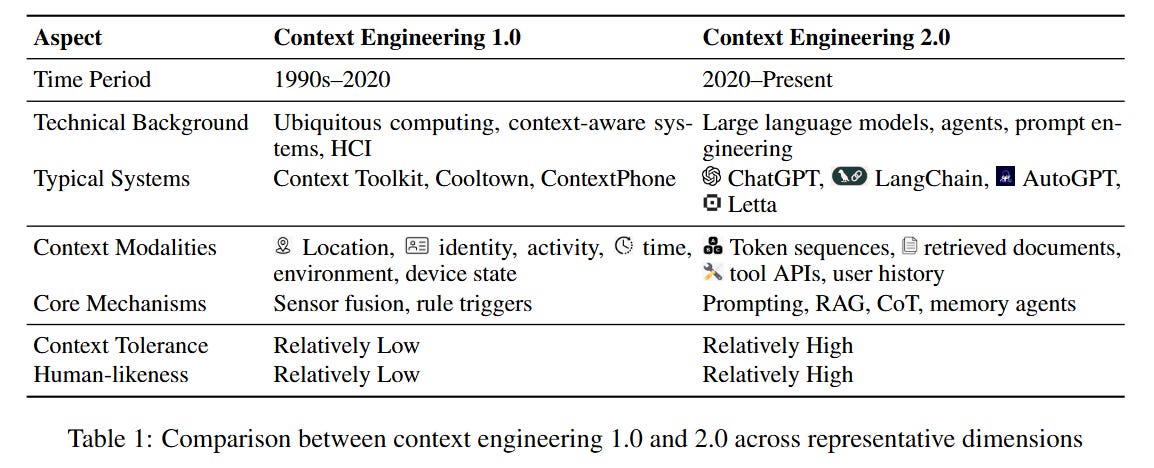

However, the term is often narrowly confined to recent techniques like prompt engineering and Retrieval-Augmented Generation (RAG). This paper, “Context Engineering 2.0,” argues for a much broader and deeper understanding, situating the practice within a rich historical arc that began over 20 years ago with early work in human-computer interaction (HCI) and the vision of Ubiquitous Computing (https://dl.acm.org/doi/pdf/10.1145/329124.329126).

The authors’ central thesis is that context engineering is fundamentally a process of entropy reduction. It is the systematic effort required to bridge the cognitive gap between human (carbon-based) intelligence, which excels at handling ambiguous, high-entropy information, and machine (silicon-based) intelligence, which requires structured, low-entropy inputs (Figure 2).

In essence, every GUI, command-line flag, or system prompt is a form of context engineering—an attempt to reduce the near-infinite entropy of human thought into a narrow channel a machine can process. This core challenge has persisted for decades, with its solutions evolving in lockstep with machine capabilities.

A Four-Stage Evolutionary Framework

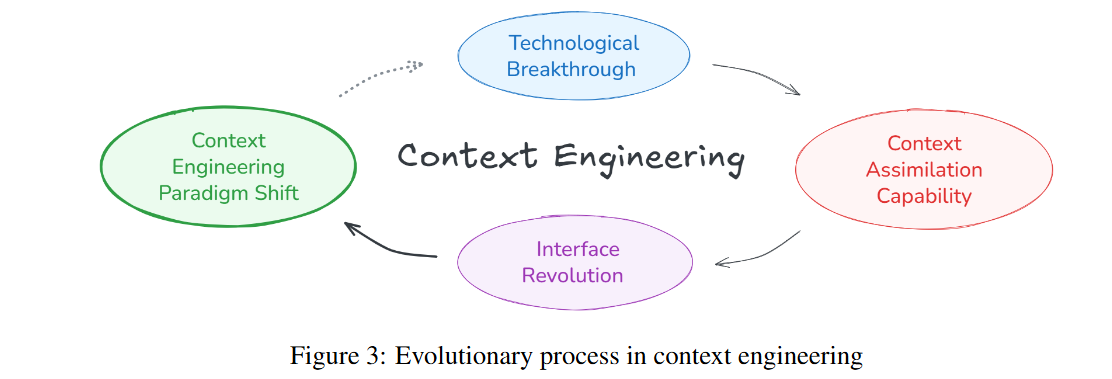

To formalize this evolution, the paper introduces a four-stage model where each paradigm shift is driven by technological breakthroughs that enhance a machine’s “Context Assimilation Capability,” thereby lowering the cost of human-AI interaction (Figure 1, Figure 3).

Era 1.0: Primitive Computation (1990s-2020): Machines possessed limited intelligence. Humans acted as “intention translators,” meticulously structuring context into low-entropy formats like GUI selections or predefined sensor data. The interaction cost was high, and the machine was a passive executor.

Era 2.0: Agent-Centric Intelligence (2020-Present): The advent of LLMs allows machines to process higher-entropy inputs like natural language and images. The AI becomes an “Initiative Agent,” capable of interpreting ambiguity and employing sophisticated mechanisms like RAG and structured memory. This is our current era.

Era 3.0: Human-Level Intelligence (Future): The AI evolves into a “Reliable Collaborator,” capable of understanding high-entropy social and emotional cues, enabling seamless human-machine collaboration.

Era 4.0: Superhuman Intelligence (Speculative): The traditional dynamic inverts. The AI, now a “Considerate Master” with a “god’s eye view,” proactively constructs context for humans, uncovers hidden needs, and guides human thought.

This framework not only provides historical clarity but also offers a predictive roadmap for the future of AI system design.

Formalizing the Discipline: From Theory to Practice

The paper grounds its framework in a set of formal definitions that build upon foundational work by Anind K. Dey, a pioneer in context-aware computing (https://link.springer.com/article/10.1007/s007790170019). Context (C) is defined as the union of characterization information for all relevant entities in an interaction. Context Engineering (CE) is then the systematic process of designing a function (f_context) that transforms this raw context to optimize for a target task (T).
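The definitions above can be written compactly. The block below is a sketch of one way to typeset them; the symbols E_rel (the set of entities relevant to the interaction), Char (the function returning the characterizing information of an entity), and the component operations φ_i are notation assumed here for illustration and may differ from the paper's exact formulation.

```latex
% Context: the union of characterization information over all relevant entities
C = \bigcup_{e \in E_{\mathrm{rel}}} \mathrm{Char}(e)

% Context engineering: choose a transformation of the raw context for task T
\mathrm{CE} : (C, T) \mapsto f_{\mathrm{context}}

% Assumed decomposition: f_context composes operations such as
% collecting, storing, managing, and selecting context
f_{\mathrm{context}}(C) = \mathcal{F}(\phi_1, \phi_2, \ldots, \phi_n)(C)
```

Read this way, prompt design, retrieval, and memory management are all particular choices of the operations φ_i rather than separate disciplines.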

To manage context effectively over time, the authors propose a layered memory architecture, formally distinguishing between:

Short-term Memory (M_s): Context with high temporal relevance (Equation 5).

Long-term Memory (M_l): Processed and abstracted context with high importance but lower temporal relevance (Equation 6).

Memory Transfer (f_transfer): The consolidation process where important short-term information becomes persistent long-term knowledge (Equation 7).
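The three definitions above can be illustrated with a minimal sketch. It is not the paper's implementation: the class names, the numeric importance score, the time window, and the threshold are all hypothetical choices made for illustration, and the rule "consolidate items that have aged out of the window but are important" is just one possible reading of f_transfer.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    importance: float  # assumed score in [0, 1]; how it is computed is left open
    created_at: float  # timestamp in seconds


@dataclass
class LayeredMemory:
    window_seconds: float = 3600.0     # hypothetical temporal-relevance window
    importance_threshold: float = 0.7  # hypothetical consolidation cutoff
    short_term: list = field(default_factory=list)
    long_term: list = field(default_factory=list)

    def observe(self, item: MemoryItem) -> None:
        """New context always enters short-term memory first."""
        self.short_term.append(item)

    def transfer(self, now: float) -> None:
        """Stand-in for f_transfer: items older than the window are either
        consolidated into long-term memory (if important) or forgotten."""
        still_recent = []
        for item in self.short_term:
            if now - item.created_at <= self.window_seconds:
                still_recent.append(item)
            elif item.importance >= self.importance_threshold:
                self.long_term.append(item)
            # Items that are both old and unimportant are dropped.
        self.short_term = still_recent
```

A real system would likely abstract or summarize an item during transfer rather than copy it verbatim; the sketch only shows the routing decision.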

The Three Pillars of Modern Context Engineering

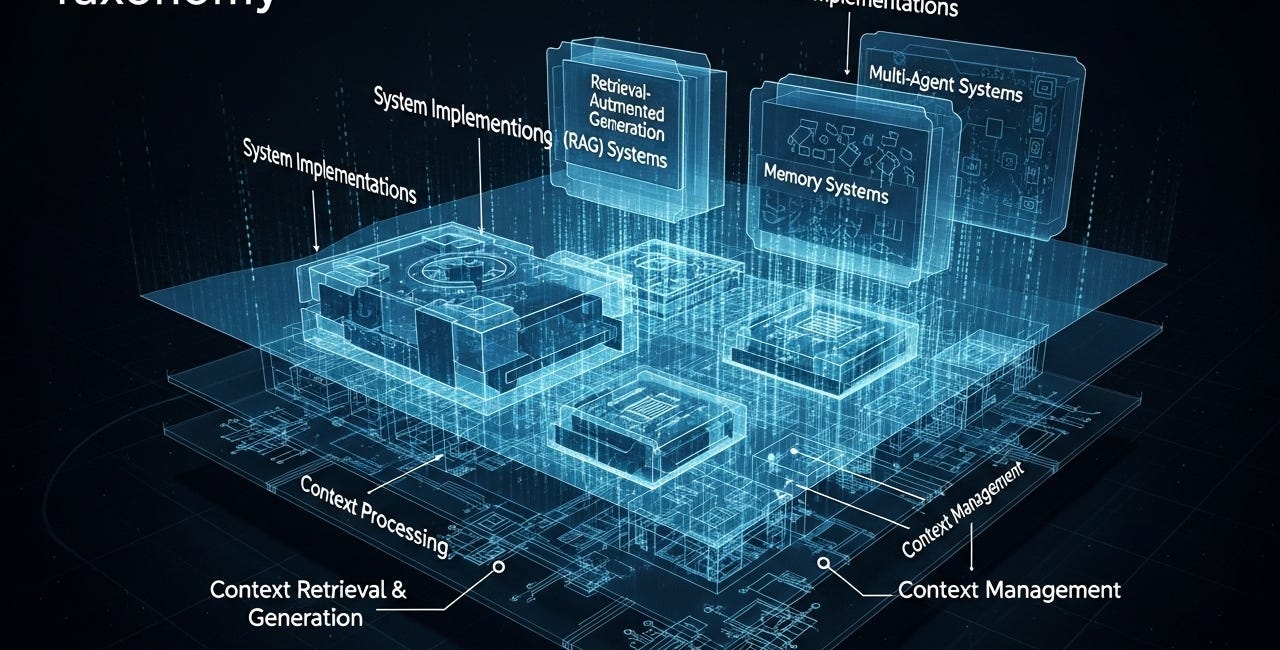

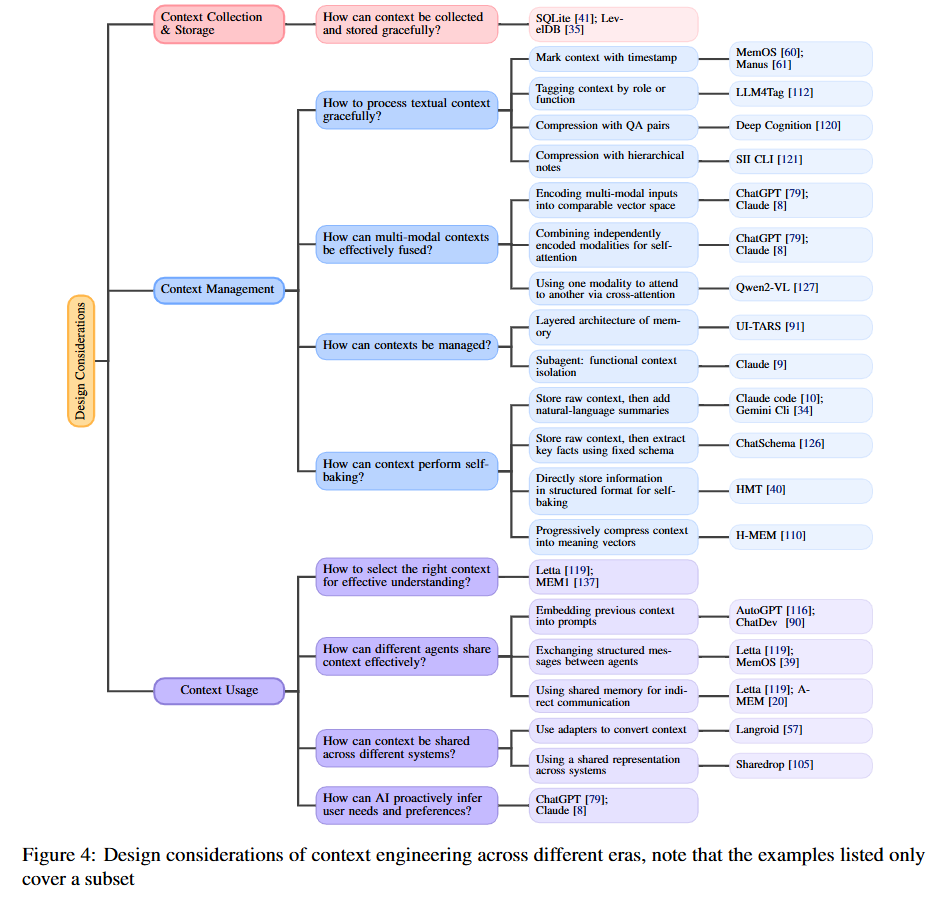

The paper organizes current Era 2.0 practices into a comprehensive design taxonomy covering three core dimensions (Figure 4).

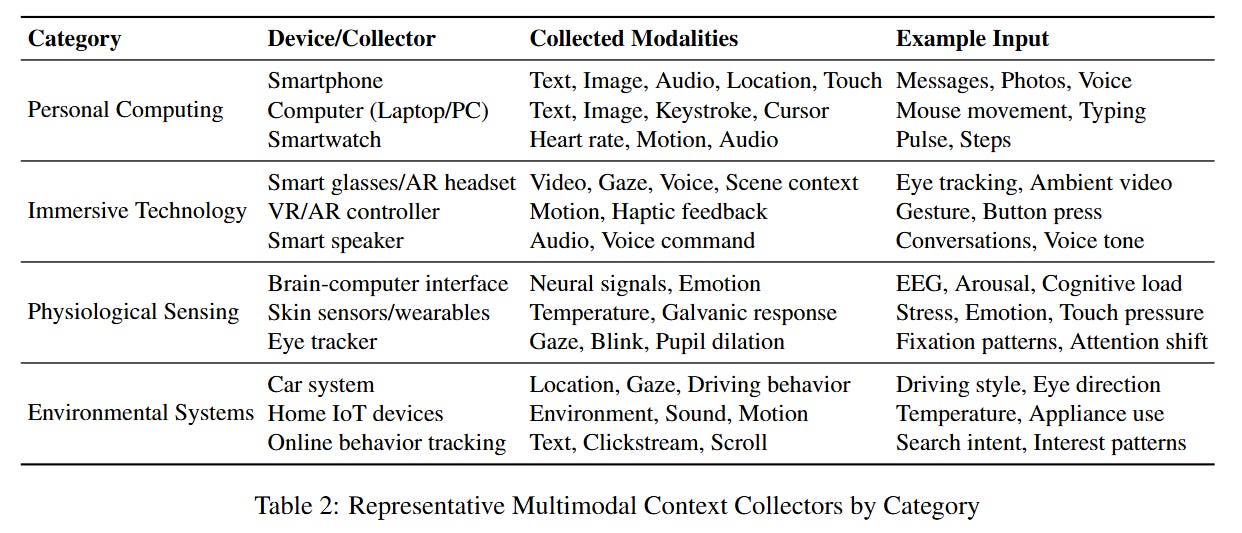

1. Context Collection and Storage

Modern systems must collect diverse, multimodal context from sources ranging from user keystrokes to physiological sensors (Table 2).

This process is guided by two key principles:

Minimal Sufficiency Principle: Collect and store only the information necessary for a task; value lies in sufficiency, not volume.

Semantic Continuity Principle: The goal is to maintain continuity of meaning, not merely raw data.

2. Context Management

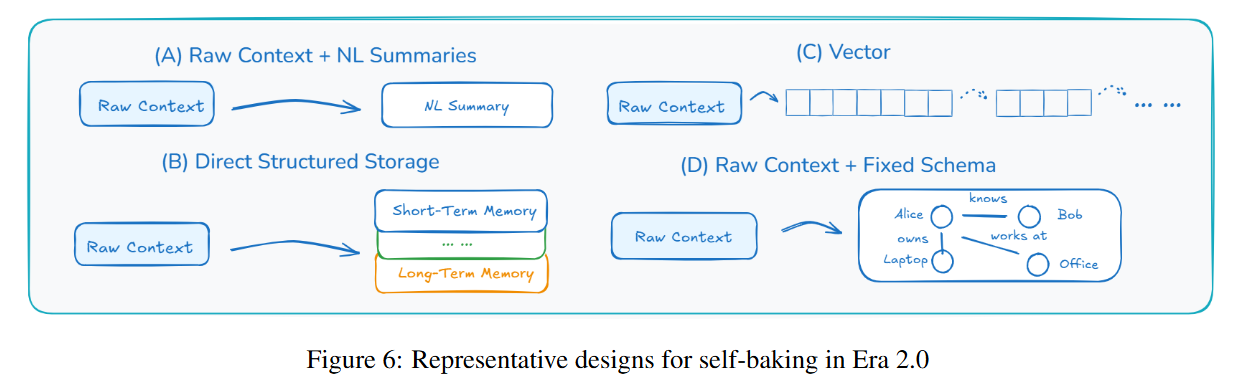

This is the heart of context engineering, where raw information is transformed into structured knowledge—a process the authors term “self-baking.” Key strategies include:

Context Abstraction: Compressing raw history through natural-language summaries, extracting key facts into a fixed schema, or converting it into dense vector embeddings (Figure 6).

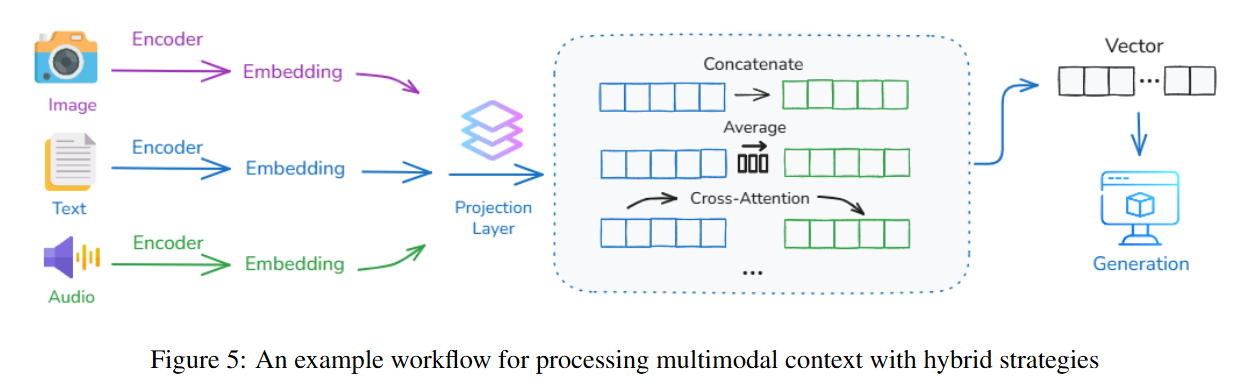

Multimodal Fusion: Handling heterogeneous inputs (text, image, audio) by mapping them into a shared vector space and using cross-attention mechanisms for fine-grained alignment and reasoning (Figure 5).

Context Isolation: Using specialized “subagents” with their own isolated context windows to prevent context pollution and improve system reliability.
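Context isolation in particular is easy to show in miniature. The sketch below assumes a hypothetical `run_subagent` helper and a toy `summarize` callable standing in for an LLM call; neither comes from the paper. The point is only that each subtask works in its own private context, and that nothing but a short digest flows back to the main context.

```python
from typing import Callable, List


def run_subagent(task: str, documents: List[str],
                 summarize: Callable[[str, List[str]], str]) -> str:
    """Run one subtask in a fresh, private context and return only a summary."""
    private_context = [f"TASK: {task}"]      # fresh context holding only the task
    private_context.extend(documents)        # raw material stays local to the subagent
    return summarize(task, private_context)  # only the digest leaves


def toy_summarize(task: str, context: List[str]) -> str:
    """Stand-in for an LLM call: report how many documents were read."""
    return f"{task}: reviewed {len(context) - 1} documents"


# The main agent's context receives one line per subtask, never the raw documents.
main_context: List[str] = ["GOAL: compare two libraries"]
for task, docs in [("read lib A docs", ["a1", "a2", "a3"]),
                   ("read lib B docs", ["b1", "b2"])]:
    main_context.append(run_subagent(task, docs, toy_summarize))
```

The trade-off is that anything the summary omits is invisible to the main agent, so the quality of the digest bounds the quality of the final answer.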

3. Context Usage

Once managed, context must be deployed effectively. This involves:

Context Selection: Viewing filtering as “attention before attention,” dynamically selecting the most relevant context subset based on semantic relevance, logical dependency, recency, and user preferences.

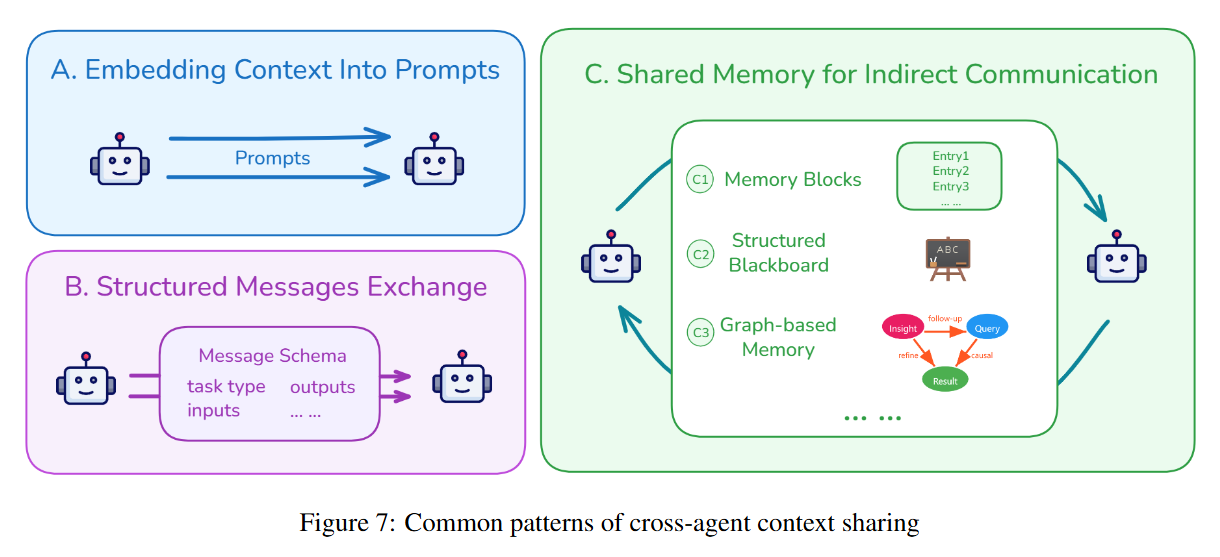

Context Sharing: In multi-agent systems, information is exchanged via structured messages or through a shared memory space (like a “blackboard” or graph-based memory) to ensure coherent collaboration (Figure 7).

Proactivity: Leveraging the accumulated context to infer latent user needs and proactively offer assistance, moving from a reactive to a cooperative paradigm.
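The selection step can be sketched as a scoring function over candidate context items. This is an illustrative sketch under simplifying assumptions, not the paper's method: it covers only two of the four signals named above (semantic relevance and recency), uses token overlap as a crude stand-in for semantic similarity, and the weights and half-life are arbitrary hypothetical values.

```python
from typing import List, Tuple


def relevance(query: str, text: str) -> float:
    """Jaccard overlap of lowercase tokens; a crude proxy for semantic similarity."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def recency(age_seconds: float, half_life: float = 3600.0) -> float:
    """Exponential decay: 1.0 for brand-new items, 0.5 after one half-life."""
    return 0.5 ** (age_seconds / half_life)


def select_context(query: str, items: List[Tuple[str, float]], k: int = 2,
                   w_rel: float = 0.7, w_rec: float = 0.3) -> List[str]:
    """Return the k highest-scoring texts; items are (text, age_seconds) pairs."""
    ranked = sorted(
        items,
        key=lambda it: w_rel * relevance(query, it[0]) + w_rec * recency(it[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]
```

In practice the relevance term would usually come from embedding similarity, and logical dependency or user preferences could enter as additional weighted terms or as hard filters applied before ranking.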

Putting Theory into Practice: Real-World Applications

To demonstrate how these principles are applied, the paper examines two case studies. The first, Google’s Gemini CLI, illustrates a project-oriented context system. It uses a hierarchy of GEMINI.md files to manage static context (like project goals and coding conventions) and AI-generated summaries to compress dynamic dialogue history, ensuring consistent and stateful interaction over long development sessions. The second, Tongyi DeepResearch, is an agent for open-ended research tasks. It tackles the challenge of extremely long interaction histories by periodically invoking a specialized model to compress its observations into a compact “reasoning state,” allowing it to maintain a coherent evidence chain without exceeding the context window. These examples provide a tangible link between the paper’s theoretical framework and its practical utility.
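The periodic-compression idea from the second case study can be sketched generically. The code below is not Tongyi DeepResearch's implementation or API; `run_with_compression`, the `every` parameter, and the toy compressor are hypothetical names chosen for illustration. It shows only the control flow: observations accumulate in a buffer, and at a fixed interval they are folded into a compact state that replaces the raw history.

```python
from typing import Callable, List


def run_with_compression(observations: List[str],
                         compress: Callable[[str, List[str]], str],
                         every: int = 3) -> str:
    """Fold observations into a compact reasoning state every `every` steps."""
    state = ""
    buffer: List[str] = []
    for obs in observations:
        buffer.append(obs)
        if len(buffer) >= every:
            state = compress(state, buffer)  # raw observations are discarded
            buffer = []
    if buffer:  # flush whatever is left at the end
        state = compress(state, buffer)
    return state


def toy_compress(state: str, buffer: List[str]) -> str:
    """Stand-in for the summarizer model: keep the first word of each observation."""
    keywords = [obs.split()[0] for obs in buffer]
    return " ".join(filter(None, [state] + keywords))
```

Because the state is rebuilt from the previous state plus the new buffer, the prompt size stays roughly bounded by the size of the state and `every` observations, regardless of how long the session runs.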

Challenges and the Path to a “Semantic Operating System”

Despite significant progress, the paper identifies several critical challenges that define the frontier of the field: performance degradation from long contexts (due in part to the O(n²) cost of Transformer self-attention in the sequence length n), system instability as context accumulates, and the profound difficulty of evaluating lifelong learning systems.
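A short worked example shows why quadratic scaling bites. It is a back-of-the-envelope estimate that assumes standard dense self-attention, in which every token attends to every other token, and it ignores the terms that grow only linearly with length as well as any sparse or optimized attention variants.

```latex
% Attention cost grows with the square of the sequence length n
\mathrm{cost}(n) \propto n^2
\quad\Longrightarrow\quad
\frac{\mathrm{cost}(k\,n)}{\mathrm{cost}(n)} = k^2

% Example: growing the context from 8{,}000 to 128{,}000 tokens
k = \frac{128{,}000}{8{,}000} = 16
\quad\Longrightarrow\quad
k^2 = 256
```

Under these assumptions, a context 16 times longer costs about 256 times more in the attention computation, which is one reason selection and compression matter even when the window is nominally large enough.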

To address these issues, the authors advocate for a paradigm shift toward building a “Semantic Operating System for Context.” This ambitious vision recasts the agent’s memory not as a passive database but as a dynamic, living system with its own cognitive functions—akin to a personalized knowledge graph or a “world model” that grows and prunes itself over time. Such an architecture is deemed essential for creating a persistent “Digital Presence,” where an individual’s context can evolve and interact with the world as a lasting form of identity.

Conclusion

“Context Engineering 2.0” is a landmark paper that provides much-needed structure, historical depth, and a forward-looking vision for a critical domain of AI. By formalizing the discipline’s core principles and organizing its practices into a coherent framework, the authors have created an essential resource for researchers and practitioners. While the framework is primarily conceptual and its more futuristic stages are speculative, its core strength lies in elevating the discourse from tactical problem-solving to the strategic design of cognitive architectures. This work offers a valuable contribution by charting a clear course for building the next generation of intelligent, collaborative, and truly context-aware AI systems.